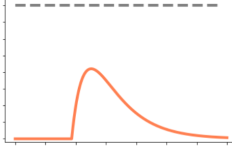

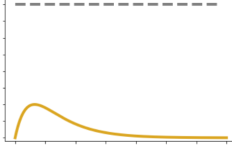

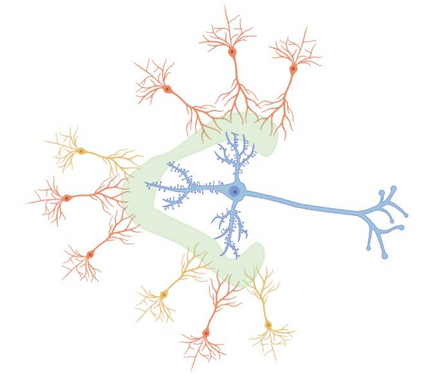

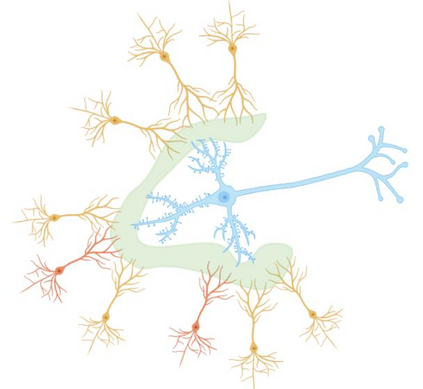

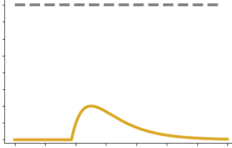

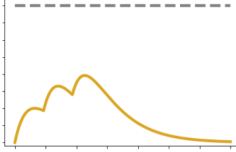

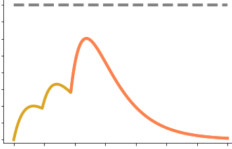

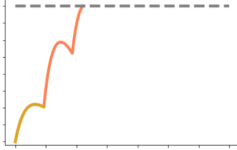

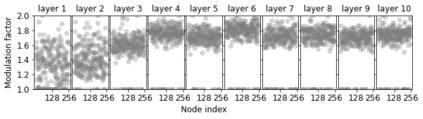

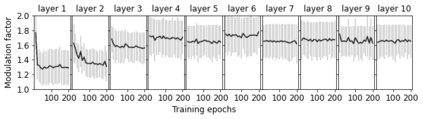

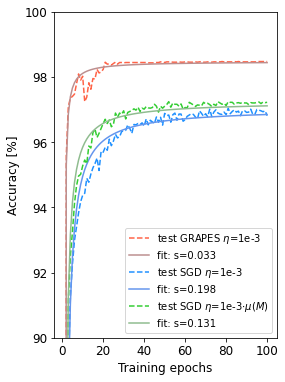

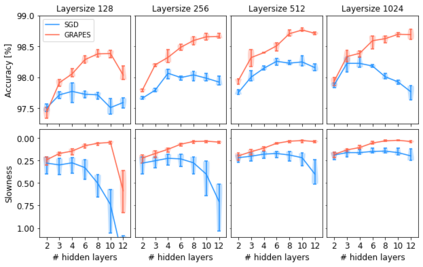

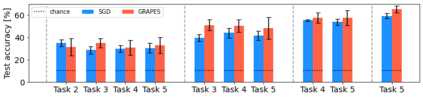

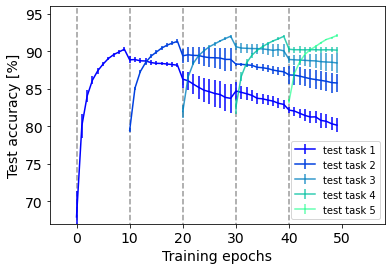

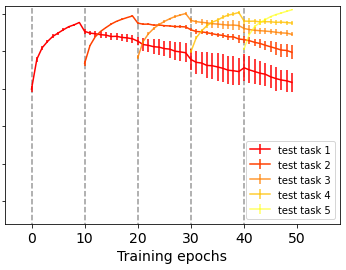

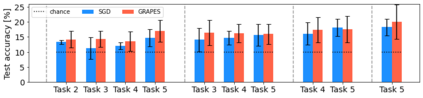

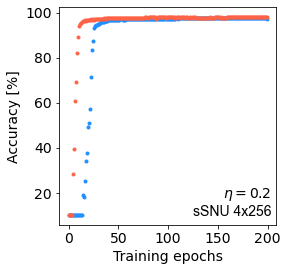

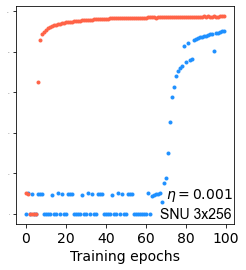

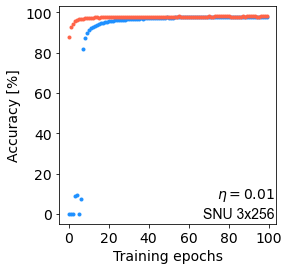

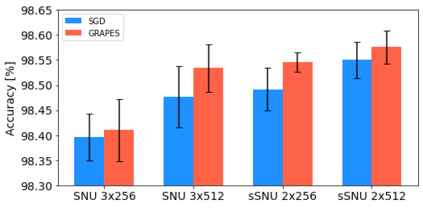

Plasticity circuits in the brain are known to be influenced by the distribution of synaptic weights through the mechanisms of synaptic integration and local regulation of synaptic strength. However, the complex interplay of stimulation-dependent plasticity with local learning signals is disregarded by most artificial neural network training algorithms devised so far. Here, we propose GRAPES (Group Responsibility for Adjusting the Propagation of Error Signals), a novel biologically inspired optimizer for artificial neural networks (ANNs) and spiking neural networks (SNNs) that incorporates key principles of synaptic integration observed in the dendrites of cortical neurons. GRAPES implements a weight-distribution-dependent modulation of the error signal at each node of the network. We show that this mechanism leads to a systematic improvement in the convergence rate of the network and substantially improves the classification accuracy of ANNs and SNNs with both feedforward and recurrent architectures. Furthermore, we demonstrate that GRAPES supports performance scalability for models of increasing complexity and mitigates catastrophic forgetting by enabling networks to generalize to unseen tasks based on previously acquired knowledge. Because GRAPES relies on local information, it minimizes the required memory resources, making it well suited for dedicated hardware implementations. Overall, our work indicates that reconciling insights from neurophysiology with machine intelligence is key to boosting the performance of neural networks.
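The abstract states only that GRAPES modulates each node's error signal according to the distribution of its weights. The sketch below illustrates that idea for a single fully connected layer under explicitly hypothetical choices: a node's "importance" is taken as the sum of the absolute values of its incoming weights, and its modulation factor as that importance divided by the layer mean. The function names (`importance_factors`, `modulation_factors`, `modulated_update`) are illustrative and are not the authors' code; the exact GRAPES rule may differ.

```python
from typing import List

# weights[i][j] is the synapse from input j to node i (assumed layout).
Matrix = List[List[float]]


def importance_factors(weights: Matrix) -> List[float]:
    """Hypothetical node importance: sum of |incoming weights| for each node."""
    return [sum(abs(w) for w in row) for row in weights]


def modulation_factors(weights: Matrix) -> List[float]:
    """Assumed rule: each node's importance divided by the layer mean."""
    imp = importance_factors(weights)
    mean = sum(imp) / len(imp)
    if mean == 0.0:
        # No weight information: leave every error signal unmodulated.
        return [1.0] * len(imp)
    return [i / mean for i in imp]


def modulated_update(weights: Matrix, delta: List[float],
                     x: List[float], lr: float) -> Matrix:
    """Gradient-descent step in which node i's error delta[i] is scaled by M_i."""
    m = modulation_factors(weights)
    return [
        [w - lr * m[i] * delta[i] * x[j] for j, w in enumerate(row)]
        for i, row in enumerate(weights)
    ]


if __name__ == "__main__":
    W = [[1.0, -1.0], [0.25, 0.25], [0.5, 0.0]]
    print(modulation_factors(W))  # → [2.0, 0.5, 0.5]
    print(modulated_update(W, delta=[0.1, 0.1, 0.1], x=[1.0, 1.0], lr=1.0))
```

Normalizing by the layer mean keeps the average modulation factor at 1, so the overall step size is preserved while error is redistributed toward nodes with larger total incoming weight. Whether the original method uses this particular normalization is an assumption of this sketch.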