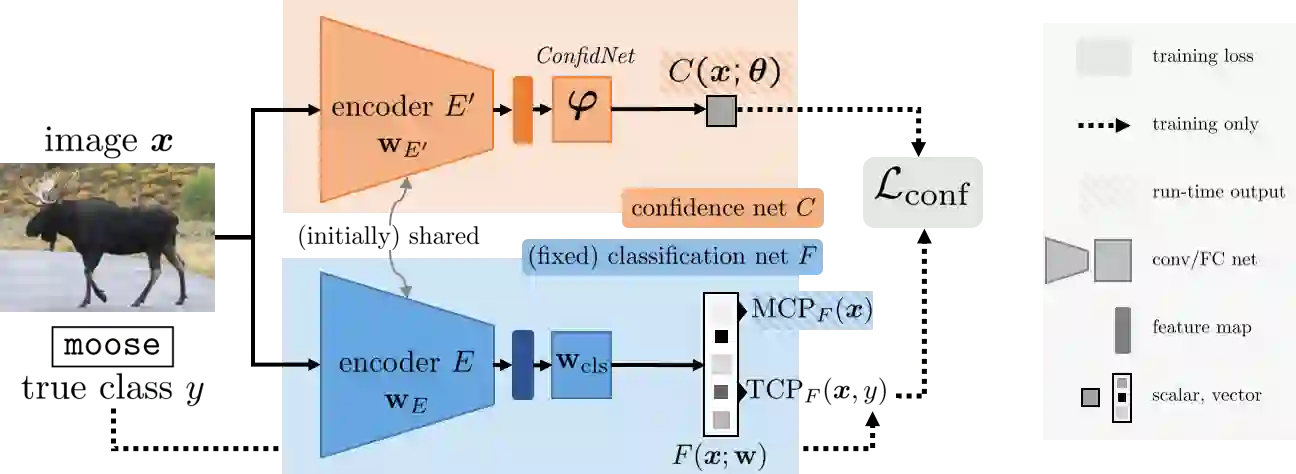

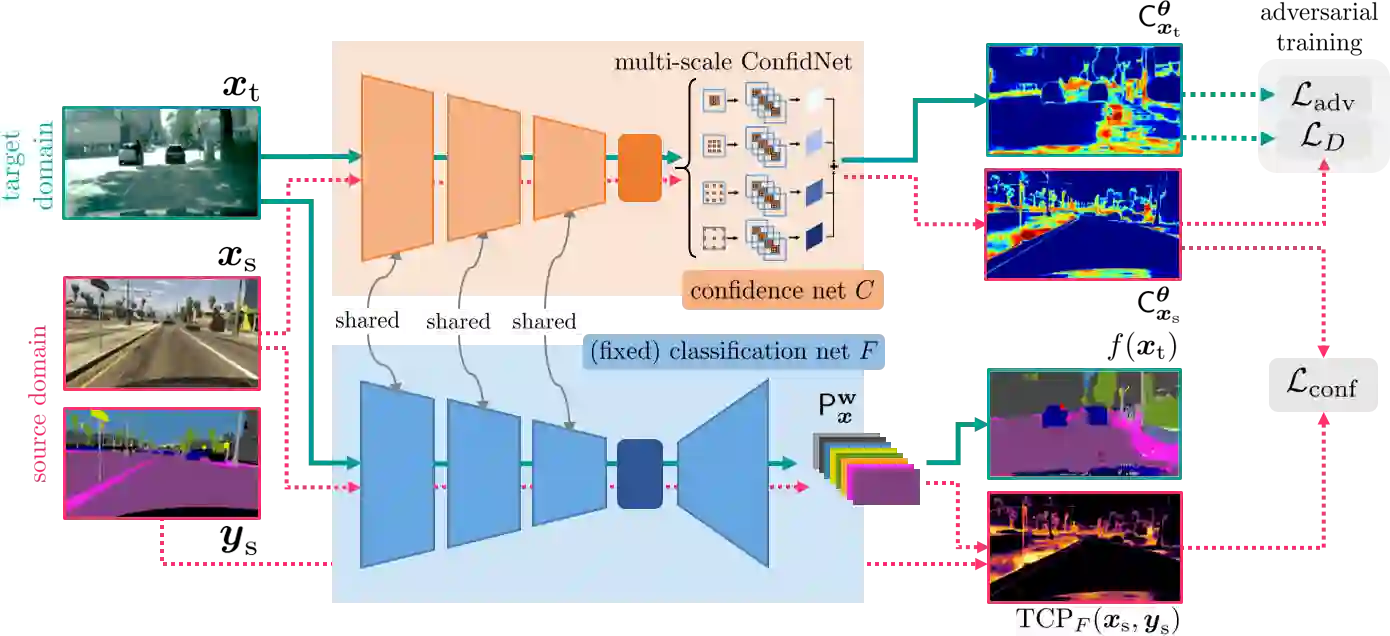

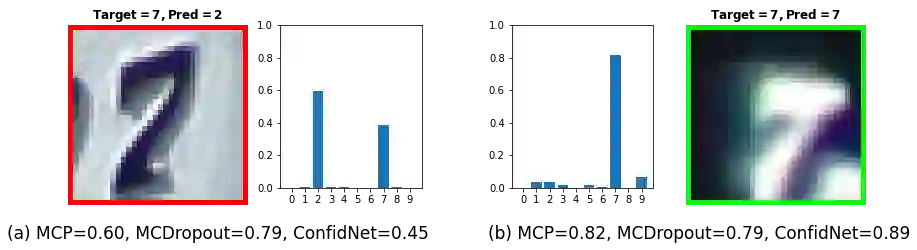

Reliably quantifying the confidence of deep neural classifiers is a challenging yet fundamental requirement for deploying such models in safety-critical applications. In this paper, we introduce a novel target criterion for model confidence, namely the true class probability (TCP). We show that TCP offers better properties for confidence estimation than the standard maximum class probability (MCP). Since the true class is by nature unknown at test time, we propose to learn the TCP criterion from data with an auxiliary model, introducing a specific learning scheme adapted to this context. We evaluate our approach on two tasks, failure prediction and self-training with pseudo-labels for domain adaptation, both of which require effective confidence estimates. Extensive experiments validate the relevance of the proposed approach on each task. We study various network architectures and experiment with small and large datasets for image classification and semantic segmentation. On every tested benchmark, our approach outperforms strong baselines.
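To make the two criteria concrete, here is a minimal sketch in plain Python that computes MCP and TCP from a classifier's softmax output. The logits and the helper names (`softmax`, `mcp`, `tcp`) are illustrative assumptions and do not come from the paper. The auxiliary model that estimates TCP at test time is not shown. The sketch only covers the case where the label is known, as it is on training data.

```python
import math


def softmax(logits):
    """Convert raw scores to class probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mcp(probs):
    """Maximum class probability: the probability of the predicted class."""
    return max(probs)


def tcp(probs, true_class):
    """True class probability: the probability assigned to the ground-truth class.

    Requires the label, so it can only be computed where labels are available.
    """
    return probs[true_class]


# Hypothetical logits for a 3-class problem (illustrative values only).
probs = softmax([2.0, 0.5, 0.1])
predicted = probs.index(max(probs))

for true_class in (0, 1):
    outcome = "correct" if predicted == true_class else "misclassified"
    print(f"true class {true_class} ({outcome}): "
          f"MCP={mcp(probs):.3f}, TCP={tcp(probs, true_class):.3f}")
```

By construction, TCP never exceeds MCP, and the two coincide when the prediction is correct. For a misclassified sample, MCP stays at the probability of the wrong predicted class, while TCP drops to the smaller probability assigned to the true class.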
