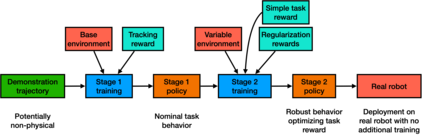

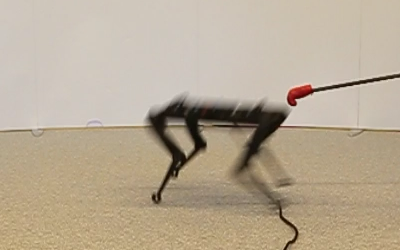

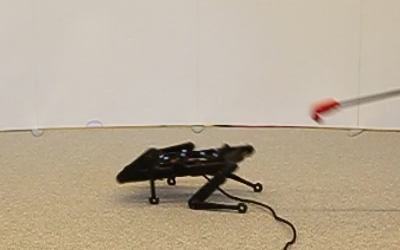

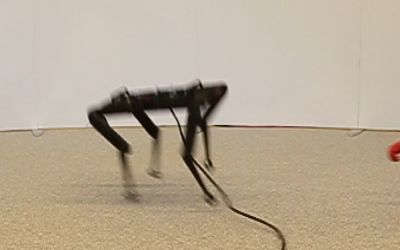

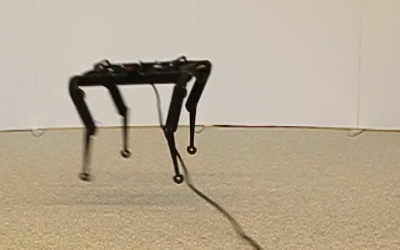

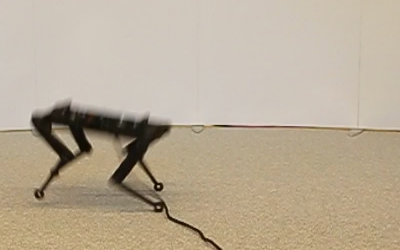

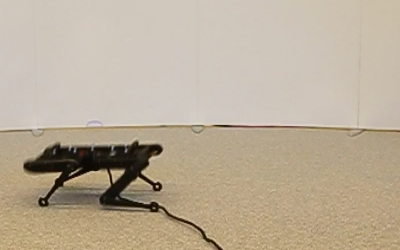

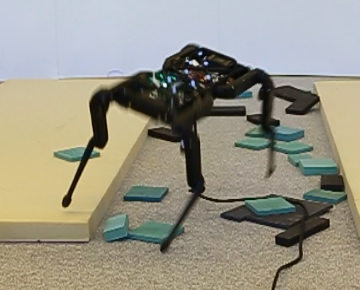

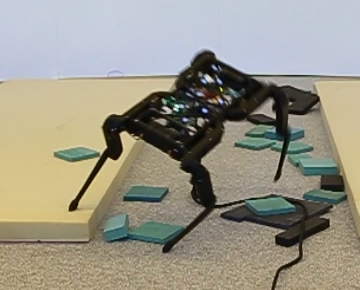

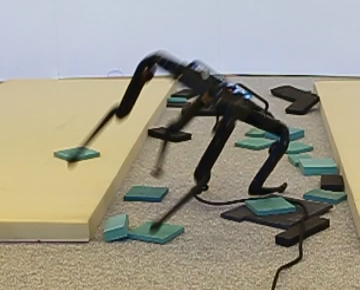

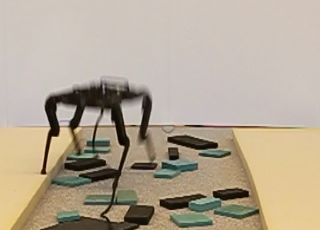

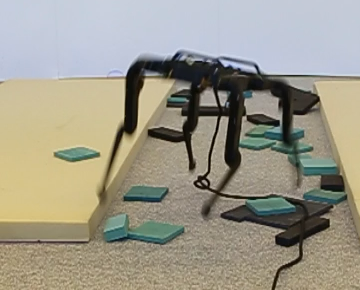

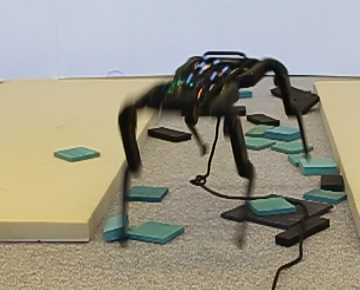

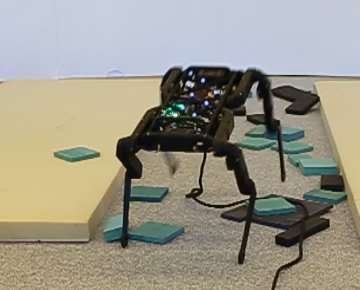

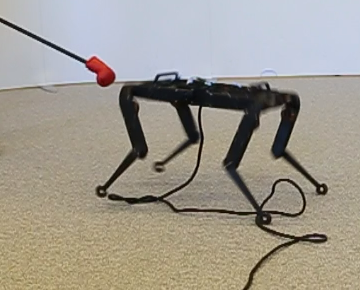

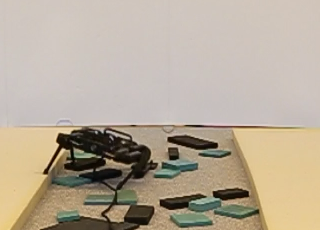

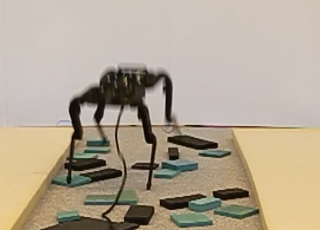

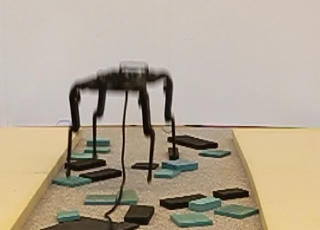

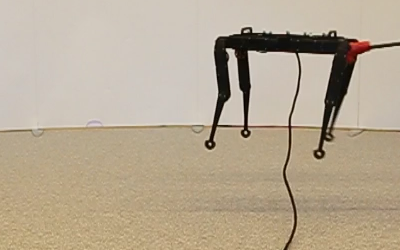

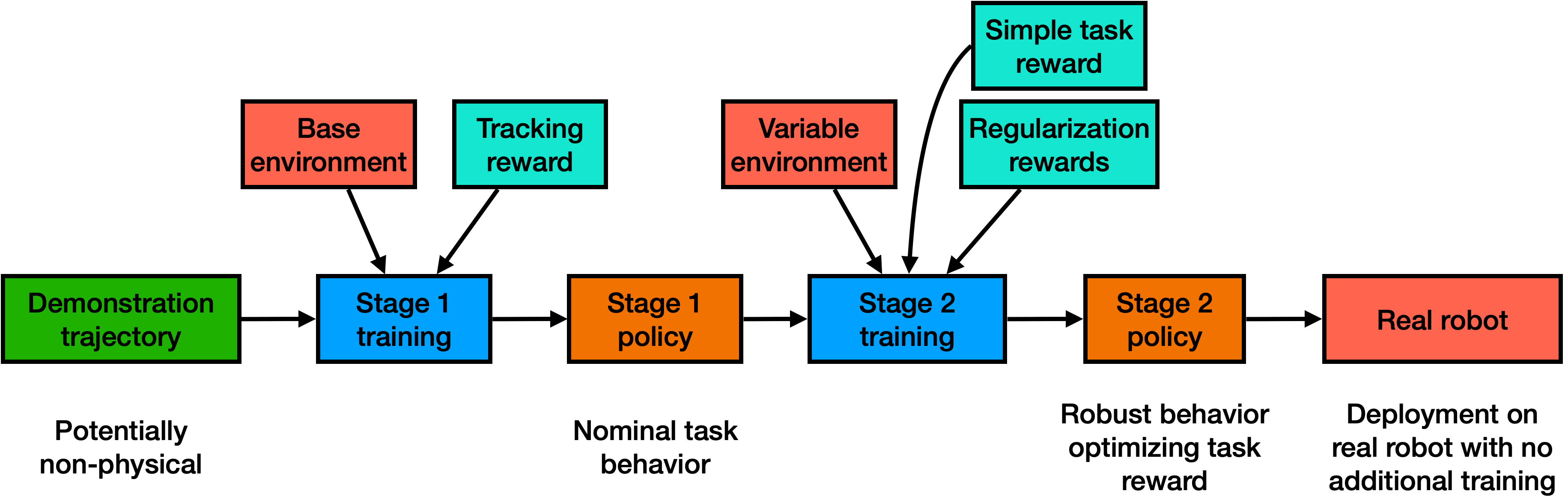

In this work we present a general, two-stage reinforcement learning approach for going from a single demonstration trajectory to a robust policy that can be deployed on hardware without any additional training. The demonstration is used in the first stage as a starting point to facilitate initial exploration. In the second stage, the relevant task reward is optimized directly and a policy robust to environment uncertainties is computed. We demonstrate and examine in detail performance and robustness of our approach on highly dynamic hopping and bounding tasks on a real quadruped robot.

翻译:在这项工作中,我们提出了一个一般的、分为两个阶段的强化学习方法,即从单一的示范轨迹走向一项强有力的政策,在不经过任何额外培训的情况下,可以在硬件上部署。演示在第一阶段作为便利初步勘探的起点。在第二阶段,直接优化相关任务奖励,并计算出一项对环境不确定因素具有活力的政策。我们详细展示和审查我们对于高度动态的购物和捆绑在真正四重机器人身上的任务的做法的绩效和稳健性。