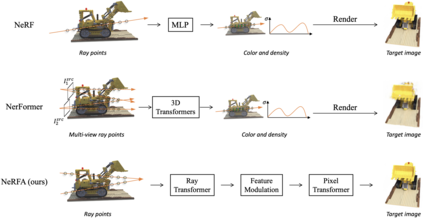

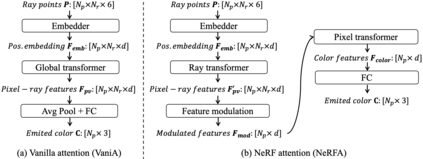

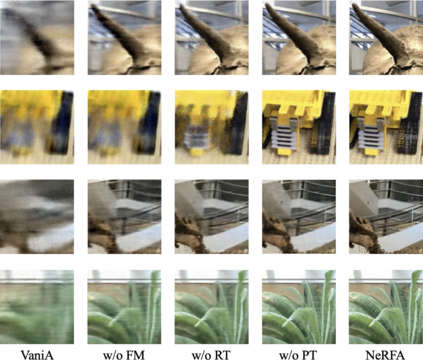

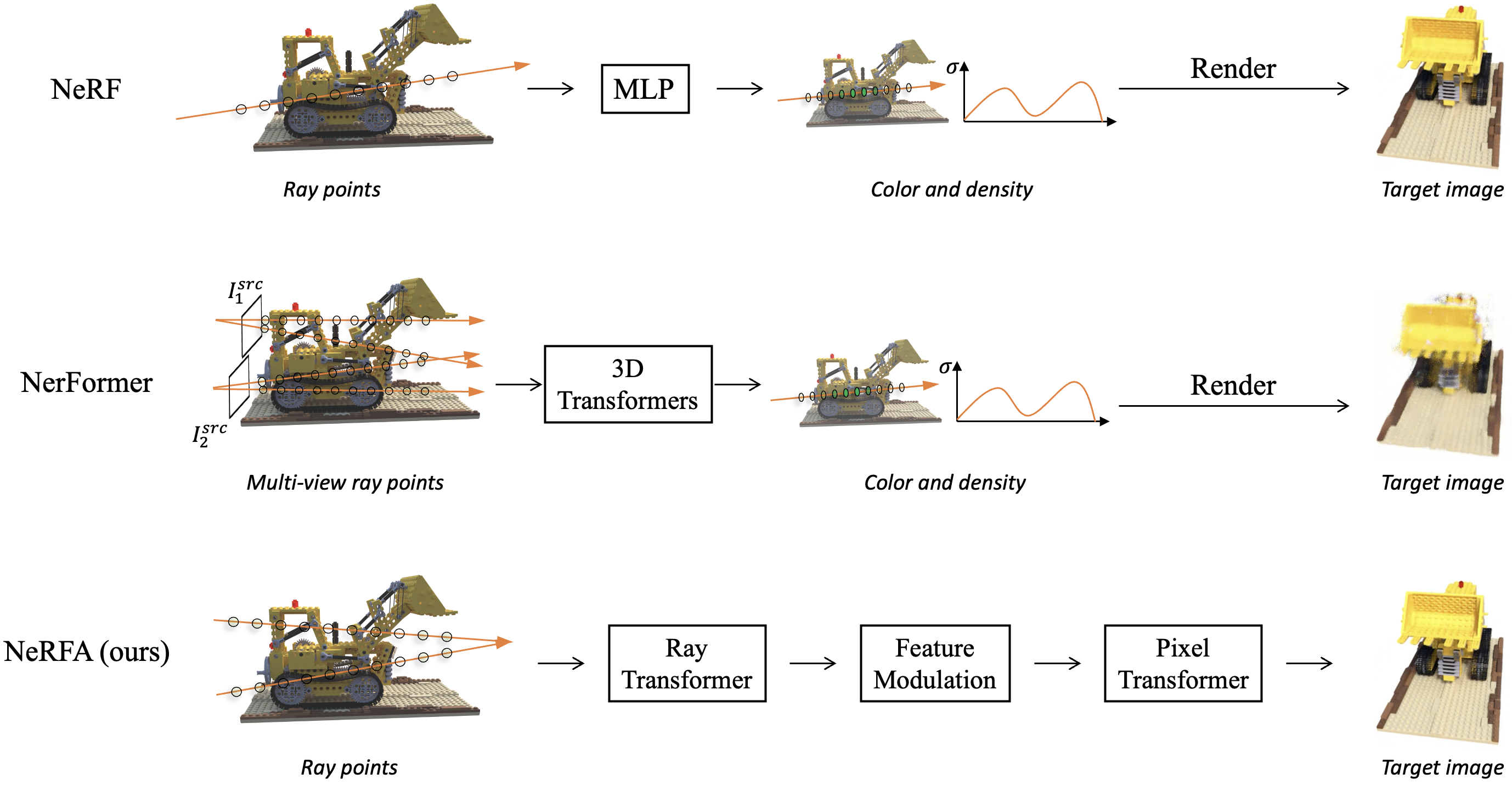

In this paper, we present a simple seq2seq formulation of view synthesis: the model takes a set of ray points as input and outputs the colors corresponding to the rays. Directly applying a standard transformer to this formulation has two limitations. First, standard attention cannot fit the volumetric rendering procedure well, so high-frequency components are missing from the synthesized views. Second, applying global attention to all rays and pixels is extremely inefficient. Inspired by the neural radiance field (NeRF), we propose NeRF attention (NeRFA) to address both problems. On the one hand, NeRFA treats the volumetric rendering equation as a soft feature modulation procedure, which gives the transformer a NeRF-like inductive bias. On the other hand, NeRFA performs multi-stage attention to reduce the computational overhead. Furthermore, the NeRFA model adopts ray and pixel transformers to learn the interactions between rays and pixels. NeRFA demonstrates superior performance over NeRF and NerFormer on four datasets: DeepVoxels, Blender, LLFF, and CO3D. In addition, NeRFA establishes a new state of the art under two settings: single-scene view synthesis and category-centric novel view synthesis. The code will be made publicly available.
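For reference, the volumetric rendering equation mentioned above is usually discretized in NeRF as per-sample weights $w_i = T_i\,(1 - e^{-\sigma_i \delta_i})$ with transmittance $T_i = \exp(-\sum_{j<i} \sigma_j \delta_j)$. The following is a minimal sketch of that quadrature in plain Python, not the NeRFA implementation. The function names `render_weights` and `modulate_and_pool`, the sample values, and the reading of "soft feature modulation" as scaling per-point features by these weights are all illustrative assumptions.

```python
import math


def render_weights(sigmas, deltas):
    """Standard NeRF quadrature weights for the samples along one ray.

    w_i = T_i * (1 - exp(-sigma_i * delta_i))
    T_i = exp(-sum_{j<i} sigma_j * delta_j)
    """
    weights = []
    optical_depth = 0.0  # running sum of sigma_j * delta_j over earlier samples
    for sigma, delta in zip(sigmas, deltas):
        transmittance = math.exp(-optical_depth)
        alpha = 1.0 - math.exp(-sigma * delta)
        weights.append(transmittance * alpha)
        optical_depth += sigma * delta
    return weights


def modulate_and_pool(features, weights):
    """Assumed form of soft modulation: scale each point feature by its
    rendering weight, then sum along the ray (hypothetical helper)."""
    dim = len(features[0])
    return [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]


# Toy ray with four samples (values chosen only for illustration).
sigmas = [0.0, 0.5, 2.0, 10.0]      # densities at the ray points
deltas = [0.25, 0.25, 0.25, 0.25]   # distances between adjacent samples
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]

weights = render_weights(sigmas, deltas)
pooled = modulate_and_pool(features, weights)
```

Because the weights telescope, they sum to one minus the transmittance remaining after the last sample. The pooled feature is therefore a soft, occlusion-aware combination of the point features, not a hard selection.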
