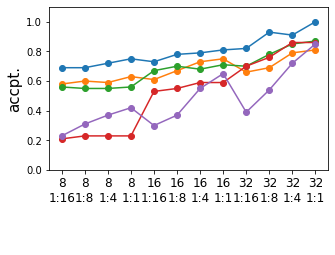

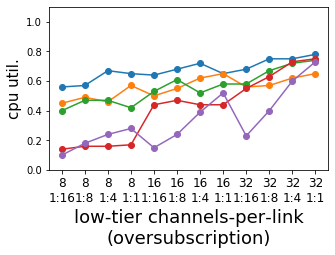

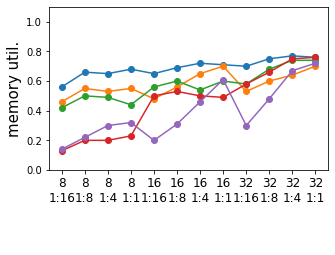

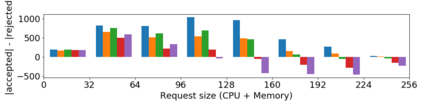

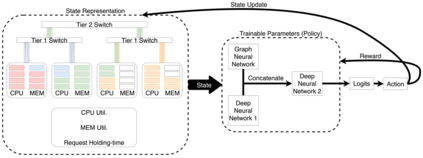

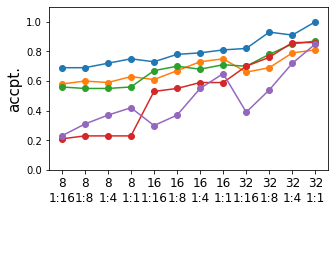

Resource-disaggregated data centres (RDDCs) propose a resource-centric, high-utilisation architecture for data centres (DCs) that avoids resource fragmentation and lets arbitrarily sized resource pools, rather than server-sized ones, be allocated to tasks. RDDCs typically place greater demand on the network, which requires more infrastructure and increases cost and power consumption. New resource allocation algorithms that co-manage server and network resources are therefore essential to ensure that allocation is not bottlenecked by the network and that requests can be served successfully with minimal networking resources. We apply reinforcement learning (RL) to this problem for the first time and show that an RL policy based on graph neural networks can learn resource allocation policies end-to-end that: outperform previous hand-engineered heuristics by up to 22.0\%, 42.6\% and 22.6\% for acceptance ratio, CPU utilisation and memory utilisation respectively; maintain performance when scaled up to RDDC topologies with $10^2\times$ more nodes than those seen during training; and achieve performance comparable to the best baselines while using $5.3\times$ fewer network resources.
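The fragmentation argument can be illustrated with a toy sketch. This is not the paper's RL method or any of its baselines: the function names, the first-fit placement rule, and the capacities and request sizes are all hypothetical, and the pooled model deliberately ignores the network cost that the abstract identifies as the real constraint in RDDCs.

```python
from typing import List, Tuple

Resources = Tuple[int, int]  # (cpu_units, mem_units); illustrative units only


def server_centric_accept(servers: List[Resources], requests: List[Resources]) -> int:
    """Count requests accepted when each request must fit on ONE server (first-fit)."""
    free = [list(s) for s in servers]
    accepted = 0
    for cpu, mem in requests:
        for s in free:
            if s[0] >= cpu and s[1] >= mem:
                s[0] -= cpu
                s[1] -= mem
                accepted += 1
                break  # placed; move on to the next request
    return accepted


def disaggregated_accept(servers: List[Resources], requests: List[Resources]) -> int:
    """Count requests accepted when CPU and memory are pooled across all servers.

    Assumption: the network never limits allocation, which is exactly the
    simplification a real RDDC allocator cannot make.
    """
    free_cpu = sum(c for c, _ in servers)
    free_mem = sum(m for _, m in servers)
    accepted = 0
    for cpu, mem in requests:
        if free_cpu >= cpu and free_mem >= mem:
            free_cpu -= cpu
            free_mem -= mem
            accepted += 1
    return accepted


# Hypothetical workload: two CPU-heavy requests strand memory on both servers,
# so the third request fits in aggregate but on no single server.
servers = [(8, 8), (8, 8)]
requests = [(5, 1), (5, 1), (6, 6)]
print(server_centric_accept(servers, requests))  # 2
print(disaggregated_accept(servers, requests))   # 3
```

In this example the server-centric placement leaves 3 CPU units and 7 memory units free on each server, enough in total for the last request but not on either server alone. Pooling removes that fragmentation, at the price of traffic between resource pools that an allocator would then have to budget for.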
