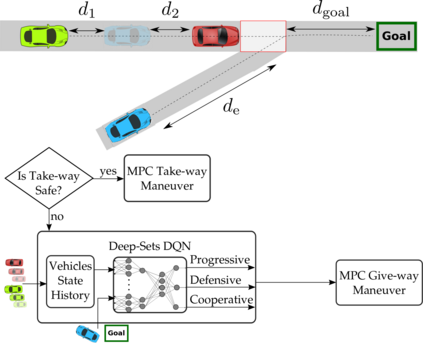

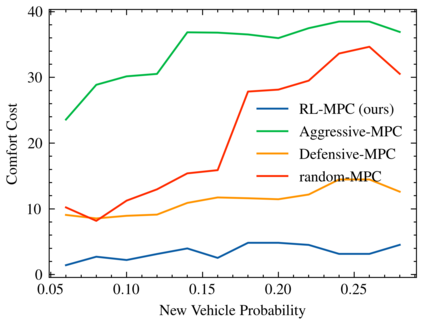

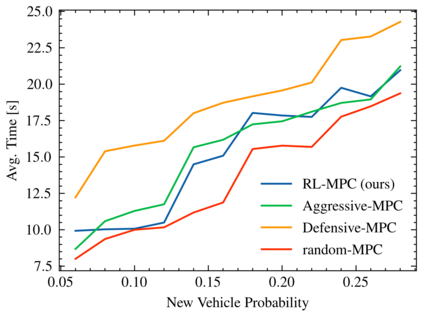

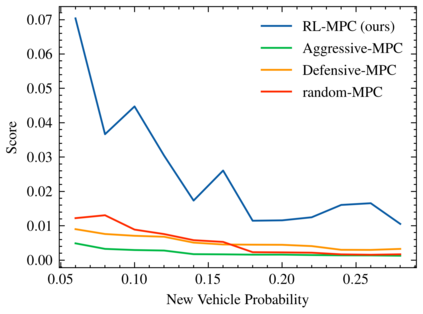

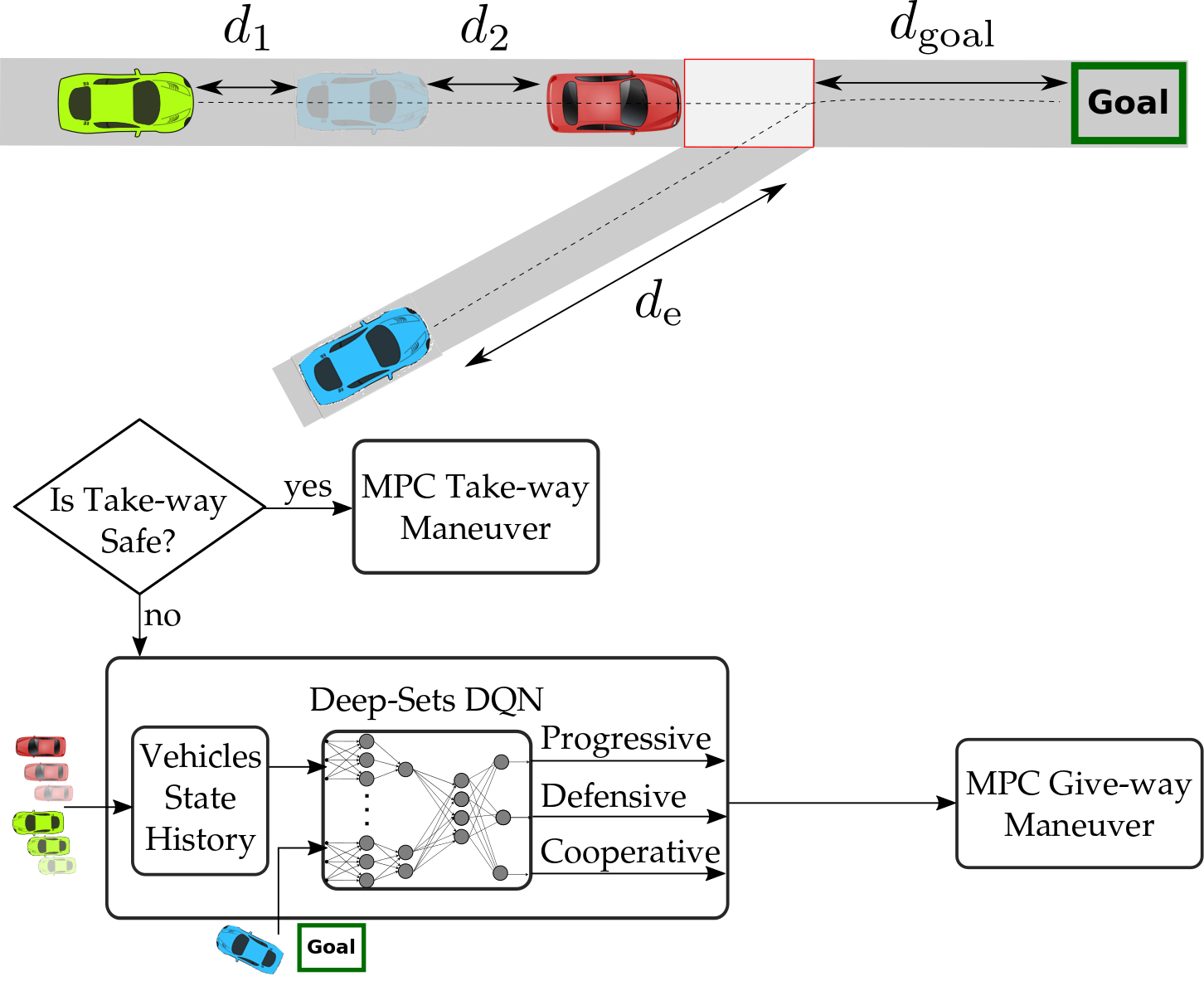

Reinforcement learning (RL) has recently been applied to challenging decision-making problems in automated driving. However, one of the main drawbacks of the RL-based policies presented so far is their lack of safety guarantees: they strive to reduce the expected number of collisions but still tolerate them. In this paper, we propose an efficient RL-based decision-making pipeline for safe and cooperative automated driving in merging scenarios. The RL agent predicts the current situation and provides high-level decisions that specify the operation mode of the low-level planner, which is responsible for safety. To learn a more generic policy, we propose a scalable RL architecture for the merging scenario that is not sensitive to changes in the environment configuration. According to our experiments, the proposed RL agent efficiently identifies cooperative drivers from their vehicle state history and generates interactive maneuvers, resulting in faster and more comfortable automated driving. At the same time, thanks to the safety constraints inside the planner, all maneuvers are collision-free and safe.
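The division of labor described above, in which a high-level decision selects the planner's operation mode and the planner itself enforces safety, can be illustrated with a minimal sketch. This is not the paper's implementation: the mode names, the `choose_mode` heuristic standing in for the learned policy, the constant-velocity prediction, and every numeric parameter are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    # Hypothetical operation modes; the abstract does not name them.
    YIELD = "yield"   # let the main-road vehicle pass first
    MERGE = "merge"   # merge in front of the main-road vehicle


@dataclass
class Vehicle:
    position: float  # longitudinal position along the merge lane, in m
    speed: float     # longitudinal speed, in m/s


def choose_mode(gap_history):
    """Stand-in for the learned RL policy (assumption, not a trained agent).

    A gap that grew over the observed history is read as a sign that the
    other driver is cooperating, so merging is requested.
    """
    if len(gap_history) >= 2 and gap_history[-1] > gap_history[0]:
        return Mode.MERGE
    return Mode.YIELD


def plan_acceleration(ego, other, mode, min_gap=10.0, horizon=2.0,
                      a_max=2.0, a_min=-4.0):
    """Low-level planner sketch: obey the mode only if a safety check passes.

    The other vehicle is predicted with constant velocity (assumption).
    The braking fallback is assumed safe here; a real planner would have
    to verify it as well.
    """
    if mode is Mode.MERGE:
        ego_future = ego.position + ego.speed * horizon + 0.5 * a_max * horizon ** 2
        other_future = other.position + other.speed * horizon
        if ego_future - other_future >= min_gap:
            return a_max  # merging keeps the required gap
    return a_min  # yield, either as requested or as the safety fallback


# Usage: the other driver opens a gap, so the policy requests a merge.
mode = choose_mode([8.0, 9.0, 12.0])
accel = plan_acceleration(Vehicle(20.0, 10.0), Vehicle(0.0, 10.0), mode)
```

The point of the design is that the safety check lives in the planner rather than in the policy, so a poor high-level decision can cost time or comfort but cannot override the gap constraint.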
