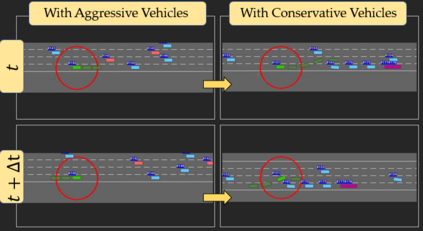

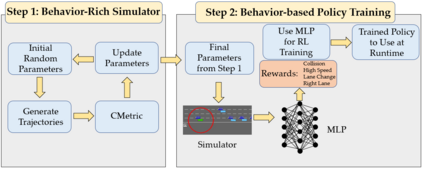

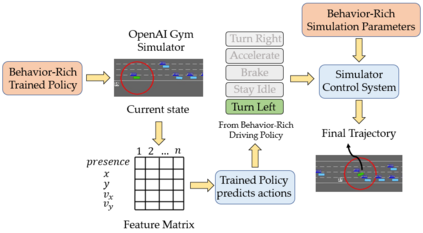

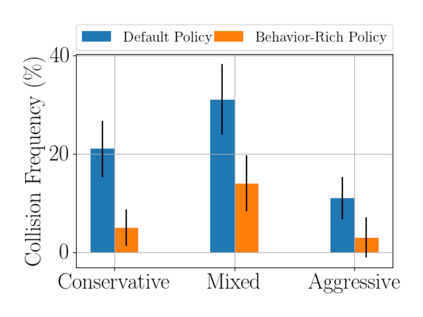

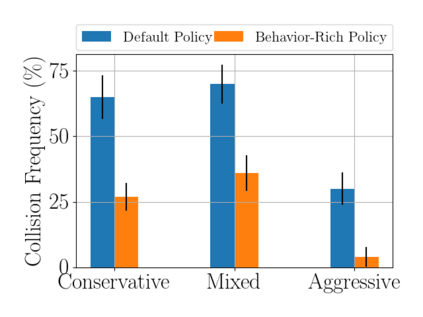

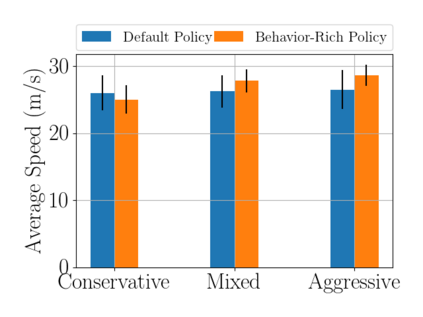

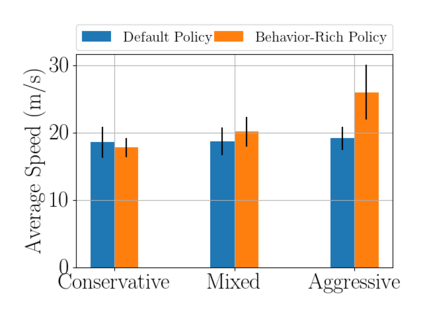

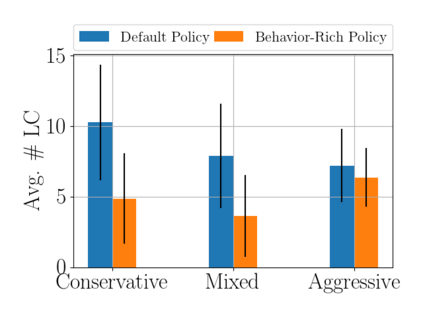

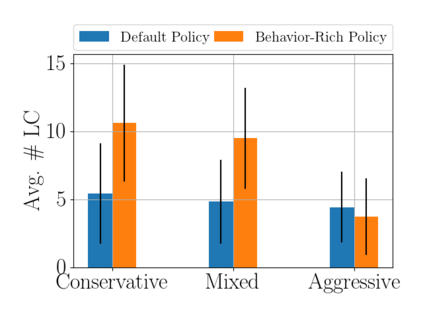

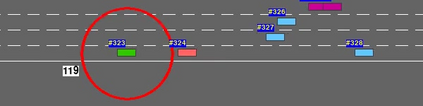

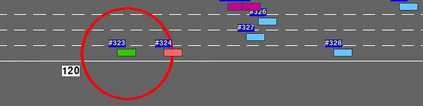

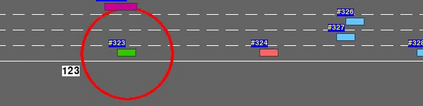

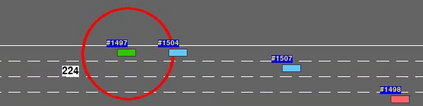

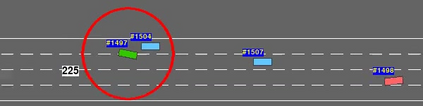

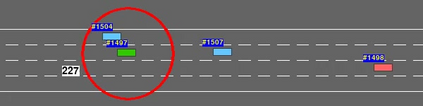

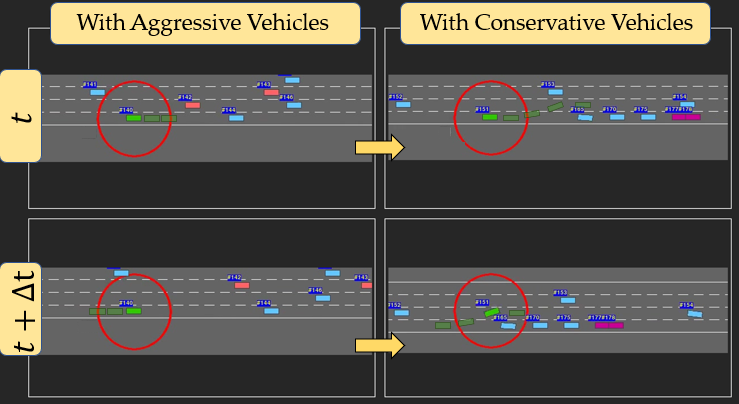

We focus on safe ego-navigation in dense simulated traffic environments populated by road agents with varying driver behavior. Navigation in such environments is challenging because the agents' heterogeneous behaviors make their actions unpredictable. To overcome these challenges, we propose a new simulation technique that enriches existing traffic simulators with behavior-rich trajectories corresponding to varying levels of aggressiveness. We generate these trajectories with the help of a driver behavior modeling algorithm. We then use the enriched simulator to train a deep reinforcement learning (DRL) policy for behavior-guided action prediction and local navigation in dense traffic. The policy implicitly models the interactions between traffic agents and computes safe trajectories for the ego-vehicle, accounting for aggressive driver maneuvers such as overtaking, over-speeding, weaving, and sudden lane changes. Our enhanced behavior-rich simulator can be used to generate datasets of trajectories corresponding to diverse driver behaviors and traffic densities, and our behavior-based navigation scheme reduces collisions by 7.13% to 8.40% while handling scenarios with $8\times$ higher traffic density than prior DRL-based approaches.
