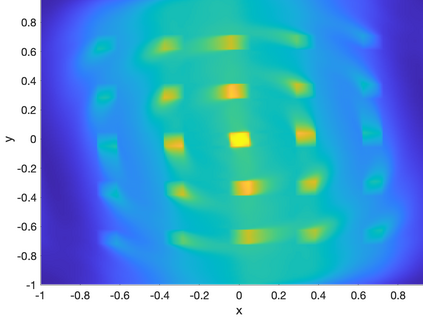

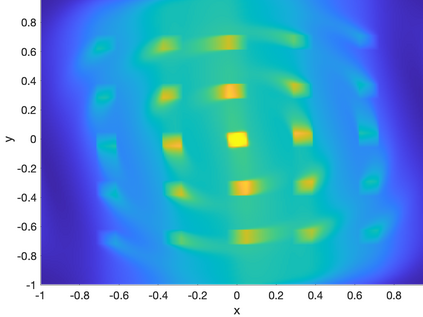

In this paper, we present a predictor-corrector strategy for constructing rank-adaptive, dynamical low-rank approximations (DLRAs) of matrix-valued ODE systems. The strategy is a compromise between (i) low-rank step-truncation approaches that alternately evolve and compress solutions and (ii) strict DLRA approaches that augment the low-rank manifold using subspaces generated locally in time by the DLRA integrator. The strategy is based on an analysis of the error between a forward temporal update into the ambient full-rank space, which is typically computed in a step-truncation approach before re-compressing, and the standard DLRA update, which is forced to live in a low-rank manifold. We use this error, without requiring its full-rank representation, to correct the DLRA solution. A key ingredient for maintaining a low-rank representation of the error is a randomized singular value decomposition (SVD), which introduces some degree of stochastic variability into the implementation. The strategy is formulated and implemented in the context of discontinuous Galerkin spatial discretizations of partial differential equations and applied to several versions of DLRA methods found in the literature, as well as a new variant. Numerical experiments comparing the predictor-corrector strategy to other methods demonstrate its robustness in overcoming the shortcomings of step-truncation or strict DLRA approaches: the former may require more memory than is strictly needed, while the latter may miss transient solution features that cannot be recovered. The effects of randomization, tolerances, and other implementation parameters are also explored.
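To illustrate how a randomized SVD can compress an error term without ever forming it as a full matrix, the following is a minimal sketch of the generic randomized range-finder approach, not the paper's implementation. The function name `randomized_svd`, the callbacks `apply_A` and `apply_At`, the oversampling parameter, and the factored test matrix `L @ R.T` are all assumptions made for illustration; the only requirement is that the error can be applied to a block of vectors.

```python
import numpy as np

def randomized_svd(apply_A, apply_At, n_cols, rank, oversample=5, seed=0):
    """Approximate rank-`rank` SVD of an m x n matrix A that is only
    available through products X -> A @ X and X -> A.T @ X.
    (Generic randomized range finder; hypothetical helper.)"""
    rng = np.random.default_rng(seed)
    # Gaussian test matrix: the source of the stochastic variability.
    Omega = rng.standard_normal((n_cols, rank + oversample))
    Y = apply_A(Omega)                 # sample the range of A
    Q, _ = np.linalg.qr(Y)             # orthonormal basis for the sample
    B = apply_At(Q).T                  # small matrix B = Q.T @ A
    Ub, s, Vt = np.linalg.svd(B, full_matrices=False)
    U = Q @ Ub                         # lift left vectors back to R^m
    return U[:, :rank], s[:rank], Vt[:rank]

# Assumed example: an error stored in factored form E = L @ R.T,
# so that E is applied to vectors without being assembled.
rng = np.random.default_rng(1)
m, n, r = 60, 40, 4
L = rng.standard_normal((m, r))
R = rng.standard_normal((n, r))

U, s, Vt = randomized_svd(
    lambda X: L @ (R.T @ X),           # X -> E @ X
    lambda X: R @ (L.T @ X),           # X -> E.T @ X
    n_cols=n,
    rank=r,
)

# The full matrix is formed here only to check the sketch.
E = L @ R.T
rel_err = np.linalg.norm((U * s) @ Vt - E) / np.linalg.norm(E)
```

Changing `seed` changes the test matrix `Omega`, so for matrices that are not exactly low rank the computed factors vary slightly from run to run; this is the kind of variability the abstract refers to.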
