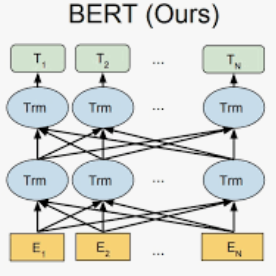

The current UMLS (Unified Medical Language System) Metathesaurus construction process, which integrates over 200 biomedical source vocabularies, is expensive and error-prone because it relies on lexical algorithms and human editors to decide whether two biomedical terms are synonymous. Recent advances in Natural Language Processing, such as Transformer models like BERT and its biomedical variants with contextualized word embeddings, have achieved state-of-the-art (SOTA) performance on downstream tasks. We aim to validate whether approaches using these BERT models can actually outperform the existing approaches for predicting synonymy in the UMLS Metathesaurus. In the existing Siamese Networks with LSTM and BioWordVec embeddings, we replace the BioWordVec embeddings with biomedical BERT embeddings extracted from each BERT model using different extraction methods. In the Transformer architecture, we evaluate different biomedical BERT models that have been pre-trained using different datasets and tasks. Given the SOTA performance of these BERT models on other downstream tasks, our experiments yield surprising results: (1) in both model architectures, the approaches employing these biomedical BERT-based models do not outperform the existing approaches using Siamese Networks with BioWordVec embeddings for the UMLS synonymy prediction task, (2) the original BioBERT large model, which has not been pre-trained with the UMLS, outperforms the SapBERT models, which have been pre-trained with the UMLS, and (3) using the Siamese Networks yields better performance for synonymy prediction than using the biomedical BERT models.
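To make the Siamese idea concrete, here is a minimal sketch, not the authors' implementation. It assumes a tiny hand-made embedding table (`TOY_VECTORS`, hypothetical values) in place of BioWordVec or BERT-derived embeddings, uses mean pooling as a stand-in for the LSTM branch, and scores a pair with the exponential of the negative Manhattan distance, a similarity commonly used with Siamese encoders but not confirmed by the abstract. The only property it is meant to illustrate is that the same encoder is applied to both terms before they are compared.

```python
import math

# Hypothetical toy word vectors (assumption for illustration only).
# A real system would look up BioWordVec or BERT-derived embeddings.
TOY_VECTORS = {
    "heart": [0.9, 0.1, 0.0],
    "attack": [0.1, 0.9, 0.0],
    "myocardial": [0.85, 0.15, 0.05],
    "infarction": [0.2, 0.8, 0.1],
    "femur": [0.1, 0.0, 0.8],
    "fracture": [0.0, 0.1, 0.9],
}
DIM = 3


def encode(term):
    """Shared encoder used for BOTH terms of a pair.

    Mean pooling over token vectors is a simple stand-in for the LSTM
    branch; unknown tokens map to the zero vector.
    """
    tokens = term.lower().split()
    vectors = [TOY_VECTORS.get(tok, [0.0] * DIM) for tok in tokens]
    if not vectors:
        return [0.0] * DIM
    return [sum(vec[i] for vec in vectors) / len(vectors) for i in range(DIM)]


def synonymy_score(term_a, term_b):
    """Return a similarity in (0, 1]: exp of the negative L1 distance."""
    enc_a = encode(term_a)
    enc_b = encode(term_b)
    l1_distance = sum(abs(x - y) for x, y in zip(enc_a, enc_b))
    return math.exp(-l1_distance)


if __name__ == "__main__":
    pairs = [
        ("heart attack", "myocardial infarction"),
        ("heart attack", "femur fracture"),
    ]
    for a, b in pairs:
        print(f"{a!r} vs {b!r}: {synonymy_score(a, b):.3f}")
```

In a trained model the encoder weights would be learned from labeled synonym pairs, and a threshold on the score (or a classification layer) would produce the synonymy prediction; both are omitted here.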
