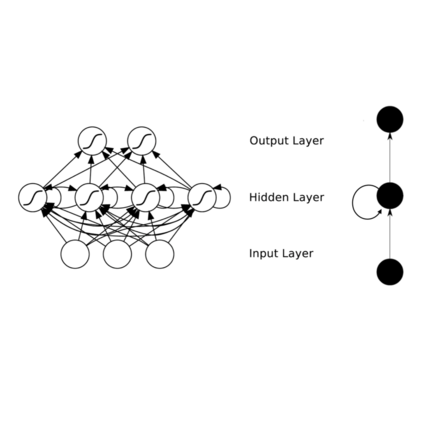

We provide a unifying framework in which artificial neural networks and their architectures can be formally described as particular cases of a general mathematical construction: machines of finite depth. Unlike neural networks, machines have a precise definition, from which several properties follow naturally. Machines of finite depth are modular (they can be combined), efficiently computable, and differentiable. The backward pass of a machine is again a machine and can be computed without overhead using the same procedure as the forward pass. We establish this statement both theoretically and practically, via a unified implementation that generalizes several classical architectures (dense, convolutional, and recurrent neural networks with a rich shortcut structure) and their respective backpropagation rules.
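
To make the claim about the backward pass concrete, here is a minimal toy sketch. It is not the authors' implementation. It assumes a machine can be modeled as a fixed-point problem `x = f(x) + x0`, where `f` only lets earlier nodes influence later ones, so iterating as many times as there are nodes reaches the exact solution. The names `W`, `solve`, `forward`, and `backward` are hypothetical, as is the choice of `tanh` as the nonlinearity. Under these assumptions, the gradient with respect to the input solves an equation of the same form, with the transposed Jacobian in place of `f`, so the same `solve` routine handles both passes.

```python
import math

# Hypothetical 3-node machine: W[i][j] is the weight from node j to node i.
# W is strictly lower-triangular, so information only flows forward;
# W[2][0] acts as a shortcut connection that skips node 1.
W = [[0.0, 0.0, 0.0],
     [0.5, 0.0, 0.0],
     [-0.3, 0.8, 0.0]]
DEPTH = len(W)  # enough iterations to reach the fixed point exactly


def solve(step, x0, depth):
    """Iterate x <- step(x) + x0 `depth` times, starting from x0."""
    x = list(x0)
    for _ in range(depth):
        x = [s + b for s, b in zip(step(x), x0)]
    return x


def forward(x0):
    """Forward pass: solve x = f(x) + x0 with f(x)_i = sum_{j<i} W[i][j] * tanh(x[j])."""
    def f(x):
        return [sum(W[i][j] * math.tanh(x[j]) for j in range(i))
                for i in range(len(x))]
    return solve(f, x0, DEPTH)


def backward(x, g):
    """Backward pass: solve y = J^T y + g, where J is the Jacobian of f at x.

    The result is the gradient of the scalar sum_i g[i] * x[i] with respect to x0.
    """
    n = len(x)

    def jt(y):
        # (J^T y)_j = sum_{i>j} W[i][j] * (1 - tanh(x[j])^2) * y[i]
        return [sum(W[i][j] * (1.0 - math.tanh(x[j]) ** 2) * y[i]
                    for i in range(j + 1, n))
                for j in range(n)]
    return solve(jt, g, DEPTH)
```

For example, `x = forward([0.2, -0.1, 0.4])` followed by `backward(x, [0.0, 0.0, 1.0])` returns the gradient of the last node's value with respect to each input. Both calls go through the same `solve` loop, which is the point of the sketch.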
