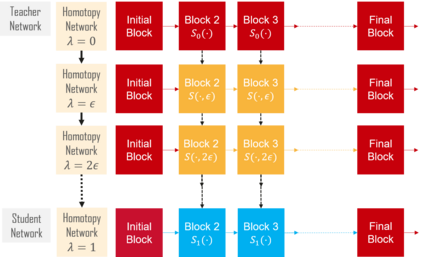

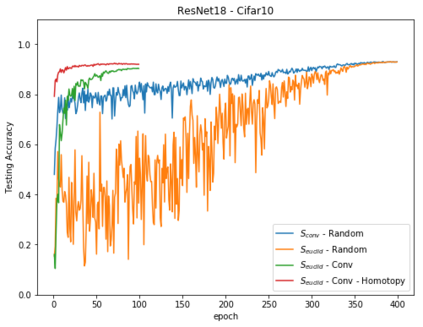

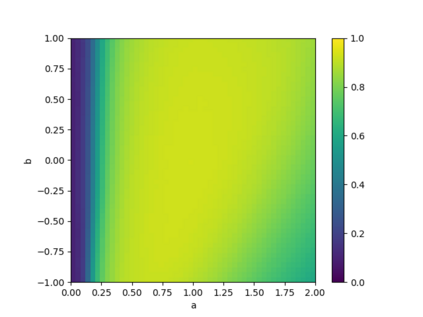

With the advent of deep learning applications on edge devices, researchers are actively trying to optimize deployment on low-power devices with restricted memory. Established compression methods such as quantization, pruning, and architecture search leverage commodity hardware. Apart from these conventional compression algorithms, one may redesign the operations of deep learning models so that they admit a more efficient implementation. To this end, we propose EuclidNet, a compression method designed for hardware implementation, which replaces multiplication, $xw$, with the squared Euclidean distance, $(x-w)^2$. We show that EuclidNet is aligned with matrix multiplication and that it can be used as a measure of similarity in convolutional layers. Furthermore, we show that under various transformations and noise scenarios, EuclidNet matches the performance of deep learning models designed with multiplication operations.
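
The alignment between the two operations can be illustrated with the identity $-(x-w)^2 = 2xw - x^2 - w^2$, summed over the elements of an input patch and a filter. The following is a minimal NumPy sketch, not the authors' implementation: the function names, the shapes, and the choice of the negative summed squared distance as the layer response are assumptions made for illustration only.

```python
import numpy as np

def dot_similarity(x, w):
    """Conventional response: inner product of each input row with each filter row."""
    return x @ w.T

def neg_sq_euclid_similarity(x, w):
    """Assumed distance-based response: negative sum of (x - w)^2 per (input, filter) pair."""
    diff = x[:, None, :] - w[None, :, :]   # shape (n_inputs, n_filters, dim)
    return -np.sum(diff ** 2, axis=-1)

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))   # 4 flattened input patches (hypothetical sizes)
w = rng.normal(size=(3, 8))   # 3 flattened filters

dot = dot_similarity(x, w)
euc = neg_sq_euclid_similarity(x, w)

# Identity: -||x - w||^2 = 2 <x, w> - ||x||^2 - ||w||^2
recon = 2 * dot - (x ** 2).sum(axis=1)[:, None] - (w ** 2).sum(axis=1)[None, :]
identity_holds = np.allclose(euc, recon)
print(identity_holds)
```

Under this assumption, the distance-based response differs from twice the inner product only by the squared norms of the input and the filter, which is one way to read the claim that the operation can act as a similarity measure.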
