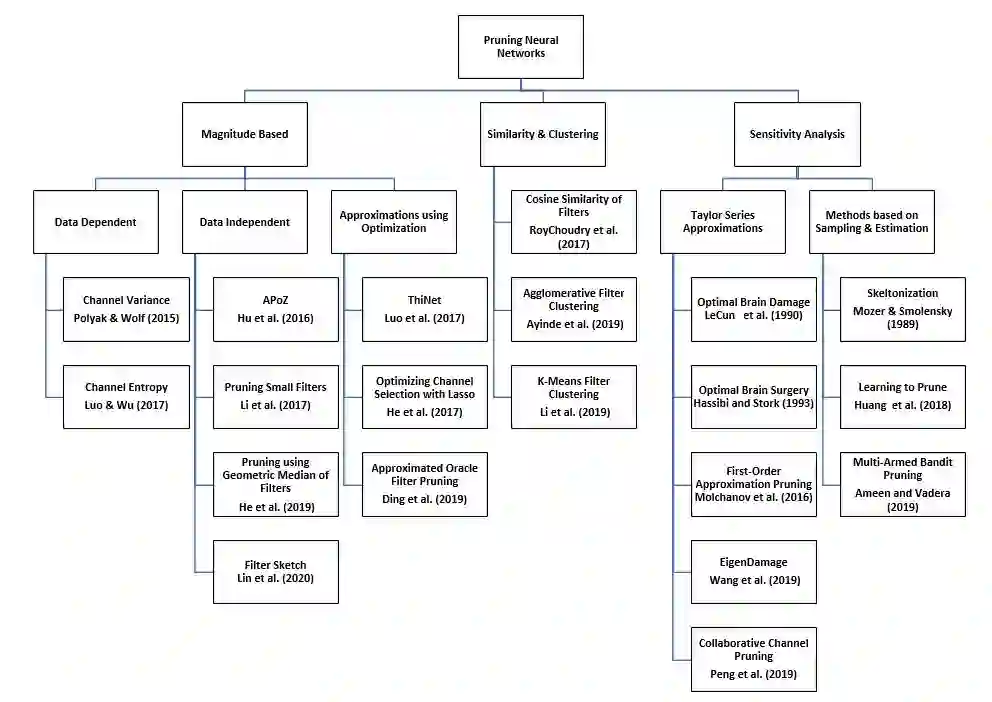

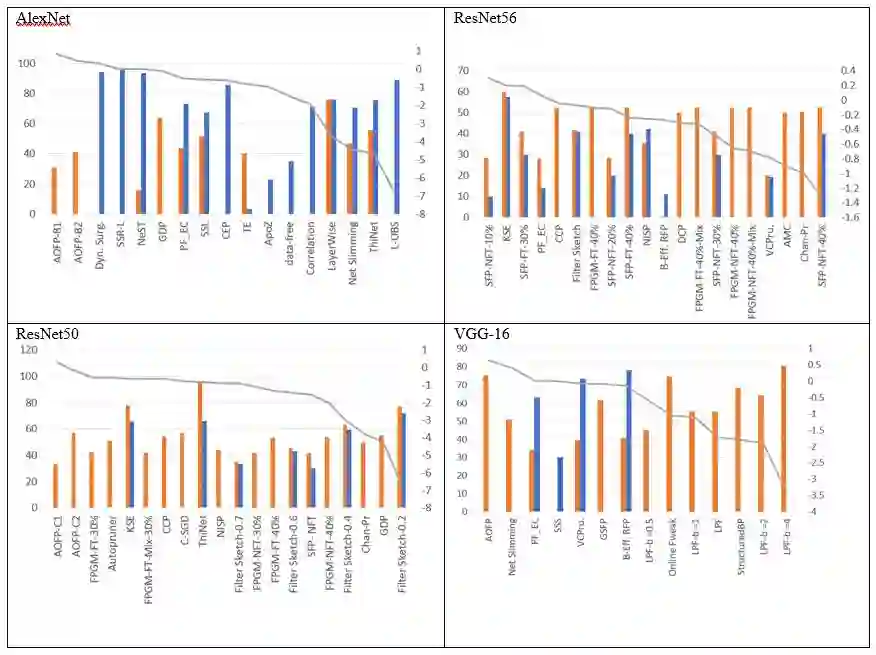

This paper presents a survey of methods for pruning deep neural networks. It begins by categorising over 150 studies according to their underlying approach and then focuses on three categories: methods that use magnitude-based pruning, methods that use clustering to identify redundancy, and methods that use sensitivity analysis to assess the effect of pruning. Some of the key influential studies within these categories are presented to highlight the underlying approaches and the results achieved. Most studies report results that are scattered across the literature, because new architectures, algorithms and data sets have emerged over time, which makes comparison across different studies difficult. The paper therefore provides a resource for the community that can be used to quickly compare the results from many different methods on a variety of data sets and a range of architectures, including AlexNet, ResNet, DenseNet and VGG. The resource is illustrated by comparing the results published for pruning AlexNet and ResNet50 on ImageNet, and ResNet56 and VGG16 on CIFAR10, to reveal which pruning methods work well in terms of retaining accuracy whilst achieving good compression rates. The paper concludes by identifying some promising directions for future research.
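As a rough illustration of the first category, magnitude-based pruning can be sketched as zeroing out the weights with the smallest absolute values. The sketch below is a minimal, pure-Python example under stated assumptions: the function names `magnitude_prune` and `compression_rate`, the flat list of weights, and the definition of compression rate as total weights divided by remaining non-zero weights are all illustrative choices, not taken from the paper or from any particular surveyed method.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude.

    Hypothetical helper for illustration; real methods typically prune
    per layer and fine-tune the network afterwards.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    return [0.0 if i in pruned_idx else w for i, w in enumerate(weights)]


def compression_rate(weights):
    """Assumed definition: total weights divided by non-zero weights."""
    nonzero = sum(1 for w in weights if w != 0.0)
    return len(weights) / nonzero if nonzero else float("inf")


# Toy weight vector standing in for one layer's parameters.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.4, -0.1]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)                    # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0, 0.4, 0.0]
print(compression_rate(pruned))  # 2.0
```

In practice, the accuracy retained after such pruning has to be measured on the data set in question, which is the kind of comparison the survey's resource is meant to support.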
