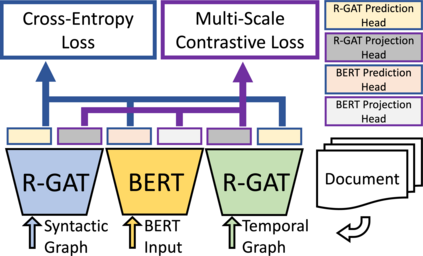

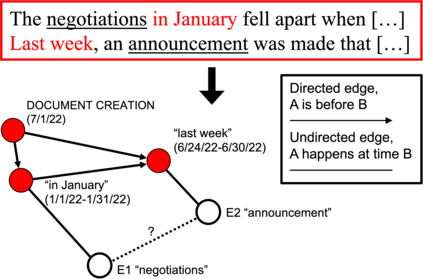

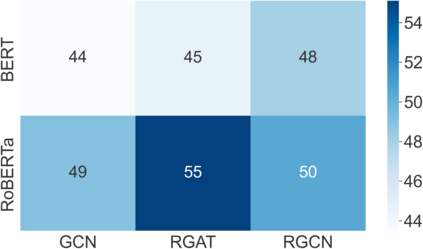

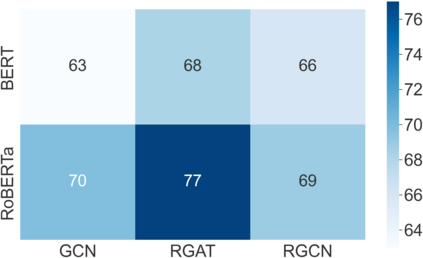

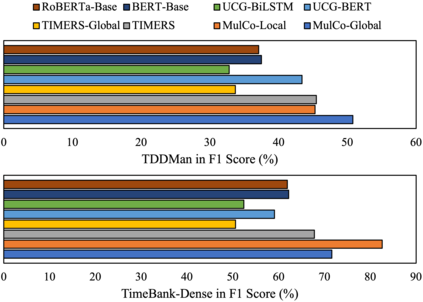

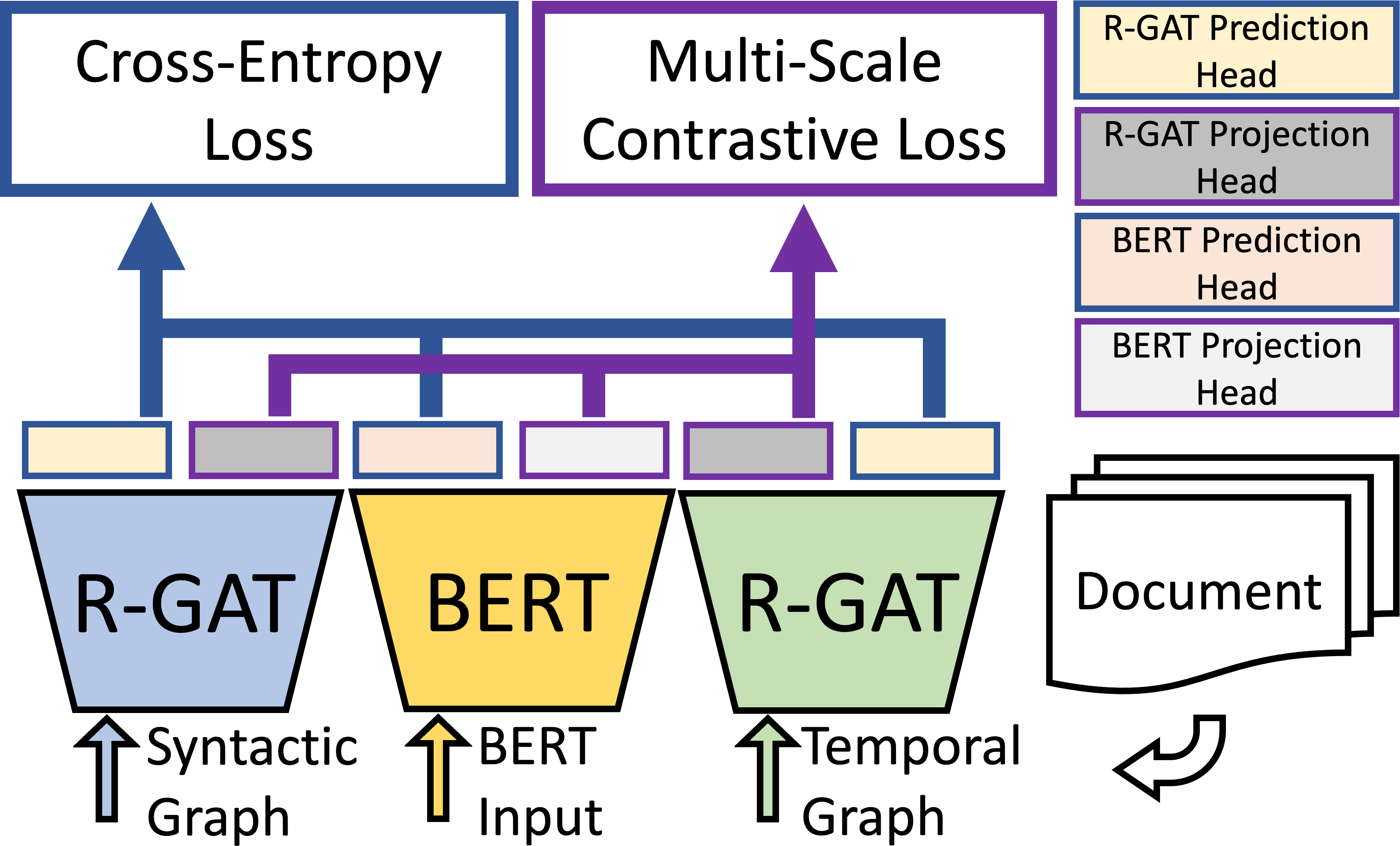

Extracting temporal relationships between pairs of events in text is a crucial yet challenging problem for natural language understanding. Depending on the distance between the events, models must learn to balance information from the local and global contexts surrounding the event pair differently when predicting their temporal relation. Learning how to fuse this information has proved challenging for transformer-based language models. We therefore present MulCo: Multi-Scale Contrastive Co-Training, a technique for better fusing local and global contextualized features. Our model uses a BERT-based language model to encode local context and a Graph Neural Network (GNN) to represent global, document-level syntactic and temporal characteristics. Unlike previous state-of-the-art methods, which either simply concatenate multi-view features or select optimal sentences using sophisticated reinforcement learning approaches, our model co-trains the GNN and BERT modules with a multi-scale contrastive learning objective. The GNN and BERT modules learn a synergistic parameterization by contrasting GNN multi-layer, multi-hop subgraphs (i.e., global context embeddings) with BERT outputs (i.e., local context embeddings) through end-to-end back-propagation. We empirically demonstrate that MulCo fuses local and global contexts encoded by BERT and GNN better than the current state of the art. Our experimental results show that MulCo achieves new state-of-the-art results on several temporal relation extraction datasets.
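To make the idea of contrasting multi-hop GNN embeddings with BERT outputs more concrete, here is a minimal sketch of one way a multi-scale contrastive loss could be computed. It is an illustration under stated assumptions, not MulCo's published objective: it assumes an InfoNCE-style loss with cosine similarity and a temperature `tau` (the default of 0.1 is arbitrary), treats each GNN layer's output as one "scale" (a larger hop radius), and simply averages the per-layer losses. The names `info_nce` and `multi_scale_contrastive_loss` are hypothetical. Plain Python lists keep the sketch dependency-free; a real implementation would use framework tensors so that gradients flow back into both the BERT and GNN modules.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two vectors (assumes neither is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv)


def info_nce(anchors: List[Vector], positives: List[Vector], tau: float = 0.1) -> float:
    """InfoNCE loss: anchors[i] should match positives[i]; every other
    positives[j] (j != i) in the batch acts as a negative."""
    assert anchors and len(anchors) == len(positives)
    total = 0.0
    for i, a in enumerate(anchors):
        logits = [cosine(a, p) / tau for p in positives]
        m = max(logits)  # log-sum-exp trick for numerical stability
        log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_denom - logits[i]  # -log softmax of the matching pair
    return total / len(anchors)


def multi_scale_contrastive_loss(
    bert_embs: List[Vector],
    gnn_layer_embs: List[List[Vector]],
    tau: float = 0.1,
) -> float:
    """Hypothetical multi-scale objective: contrast BERT (local) embeddings
    with the GNN (global) embeddings from every layer, i.e. every hop
    radius, and average the per-layer InfoNCE losses."""
    losses = [info_nce(bert_embs, layer, tau) for layer in gnn_layer_embs]
    return sum(losses) / len(losses)


# Usage: two event pairs, toy 2-d embeddings, GNN outputs from two layers.
bert = [[1.0, 0.0], [0.0, 1.0]]
gnn = [[[0.9, 0.1], [0.1, 0.9]], [[1.0, 0.2], [0.2, 1.0]]]
print(multi_scale_contrastive_loss(bert, gnn))
```

In this sketch, the other event pairs in the batch serve as negatives, so minimizing the loss pulls each local embedding toward the global embedding of the same event pair at every hop radius while pushing it away from the embeddings of other pairs.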

翻译:在文本中提取事件对等之间的时间关系对于自然语言理解来说是一个至关重要但具有挑战性的问题。根据事件之间的距离,模型必须学会从地方和全球背景中不同地平衡信息,以进行时间关系预测。学习如何将这一信息结合到基于变压器的语言模型上,因此,我们介绍MulCo:多层次对比共修培训,这是更好地融合本地和全球背景特征的一种技术。我们的模型使用基于BERT的语言模型来编码本地背景和图形神经网络(GNN),以代表全球文档级合成和时间特性。与以往的时态方法不同,前者使用简单对多视图特征的配置,或使用复杂的强化学习方法选择最佳句子。因此,我们介绍MulCo:多层次对比共读培训,这是一种更好地融合本地和全球背景特征的技术。GNNN和BERT模块通过对比GNN-NNM(即全球背景嵌入和BERT)的新结果,与BERT(iroal-al-al-lational-lational-cal-col-cal-col-cal-col-cal-col-cal-cal-col-col-col-col-col-col-lation) 展示,通过我们当前、当地背景和当地背景显示GNFislation-B-C-C-B-C-C-C-cal-cal-cal-B-C-C-cal-cal-cal-C-cal-cal-C-C-cal-cal-cal-cal-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-lation-C-C-C-C-leval-leval-d-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-l-l-C-C-C-C-C-C-C-C-C-C-C-C-C-