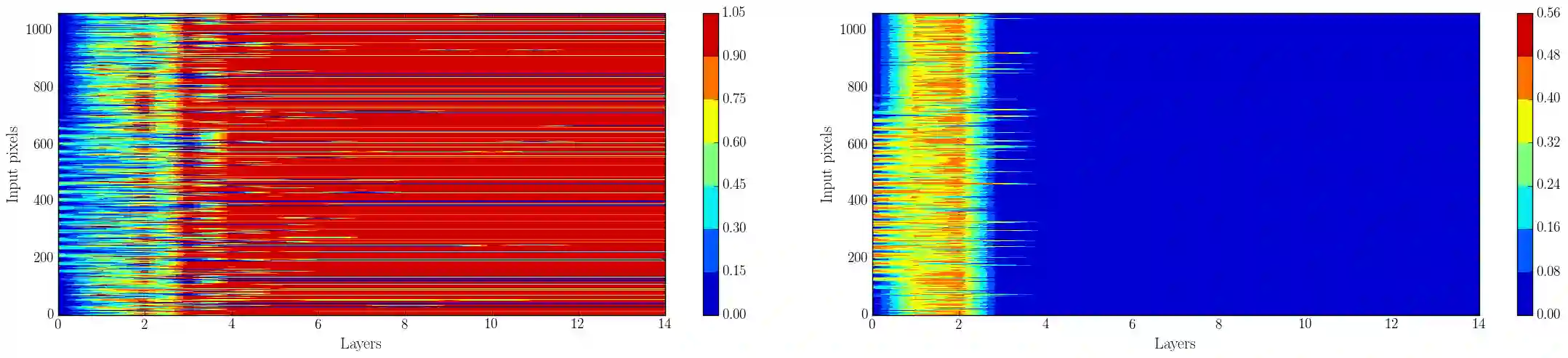

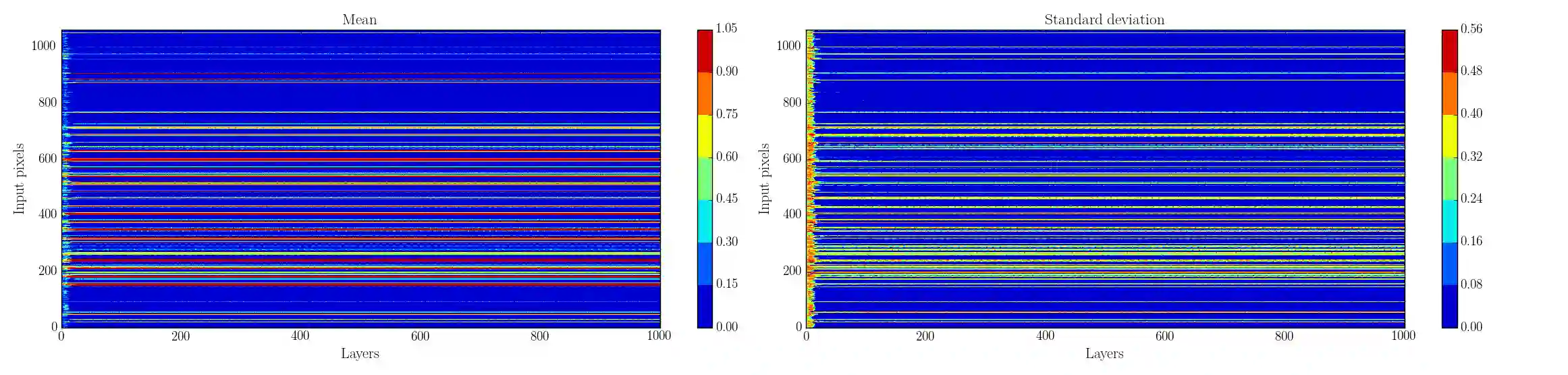

In this study, we analyze the input-output behavior of residual networks from a dynamical systems point of view by disentangling the residual dynamics from the output activities before the classification stage. For a network with simple skip connections between successive layers, a logistic activation function, and weights shared across layers, we show analytically that the residuals corresponding to each input dimension exhibit cooperation and competition dynamics. When these networks are interpreted as nonlinear filters, the steady-state values of the residuals in attractor networks indicate the features common to different input dimensions that the network observed during training and encoded in those components. When the residuals do not converge to an attractor state, their internal dynamics are separable for each input class, and the network can still reliably approximate the output. We provide analytical and empirical evidence that residual networks classify inputs by integrating the transient dynamics of the residuals, and we show how the network responds to input perturbations. We compare the network dynamics of a ResNet and a Multi-Layer Perceptron and show that the internal dynamics and the noise evolution are fundamentally different in these networks, and that ResNets are more robust to noisy inputs. Based on these findings, we also develop a new method for adjusting the depth of residual networks during training. After the depth of a ResNet is pruned with this algorithm, the network can still classify inputs with high accuracy.
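The setting described in the abstract (skip connections between successive layers, a logistic activation, and shared weights) can be illustrated with a minimal sketch. It assumes the layer update takes the form x_{t+1} = x_t + σ(W x_t + b), which is one common reading of a "simple skip connection" and is not taken from the paper itself. The function names, the random weights, the dimension `n`, and the depth are all hypothetical choices made for illustration. The sketch records the per-layer residuals, checks that the pre-classification state is the input plus the sum of the residuals, and measures how a small input perturbation evolves through a residual stack and through a plain stack without skip connections.

```python
import numpy as np


def logistic(z):
    """Element-wise logistic (sigmoid) activation."""
    return 1.0 / (1.0 + np.exp(-z))


def resnet_trajectory(x0, W, b, depth):
    """Iterate the assumed update x_{t+1} = x_t + logistic(W x_t + b).

    W and b are shared by every layer. Returns the layer states with
    shape (depth + 1, n) and the per-layer residuals with shape (depth, n).
    """
    states = [np.asarray(x0, dtype=float)]
    residuals = []
    for _ in range(depth):
        r = logistic(W @ states[-1] + b)  # residual produced by this layer
        residuals.append(r)
        states.append(states[-1] + r)     # skip connection adds it to the state
    return np.array(states), np.array(residuals)


def mlp_trajectory(x0, W, b, depth):
    """Same shared-weight stack without skip connections, for comparison."""
    states = [np.asarray(x0, dtype=float)]
    for _ in range(depth):
        states.append(logistic(W @ states[-1] + b))
    return np.array(states)


# Hypothetical, untrained parameters chosen only to make the sketch runnable.
rng = np.random.default_rng(0)
n, depth = 4, 20
W = rng.normal(scale=0.5, size=(n, n))
b = rng.normal(scale=0.1, size=n)
x0 = rng.normal(size=n)

states, residuals = resnet_trajectory(x0, W, b, depth)

# The state before classification is the input plus the accumulated residuals.
assert np.allclose(states[-1], x0 + residuals.sum(axis=0))

# Propagate a small input perturbation through both stacks, layer by layer.
eps = 1e-3 * rng.normal(size=n)
noisy_states, _ = resnet_trajectory(x0 + eps, W, b, depth)
resnet_gap = np.linalg.norm(noisy_states - states, axis=1)

mlp_states = mlp_trajectory(x0, W, b, depth)
mlp_noisy = mlp_trajectory(x0 + eps, W, b, depth)
mlp_gap = np.linalg.norm(mlp_noisy - mlp_states, axis=1)

print("ResNet perturbation norm per layer:", resnet_gap)
print("MLP perturbation norm per layer:   ", mlp_gap)
```

With random, untrained weights the printed norms only show how the two architectures propagate a perturbation; they do not reproduce the robustness results reported in the study. Plotting the rows of `residuals` against the layer index is a simple way to see whether each component settles to a steady value or keeps changing.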
