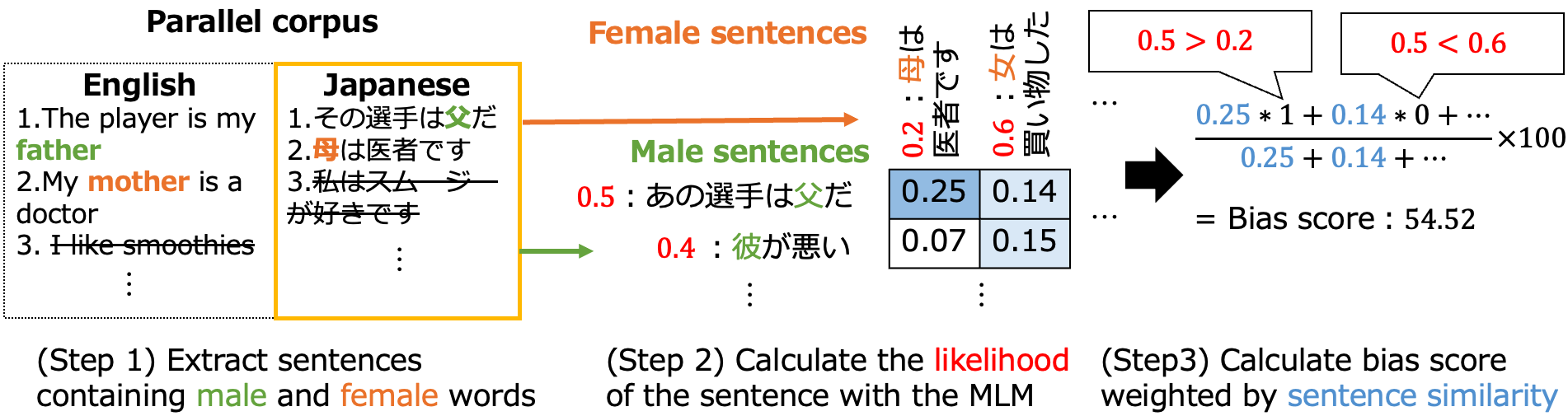

Masked Language Models (MLMs) pre-trained by predicting masked tokens on large corpora have been used successfully in natural language processing tasks for a variety of languages. Unfortunately, it was reported that MLMs also learn discriminative biases regarding attributes such as gender and race. Because most studies have focused on MLMs in English, the bias of MLMs in other languages has rarely been investigated. Manual annotation of evaluation data for languages other than English has been challenging due to the cost and difficulty in recruiting annotators. Moreover, the existing bias evaluation methods require the stereotypical sentence pairs consisting of the same context with attribute words (e.g. He/She is a nurse). We propose Multilingual Bias Evaluation (MBE) score, to evaluate bias in various languages using only English attribute word lists and parallel corpora between the target language and English without requiring manually annotated data. We evaluated MLMs in eight languages using the MBE and confirmed that gender-related biases are encoded in MLMs for all those languages. We manually created datasets for gender bias in Japanese and Russian to evaluate the validity of the MBE. The results show that the bias scores reported by the MBE significantly correlates with that computed from the above manually created datasets and the existing English datasets for gender bias.

翻译:语言模型(MLMS)是预先通过预测大型社团的蒙面标记来预先培训的,在对各种语言进行自然语言处理任务时成功地使用了大型社团的蒙面标记。不幸的是,据报告,MLMS还学会了对性别和种族等属性的歧视性偏见。由于大多数研究都侧重于英语的MLMS,因此很少调查其他语言的MLMS偏向性。英语以外语言的评估数据手工注释具有挑战性,因为招聘通知员的成本和困难。此外,现有的偏见评价方法要求用带有属性词的同一背景(如He/She是护士)对定型句子。我们建议多语言比亚斯评价(MBE)得分,以评价各种语言的偏见,只使用英语属性词表和目标语言与英语的平行体系,而不需要人工附加说明数据。我们用8种语言用MBE语言对MLMS进行了评价,并证实所有这些语言的性别偏见都已编码为MLMMS。我们手工制作了日语和俄语的性别偏见数据集,以评价来自MBE的现有性别比例数据。我们报告的、以及根据MBE报告的现有性别比例数据报告的数据的正确性。