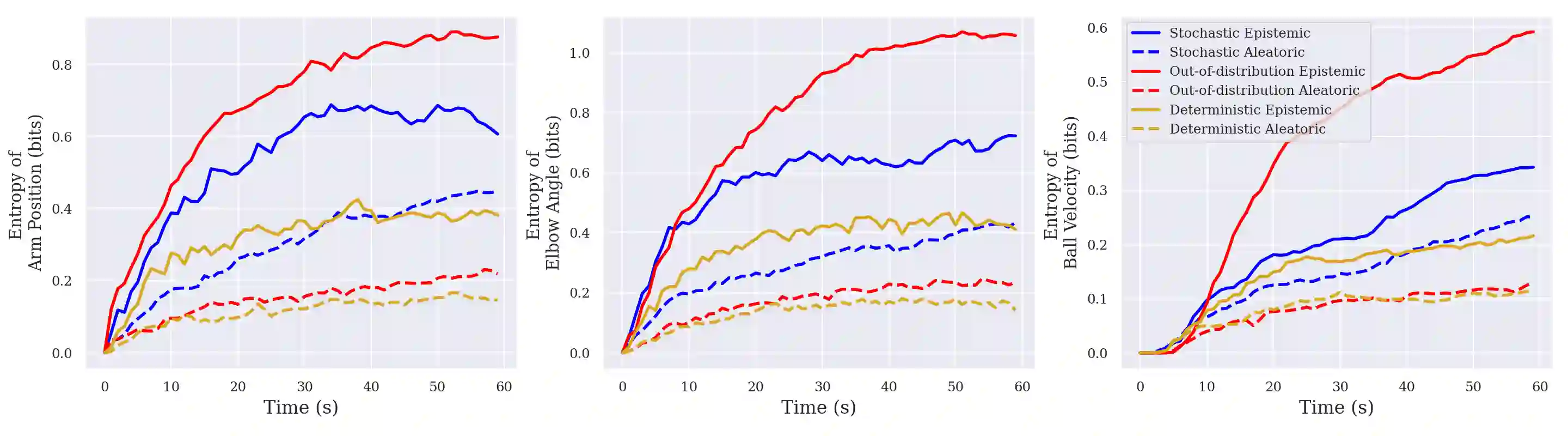

For safe and reliable deployment in the real world, autonomous agents must elicit appropriate levels of trust from human users. One method to build trust is to have agents assess and communicate their own competencies for performing given tasks. Competency depends on the uncertainties affecting the agent, making accurate uncertainty quantification vital for competency assessment. In this work, we show how ensembles of deep generative models can be used to quantify the agent's aleatoric and epistemic uncertainties when forecasting task outcomes as part of competency assessment.
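As a rough illustration of how an ensemble can separate the two kinds of uncertainty, the sketch below applies a variance-based decomposition (the law of total variance) to outcome samples drawn from each ensemble member. This is a common technique and not necessarily the one used in this work. The function name `decompose_uncertainty`, the scalar outcome, and the Gaussian stand-ins for trained generative models are all assumptions made for the example.

```python
import numpy as np


def decompose_uncertainty(samples):
    """Split forecast variance into aleatoric and epistemic parts.

    samples: array of shape (n_models, n_samples); row i holds scalar
    outcome forecasts sampled from ensemble member i (hypothetical setup).
    """
    samples = np.asarray(samples, dtype=float)
    member_means = samples.mean(axis=1)  # each member's expected outcome
    member_vars = samples.var(axis=1)    # each member's own spread
    aleatoric = member_vars.mean()       # average within-member variance
    epistemic = member_means.var()       # disagreement between members
    return aleatoric, epistemic, aleatoric + epistemic


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Stand-in for five trained generative models: each member is a Gaussian
    # sampler with its own mean (assumption, not a real deep generative model).
    member_locs = rng.normal(loc=10.0, scale=0.5, size=5)
    samples = np.stack([rng.normal(loc=m, scale=2.0, size=1000) for m in member_locs])
    aleatoric, epistemic, total = decompose_uncertainty(samples)
    print(f"aleatoric={aleatoric:.3f} epistemic={epistemic:.3f} total={total:.3f}")
```

When every member contributes the same number of samples, the two terms sum exactly to the variance of the pooled samples, which makes the split easy to sanity-check. High epistemic variance signals that the members disagree (for example, on tasks unlike the training data), while high aleatoric variance reflects randomness that more data would not remove.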
