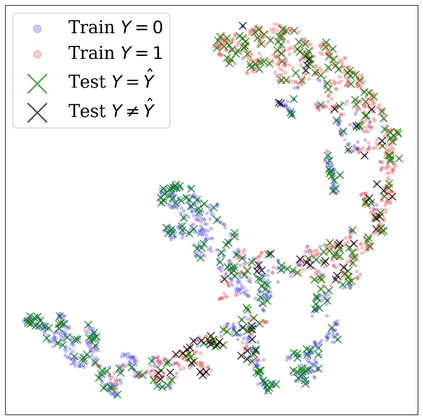

Graph Neural Networks (GNNs) have been shown to achieve competitive results on graph-related tasks, such as node and graph classification, link prediction, and node and graph clustering, in a variety of domains. Most GNNs use a message passing framework and are hence called Message Passing Neural Networks (MPNNs). Despite their promising results, MPNNs have been reported to suffer from over-smoothing, over-squashing and under-reaching. Graph rewiring and graph pooling have been proposed in the literature as solutions to these limitations. However, most state-of-the-art graph rewiring methods fail to preserve the global topology of the graph, are neither differentiable nor inductive, and require hyper-parameter tuning. In this paper, we propose DiffWire, a novel framework for graph rewiring in MPNNs that leverages the Lovász bound to be principled, fully differentiable and parameter-free. Our approach provides a unified theory for graph rewiring by proposing two new, complementary layers for MPNNs: CT-Layer, a layer that learns the commute times and uses them as a relevance function for edge re-weighting; and GAP-Layer, a layer that optimizes the spectral gap, depending on the nature of the network and the task at hand. We empirically validate the value of each of these layers separately on benchmark datasets for graph classification. DiffWire connects the learnability of commute times to related definitions of curvature, opening the door to more expressive MPNNs.
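The abstract names the two quantities the layers are built around but does not show how they are defined. As a point of reference, here is a minimal NumPy sketch. It assumes a small, connected, undirected graph given as a dense adjacency matrix and computes the exact, non-learned versions of both quantities. Commute times come from the pseudoinverse of the Laplacian, CT(u, v) = vol(G) (L⁺ᵤᵤ + L⁺ᵥᵥ − 2L⁺ᵤᵥ). The spectral gap is taken here as the second-smallest eigenvalue of the normalized Laplacian, which is an assumption. This is not DiffWire's method: CT-Layer learns commute times differentiably instead of computing an O(n³) pseudoinverse. The `ct_reweight` helper is hypothetical, and its element-wise re-weighting of existing edges is only an illustration of "commute times as a relevance function".

```python
import numpy as np


def commute_times(adj: np.ndarray) -> np.ndarray:
    """Exact commute times of a connected, undirected graph.

    CT(u, v) = vol(G) * (Lp[u, u] + Lp[v, v] - 2 * Lp[u, v]),
    where Lp is the Moore-Penrose pseudoinverse of L = D - A
    and vol(G) is the sum of all node degrees.
    """
    deg = adj.sum(axis=1)
    lap = np.diag(deg) - adj              # combinatorial Laplacian L = D - A
    lap_pinv = np.linalg.pinv(lap)        # pseudoinverse, since L is singular
    diag = np.diag(lap_pinv)
    return deg.sum() * (diag[:, None] + diag[None, :] - 2.0 * lap_pinv)


def ct_reweight(adj: np.ndarray) -> np.ndarray:
    """Hypothetical helper: keep existing edges, weight each by its commute time."""
    return adj * commute_times(adj)       # element-wise; non-edges stay 0


def spectral_gap(adj: np.ndarray) -> float:
    """Second-smallest eigenvalue of the normalized Laplacian I - D^-1/2 A D^-1/2."""
    deg = adj.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(deg)       # assumes no isolated nodes
    norm_lap = np.eye(len(adj)) - d_inv_sqrt[:, None] * adj * d_inv_sqrt[None, :]
    return float(np.linalg.eigvalsh(norm_lap)[1])  # eigvalsh sorts ascending


if __name__ == "__main__":
    # Path graph 0 - 1 - 2: vol(G) = 4 and the effective resistance
    # between the endpoints is 2, so their commute time is 4 * 2 = 8.
    path = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
    print(commute_times(path)[0, 2])  # ≈ 8.0
    print(spectral_gap(path))         # ≈ 1.0
```

Computing the pseudoinverse explicitly is only practical for small graphs. A learnable layer would instead approximate these quantities inside the network, which is the gap the abstract says DiffWire addresses.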
