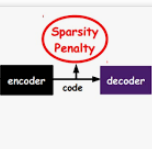

Constrained tensor and matrix factorization models make it possible to extract interpretable patterns from multiway data. Therefore, identifiability properties and efficient algorithms for constrained low-rank approximations are nowadays important research topics. This work deals with columns of factor matrices of a low-rank approximation being sparse in a known and possibly overcomplete basis, a model coined Dictionary-based Low-Rank Approximation (DLRA). While earlier contributions focused on finding factor columns inside a dictionary of candidate columns, i.e., one-sparse approximations, this work is the first to tackle DLRA with sparsity larger than one. I propose to focus on the sparse-coding subproblem, coined Mixed Sparse-Coding (MSC), that emerges when solving DLRA with an alternating optimization strategy. Several algorithms based on sparse-coding heuristics (greedy methods, convex relaxations) are provided to solve MSC. The performance of these heuristics is evaluated on simulated data. Then, I show how to adapt an efficient MSC solver based on the LASSO to compute Dictionary-based Matrix Factorization and Canonical Polyadic Decomposition in the context of hyperspectral image processing and chemometrics. These experiments suggest that DLRA extends the modeling capabilities of low-rank approximations, helps reduce estimation variance, and enhances the identifiability and interpretability of estimated factors.
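The abstract does not spell out the MSC solver. As a minimal sketch, assuming the MSC subproblem has the form of fitting data `Y` with `D @ X @ B.T`, where `D` is the known dictionary, `B` is the factor held fixed by the alternating scheme, and `X` holds sparse codes, the code below solves the convex LASSO relaxation `0.5 * ||Y - D X B^T||_F^2 + lam * ||X||_1` with a plain proximal-gradient (ISTA) loop. The function names `soft_threshold` and `msc_lasso_ista`, the `0.5` scaling, and the choice of ISTA are illustrative assumptions, not the author's implementation.

```python
import numpy as np


def soft_threshold(Z, tau):
    """Entrywise soft-thresholding: the proximal operator of tau * ||.||_1."""
    return np.sign(Z) * np.maximum(np.abs(Z) - tau, 0.0)


def msc_lasso_ista(Y, D, B, lam, n_iter=500):
    """Hypothetical sketch of an l1-relaxed Mixed Sparse-Coding solver.

    Minimizes 0.5 * ||Y - D @ X @ B.T||_F^2 + lam * ||X||_1 over X by ISTA.
    Shapes (assumed): Y (m, n), D (m, d) dictionary, B (n, r) fixed factor,
    X (d, r) codes, so the estimated factor D @ X is sparse in D.
    """
    # Lipschitz constant of the gradient map X -> D^T D X B^T B.
    L = np.linalg.norm(D, 2) ** 2 * np.linalg.norm(B, 2) ** 2
    DtD = D.T @ D
    BtB = B.T @ B
    DtYB = D.T @ Y @ B  # constant part of the gradient, computed once
    X = np.zeros((D.shape[1], B.shape[1]))
    for _ in range(n_iter):
        grad = DtD @ X @ BtB - DtYB  # gradient of the least-squares term
        X = soft_threshold(X - grad / L, lam / L)  # gradient step + prox
    return X
```

With an identity dictionary and identity `B`, the problem decouples entrywise and the sketch returns `soft_threshold(Y, lam)`; replacing the relaxation by an explicit per-column sparsity constraint would instead call for a greedy or hard-thresholding step, which this sketch does not cover.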
