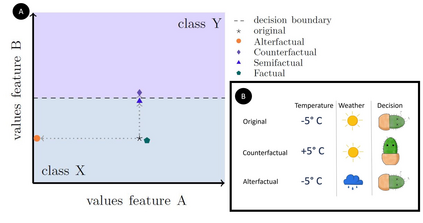

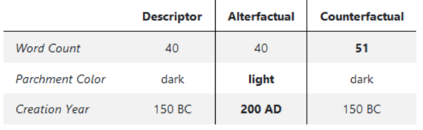

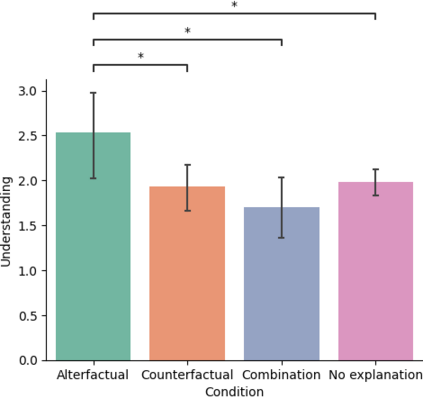

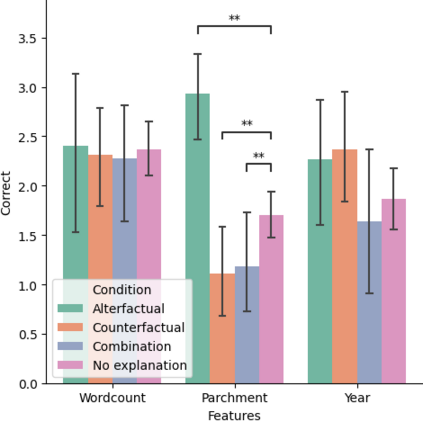

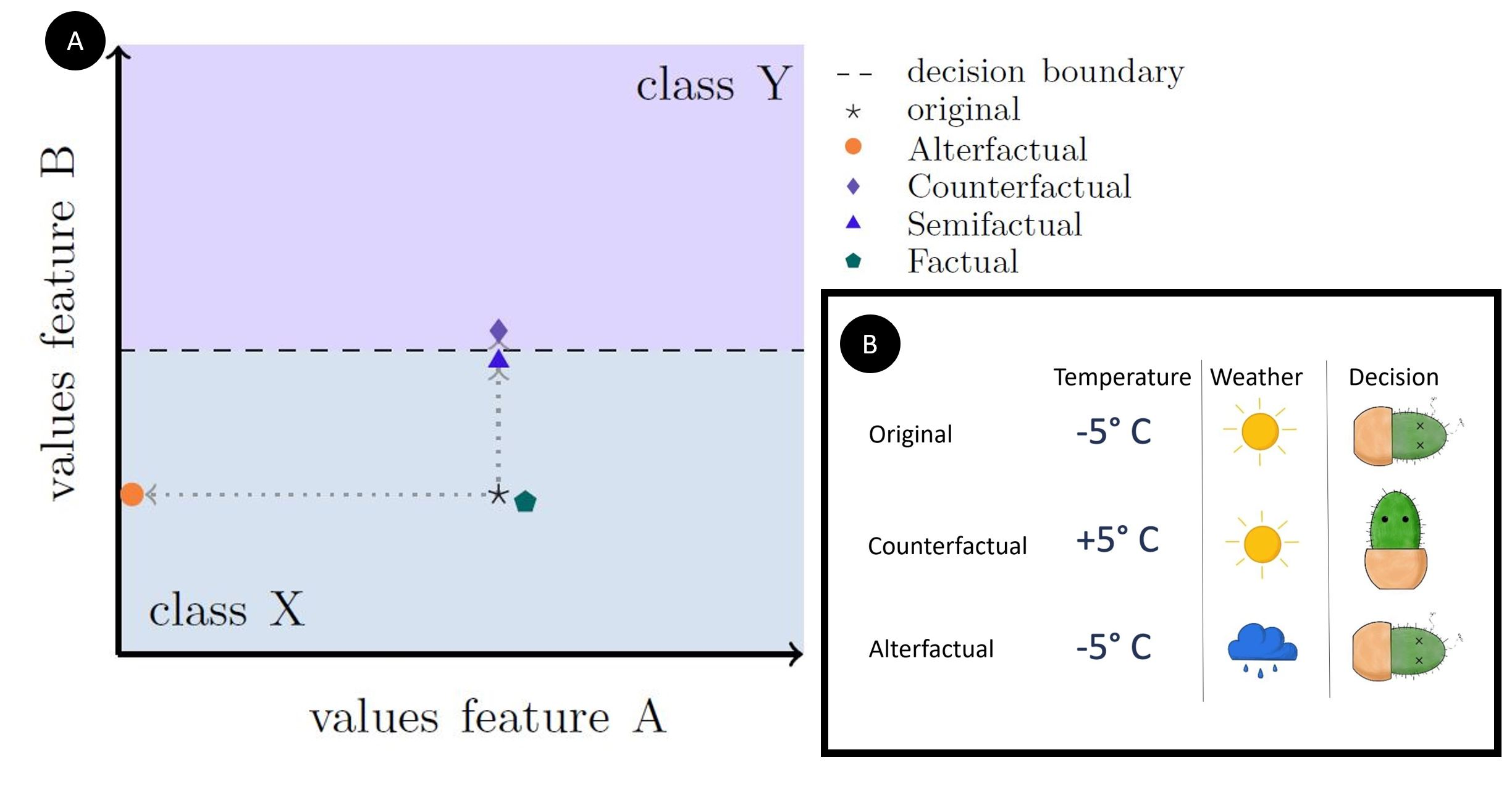

Explanation mechanisms from the field of Counterfactual Thinking are a widely used paradigm for Explainable Artificial Intelligence (XAI), as they follow a natural way of reasoning that humans are familiar with. However, all common approaches from this field are based on communicating information about features or characteristics that are especially important for an AI's decision. We argue that fully understanding a decision requires more than knowledge about relevant features: awareness of irrelevant information also contributes substantially to a user's mental model of an AI system. Therefore, we introduce a new way of explaining AI systems. Our approach, which we call Alterfactual Explanations, is based on showing an alternative reality in which irrelevant features of an AI's input are altered. By doing so, the user directly sees which characteristics of the input data can change arbitrarily without influencing the AI's decision. We evaluate our approach in an extensive user study, revealing that it can significantly improve the participants' understanding of an AI. We show that alterfactual explanations are suited to conveying an understanding of aspects of the AI's reasoning that differ from those conveyed by established counterfactual explanation methods.
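To make the contrast between the two explanation types concrete, here is a minimal toy sketch. It is not the authors' method; the abstract does not describe how alterfactuals are generated. It assumes a hypothetical linear classifier (`WEIGHTS`, `BIAS`) whose irrelevant features can simply be read off as zero weights, and features bounded in a hypothetical [0, 1] range. The alterfactual pushes every irrelevant feature as far as its range allows while the decision stays the same; the counterfactual makes a small change to the most influential relevant feature so that the decision flips. Real systems such as image classifiers would need some other way to identify and alter irrelevant features.

```python
from typing import List

# Hypothetical toy model: a linear classifier that ignores feature 2 (zero weight).
WEIGHTS = [1.5, -2.0, 0.0]
BIAS = 0.5


def score(x: List[float]) -> float:
    """Linear decision score of the toy model."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x: List[float]) -> int:
    """Class 1 if the score is positive, else class 0."""
    return 1 if score(x) > 0 else 0


def alterfactual(x: List[float], lo: float = 0.0, hi: float = 1.0) -> List[float]:
    """Alter only irrelevant (zero-weight) features, moving each to the bound of
    its assumed [lo, hi] range that is farthest from its current value."""
    out = list(x)
    for i, w in enumerate(WEIGHTS):
        if w == 0.0:
            out[i] = hi if abs(hi - x[i]) >= abs(lo - x[i]) else lo
    return out


def counterfactual(x: List[float], margin: float = 1e-3) -> List[float]:
    """Change only the feature with the largest |weight| just enough
    to push the score past zero, flipping the decision."""
    j = max(range(len(WEIGHTS)), key=lambda i: abs(WEIGHTS[i]))
    s = score(x)
    target = -margin if s > 0 else margin  # aim just across the boundary
    out = list(x)
    out[j] += (target - s) / WEIGHTS[j]
    return out


x = [0.2, 0.1, 0.3]
alt = alterfactual(x)    # feature 2 changes a lot, decision unchanged
cf = counterfactual(x)   # feature 1 changes a little, decision flips
print(predict(x), alt, predict(alt), cf, predict(cf))
```

Shown side by side, the alterfactual tells the user "feature 2 does not matter", while the counterfactual tells them "feature 1 is what drives the decision". This mirrors the abstract's claim that the two explanation types convey different aspects of the model's reasoning.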
