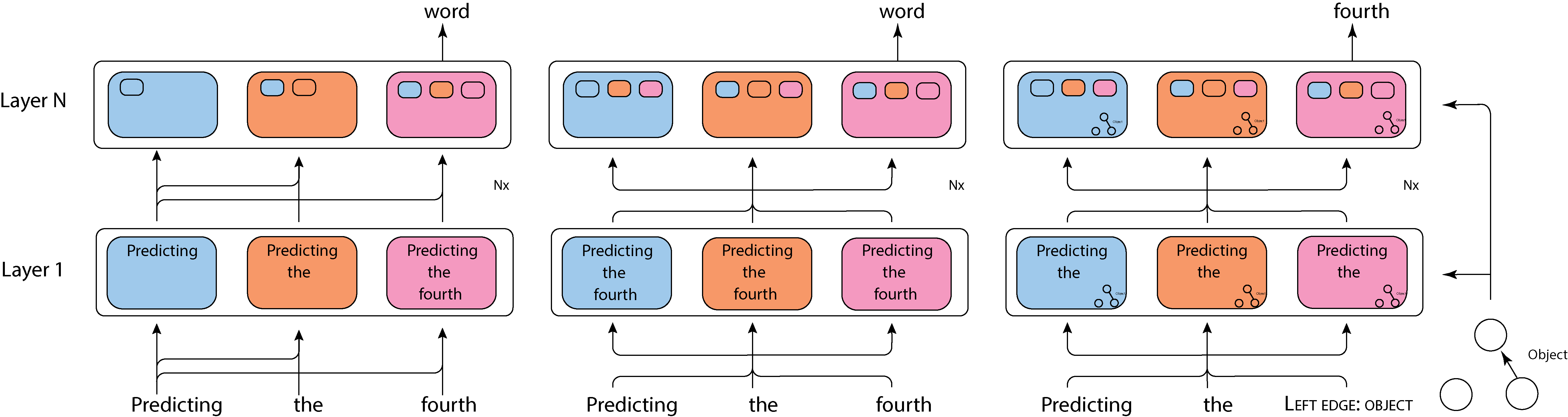

While a number of works have shown gains from incorporating source-side symbolic syntactic and semantic structure into neural machine translation (NMT), far fewer have addressed the decoding of such structure. We propose a general Transformer-based approach for tree and graph decoding based on generating a sequence of transitions, inspired by a similar RNN-based approach by Dyer (2016). Experiments using the proposed decoder with Universal Dependencies syntax on English-German, German-English and English-Russian show improved performance over the standard Transformer decoder, as well as over ablated versions of the model. All code implementing the presented models will be released upon acceptance.
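To make the idea of decoding a tree as a sequence of transitions concrete, here is a minimal sketch. It assumes a simple arc-standard-style inventory (generate a token, `LEFT-ARC`, `RIGHT-ARC`), which is an illustrative choice and not necessarily the transition system used by the proposed decoder. The function name `decode_transitions` and the example sentence are hypothetical.

```python
from typing import List, Tuple

Arc = Tuple[int, int]  # (head index, dependent index)


def decode_transitions(actions: List[str]) -> Tuple[List[str], List[Arc]]:
    """Replay a decoder's action sequence into tokens and dependency arcs.

    Assumed (hypothetical) action inventory:
      - "LEFT-ARC":  the top of the stack becomes head of the item below it
      - "RIGHT-ARC": the item below the top becomes head of the top
      - any other string: generate that target token and push it
    """
    tokens: List[str] = []
    stack: List[int] = []  # indices into `tokens`
    arcs: List[Arc] = []

    for action in actions:
        if action in ("LEFT-ARC", "RIGHT-ARC"):
            if len(stack) < 2:
                raise ValueError(f"{action} needs two items on the stack")
            top = stack.pop()
            below = stack.pop()
            if action == "LEFT-ARC":
                arcs.append((top, below))   # top governs below
                stack.append(top)           # head stays on the stack
            else:
                arcs.append((below, top))   # below governs top
                stack.append(below)         # head stays on the stack
        else:
            tokens.append(action)
            stack.append(len(tokens) - 1)

    return tokens, arcs


# Usage: a toy target sentence interleaved with arc transitions.
tokens, arcs = decode_transitions(
    ["She", "reads", "LEFT-ARC", "books", "RIGHT-ARC"]
)
print(tokens)  # ['She', 'reads', 'books']
print(arcs)    # [(1, 0), (1, 2)]
```

In this sketch the output vocabulary mixes target words with structural actions, so a standard autoregressive decoder can emit both in one sequence, and the tree is recovered deterministically by replaying the actions.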
