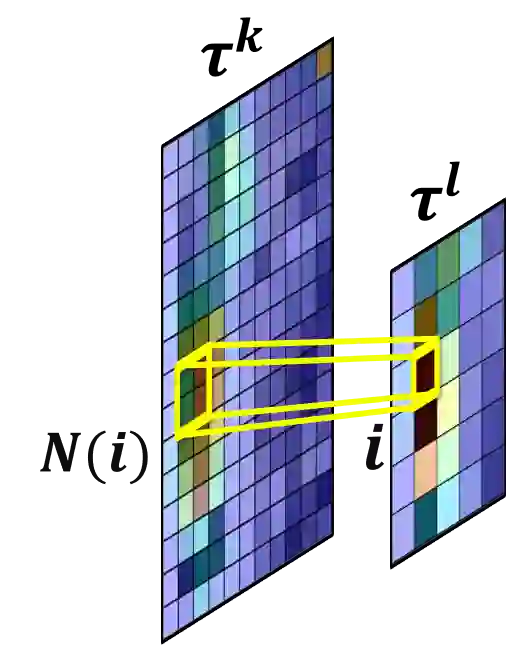

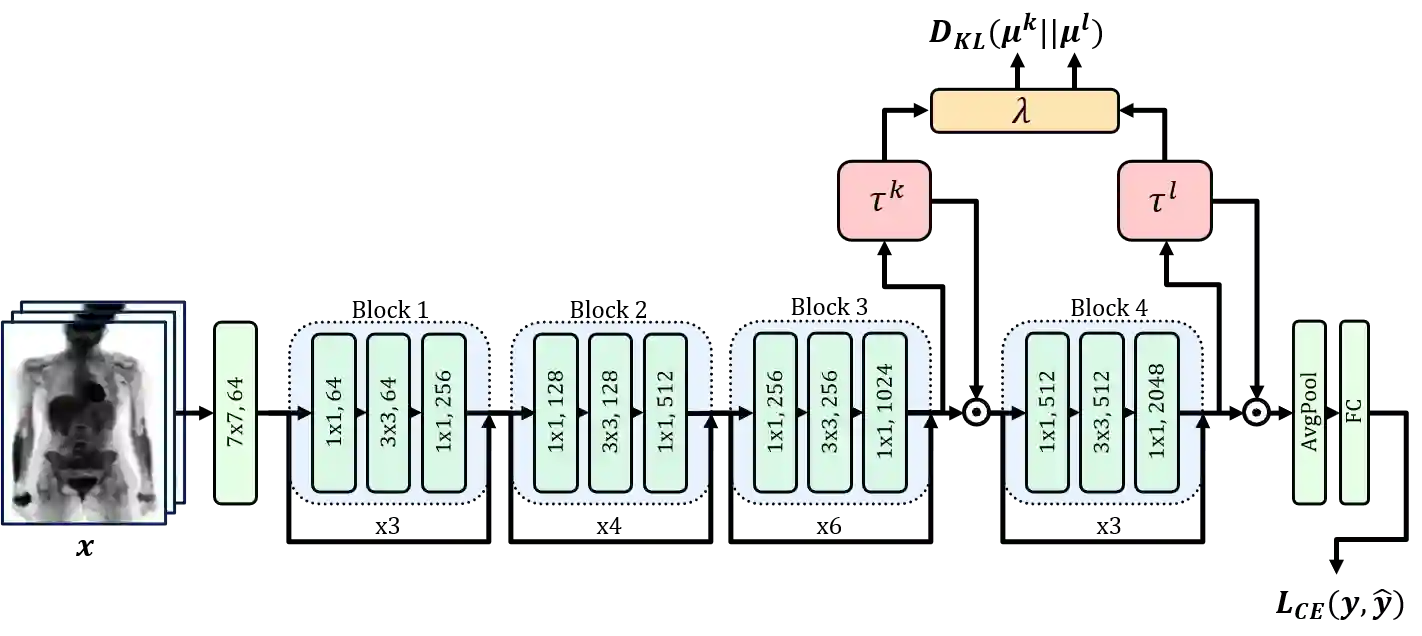

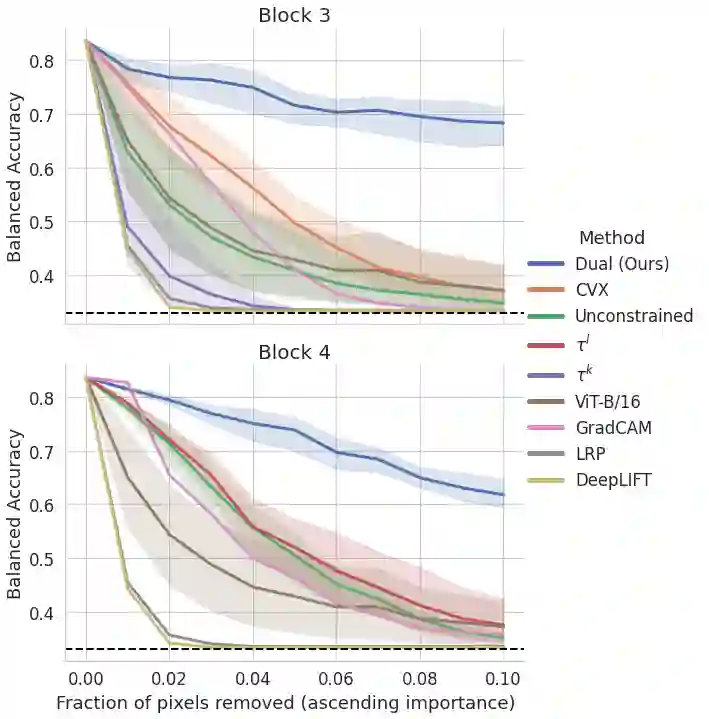

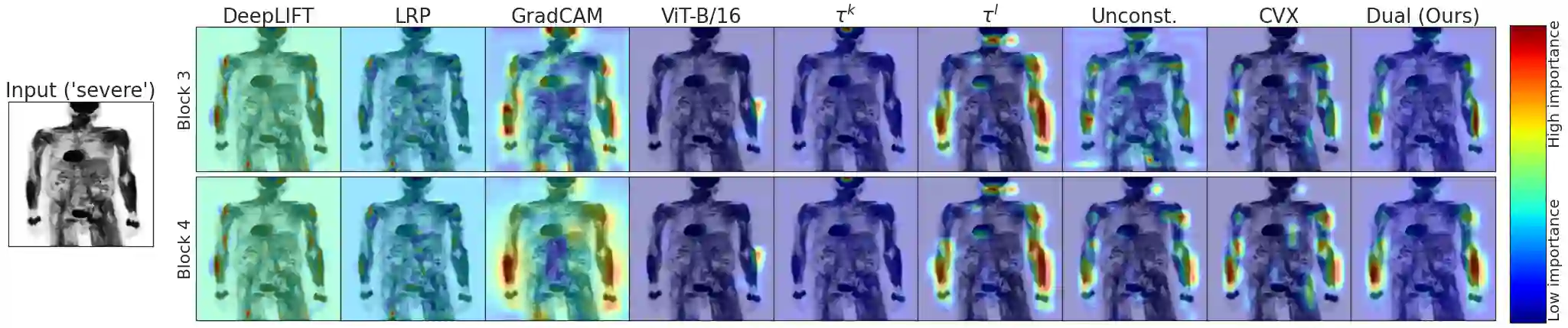

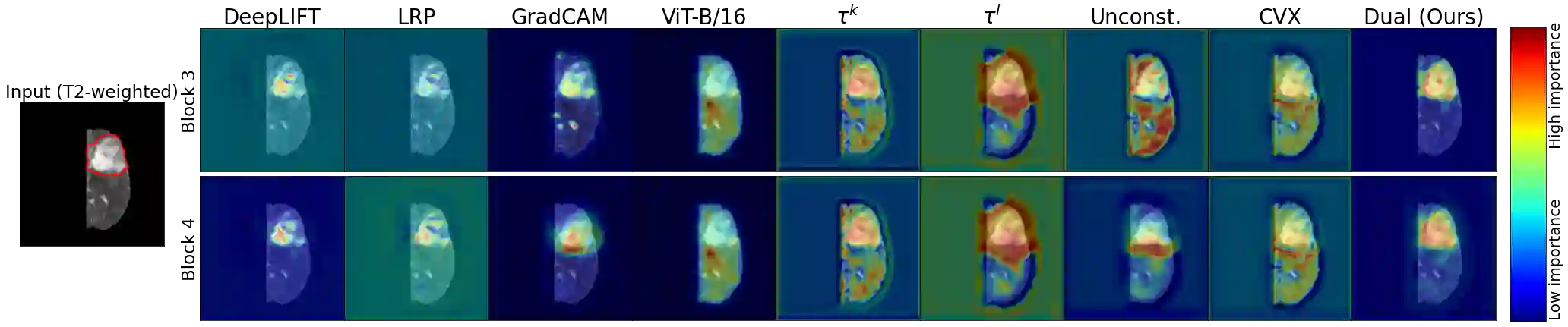

A key concern in integrating machine learning models into medicine is the ability to interpret their reasoning. Popular explainability methods have demonstrated satisfactory results in natural image recognition, yet in medical image analysis many of these approaches provide partial and noisy explanations. Recently, attention mechanisms have shown compelling results both in predictive performance and in interpretability. A fundamental trait of attention is that it leverages the salient parts of the input that contribute to the model's prediction. To this end, our work focuses on the explanatory value of attention weight distributions. We propose a multi-layer attention mechanism that enforces consistent interpretations between attended convolutional layers using convex optimization. We apply duality to decompose the consistency constraints between the layers by reparameterizing their attention probability distributions. We further suggest learning the dual witness by optimizing with respect to our objective; as a result, our implementation relies on standard back-propagation and is therefore highly efficient. While preserving predictive performance, our proposed method leverages weakly annotated medical imaging data and provides complete and faithful explanations of the model's prediction.
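To make the idea of consistency between attended layers more concrete, the following is a minimal sketch and not the formulation proposed in this work. It assumes two convolutional layers that each produce a spatial score map, turns each map into an attention probability distribution with a softmax, and measures disagreement with a plain KL-divergence penalty after pooling the finer map to the coarser resolution. The dual decomposition and the learned dual witness described above are not reproduced here; all function names (`spatial_attention`, `sum_pool`, `consistency_penalty`) are hypothetical.

```python
import math


def softmax(logits):
    """Turn a flat list of scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def spatial_attention(score_map):
    """Softmax over all spatial positions of a 2-D score map."""
    h, w = len(score_map), len(score_map[0])
    flat = softmax([s for row in score_map for s in row])
    return [flat[r * w:(r + 1) * w] for r in range(h)]


def sum_pool(attn, factor):
    """Sum attention mass over factor x factor blocks; total mass stays 1."""
    h, w = len(attn), len(attn[0])
    return [
        [
            sum(attn[r + i][c + j] for i in range(factor) for j in range(factor))
            for c in range(0, w, factor)
        ]
        for r in range(0, h, factor)
    ]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) between two attention maps of the same shape."""
    return sum(
        pi * math.log((pi + eps) / (qi + eps))
        for prow, qrow in zip(p, q)
        for pi, qi in zip(prow, qrow)
    )


def consistency_penalty(fine_scores, coarse_scores):
    """Illustrative penalty (an assumption, not the paper's objective):
    small when the two layers attend to the same image region."""
    fine = spatial_attention(fine_scores)
    coarse = spatial_attention(coarse_scores)
    factor = len(fine) // len(coarse)  # assumes square maps, integer ratio
    return kl_divergence(sum_pool(fine, factor), coarse)


# Toy score maps: the fine layer attends to the top-left region.
fine_scores = [[4, 4, 0, 0], [4, 4, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
coarse_agree = [[3, 0], [0, 0]]     # coarse layer also attends top-left
coarse_disagree = [[0, 0], [0, 3]]  # coarse layer attends bottom-right

penalty_agree = consistency_penalty(fine_scores, coarse_agree)
penalty_disagree = consistency_penalty(fine_scores, coarse_disagree)
```

In this toy setup the penalty is much smaller when both layers highlight the same region than when they disagree. In a trained model, a term of this kind would typically be added to the classification loss so that it can be minimized with ordinary back-propagation; how the consistency constraints are actually decomposed and optimized is specific to the proposed method.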
