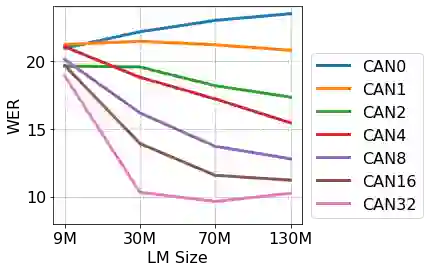

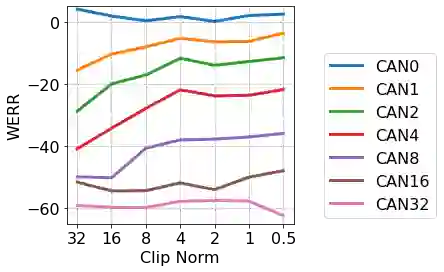

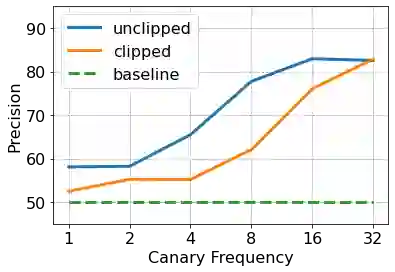

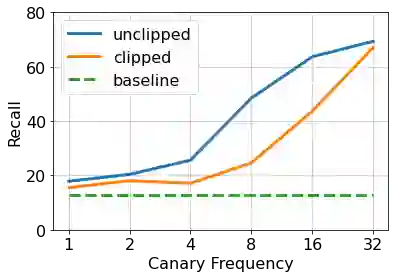

End-to-end (E2E) models are often being accompanied by language models (LMs) via shallow fusion for boosting their overall quality as well as recognition of rare words. At the same time, several prior works show that LMs are susceptible to unintentionally memorizing rare or unique sequences in the training data. In this work, we design a framework for detecting memorization of random textual sequences (which we call canaries) in the LM training data when one has only black-box (query) access to LM-fused speech recognizer, as opposed to direct access to the LM. On a production-grade Conformer RNN-T E2E model fused with a Transformer LM, we show that detecting memorization of singly-occurring canaries from the LM training data of 300M examples is possible. Motivated to protect privacy, we also show that such memorization gets significantly reduced by per-example gradient-clipped LM training without compromising overall quality.

翻译:终端到终端( E2E) 模型往往伴有语言模型( LMs), 通过浅质融合提高整体质量和识别稀有字词。 同时, 前几部工程显示, LMs很容易无意地在培训数据中背诵稀有或独特的序列。 在这项工作中,我们设计了一个框架,用于在LM培训数据中检测随机文本序列(我们打听金丝雀)的记忆化(我们打听金丝雀)时,当一个人只有黑盒(query)才能使用LM 粉碎语音识别器时,而不是直接使用LM 。 在与变异器LM 结合的生产- 级ConnN-T E2E 模型上,我们显示,从LM 培训数据300M 实例中检测单发罐子的记忆化是可能的。 我们为了保护隐私,还显示,这种记忆化通过每次Example Eliced LM 培训而大大降低整体质量。