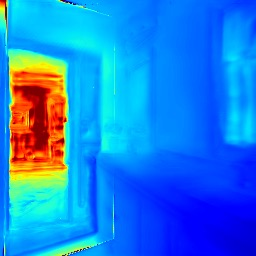

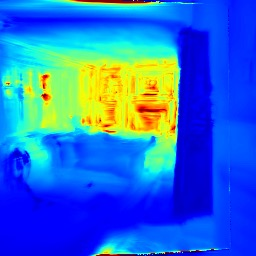

We introduce a method for novel view synthesis given only a single wide-baseline stereo image pair. In this challenging regime, 3D scene points are often observed in only one of the two views, so scene geometry and appearance must be reconstructed from learned priors. We find that existing approaches to novel view synthesis from sparse observations fail because they recover incorrect 3D geometry and because the high cost of differentiable rendering precludes scaling them to large-scale training. We take a step towards resolving these shortcomings by formulating a multi-view transformer encoder, an efficient image-space epipolar line sampling scheme that assembles image features for a target ray, and a lightweight cross-attention-based renderer. Our contributions enable training of our method on a large-scale real-world dataset of indoor and outdoor scenes. We demonstrate that our method learns powerful multi-view geometry priors while reducing rendering time. We conduct extensive comparisons on held-out test scenes across two real-world datasets, significantly outperforming prior work on novel view synthesis from sparse image observations and achieving multi-view-consistent novel view synthesis.

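To make the idea of image-space epipolar line sampling and cross-attention-based aggregation more concrete, here is a minimal NumPy sketch. It is not the authors' implementation. It assumes a pinhole context camera with intrinsics `K` and a world-to-camera pose `(R, t)`, and it assumes the target ray is clipped to a depth range `[near, far]` that lies entirely in front of the context camera. The function names `project`, `sample_epipolar_line`, and `attend` are hypothetical, and `attend` is plain single-head softmax attention without the learned projections a real renderer would use.

```python
import numpy as np


def project(K, R, t, X):
    """Project world points X of shape (N, 3) to pixel coordinates (N, 2).

    Assumes a pinhole camera where X_cam = R @ X_world + t and all points
    have positive depth in the camera frame.
    """
    X_cam = X @ R.T + t              # world -> camera coordinates
    uvw = X_cam @ K.T                # apply intrinsics
    return uvw[:, :2] / uvw[:, 2:3]  # perspective divide


def sample_epipolar_line(K, R, t, ray_o, ray_d, near, far, n_samples):
    """Sample pixels uniformly in image space along a target ray's epipolar line.

    The near and far points of the target ray are projected into the context
    image, and samples are spaced evenly along the 2D segment between them
    (rather than evenly in depth along the 3D ray).
    """
    depths = np.array([[near], [far]])               # (2, 1)
    endpoints = ray_o + depths * ray_d               # (2, 3) points on the ray
    uv_near, uv_far = project(K, R, t, endpoints)    # two (2,) pixel positions
    w = np.linspace(0.0, 1.0, n_samples)[:, None]    # (n_samples, 1)
    return (1.0 - w) * uv_near + w * uv_far          # (n_samples, 2)


def attend(query, keys, values):
    """Single-head softmax cross-attention of one query over sampled features.

    query: (d,), keys: (n, d), values: (n, c). Returns a (c,) vector.
    """
    scores = keys @ query / np.sqrt(query.shape[0])
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    return weights @ values
```

In a full pipeline, the sampled pixel positions would be used to look up encoder features in the context image (for example by bilinear interpolation), and those features would serve as the keys and values for `attend`. Sampling in image space means the number of samples is tied to the length of the epipolar segment in pixels rather than to a 3D depth range.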