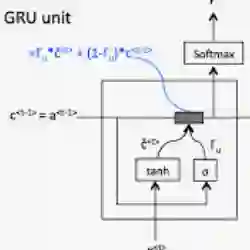

The scalability of recurrent neural networks (RNNs) is hindered by the sequential dependence of each time step's computation on the previous time step's output. Therefore, one way to speed up and scale RNNs is to reduce the computation required at each time step, independently of model size and task. In this paper, we propose a model that reformulates Gated Recurrent Units (GRUs) as an event-based, activity-sparse model, which we call the Event-based GRU (EGRU). In this model, units compute updates only on receipt of input events from other units (event-based). When combined with having only a small fraction of the units active at any time (activity-sparse), this model has the potential to be vastly more compute-efficient than current RNNs. Notably, the activity sparsity of our model also translates into sparse parameter updates during gradient descent, extending this compute efficiency to the training phase. We show that the EGRU achieves performance competitive with state-of-the-art recurrent network models on real-world tasks, including language modeling, while naturally maintaining high activity sparsity during both inference and training. This sets the stage for the next generation of recurrent networks that are scalable and better suited to novel neuromorphic hardware.
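The two sources of savings named above, event-based recurrent updates and sparse parameter updates, can be illustrated with a small sketch. This is a hypothetical illustration, not the authors' EGRU. It assumes a GRU-style update gate `u` and candidate `z` (no reset gate), a single hypothetical threshold `THETA` above which a unit emits its internal state as an output event, and a reset of that state to zero after an event. The names `egru_like_step`, `sparse_matvec`, `dense_matvec`, and `weight_grad` are illustrative. The property it demonstrates does not depend on those choices: the recurrent input is computed only from the columns of units that fired, and the recurrent-weight gradient, δ yᵀ, is exactly zero in the columns of silent units.

```python
import math

THETA = 0.5  # hypothetical firing threshold shared by all units (assumption)


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def sparse_matvec(R, events, n_out):
    """Return R @ y, visiting only columns j of units that emitted an event.

    `events` maps unit index j -> output y_j; every other y_j is zero.
    """
    out = [0.0] * n_out
    for j, y_j in events.items():
        for i in range(n_out):
            out[i] += R[i][j] * y_j
    return out


def dense_matvec(W, x):
    """Return W @ x for a small dense input vector x."""
    return [sum(w * xk for w, xk in zip(row, x)) for row in W]


def egru_like_step(c_prev, events_prev, x, W_u, R_u, W_z, R_z):
    """One step of a simplified event-based gated unit (hypothetical sketch).

    Uses a GRU-style update gate u and candidate z (no reset gate).
    A unit emits its internal state as an event when it exceeds THETA,
    and its state is then reset to zero (assumed reset rule).
    """
    n = len(c_prev)
    # Recurrent input comes only from units that fired on the previous step.
    pre_u = [a + b for a, b in zip(dense_matvec(W_u, x), sparse_matvec(R_u, events_prev, n))]
    pre_z = [a + b for a, b in zip(dense_matvec(W_z, x), sparse_matvec(R_z, events_prev, n))]
    c, events = [], {}
    for i in range(n):
        u = sigmoid(pre_u[i])
        z = math.tanh(pre_z[i])
        c_i = u * z + (1.0 - u) * c_prev[i]
        if c_i > THETA:
            events[i] = c_i  # the output event carries the state value
            c_i = 0.0        # assumed reset after emitting
        c.append(c_i)
    return c, events


def weight_grad(delta, events_prev, n_in):
    """Gradient of the loss w.r.t. a recurrent matrix R, given delta = dL/d(R @ y).

    dL/dR = delta y^T, so columns of units that did not fire are exactly zero
    and never need to be computed or applied.
    """
    grad = [[0.0] * n_in for _ in delta]
    for j, y_j in events_prev.items():
        for i, d in enumerate(delta):
            grad[i][j] = d * y_j
    return grad
```

Because only `len(events)` columns are visited, the cost of both the recurrent product and the weight-gradient outer product in this sketch scales with the number of active units rather than with the layer width.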
