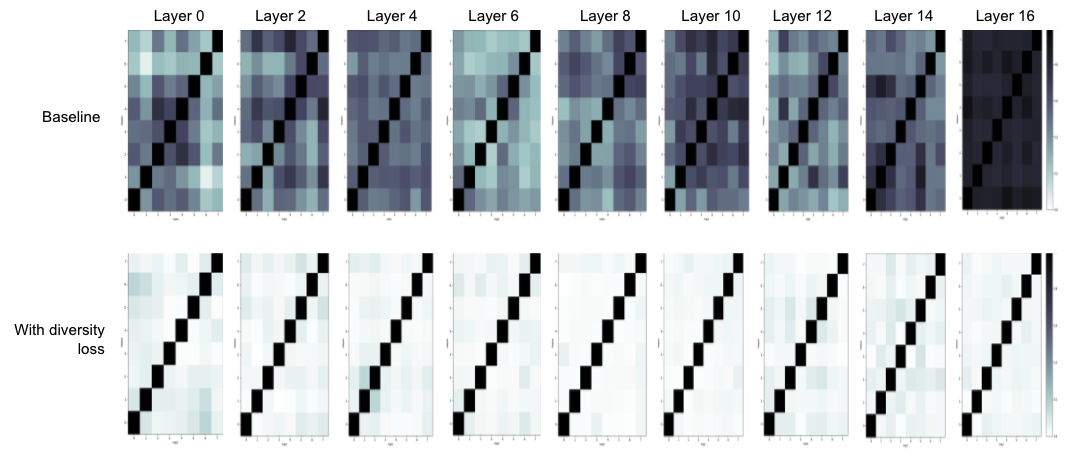

Attention layers are an integral part of modern end-to-end automatic speech recognition systems, for instance as part of the Transformer or Conformer architectures. Attention is typically multi-headed: each head has an independent set of learned parameters and operates on the same input feature sequence, and the output of multi-headed attention is a fusion of the outputs of the individual heads. We empirically analyze the diversity between the representations produced by different attention heads and show that the heads become highly correlated over the course of training. We investigate several approaches to increasing attention head diversity, including using a different attention mechanism for each head and adding auxiliary training loss functions that promote head diversity. We show that the diversity-promoting auxiliary losses are the more effective of these approaches, yielding relative WER improvements of up to 6% on the LibriSpeech corpus. Finally, we draw a connection between the diversity of attention heads and the similarity of the gradients of the head parameters.
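To make the idea of a "diversity-promoting auxiliary loss" concrete, the sketch below penalizes the mean pairwise cosine similarity between per-head output representations and adds it to the main ASR loss. This is an illustrative assumption, not the loss used in the paper: the function names, the flattening of each head's output into one vector, and the weight `lambda_div` are all hypothetical. In a real model this term would be computed inside an autodiff framework so that its gradient reaches the head parameters. Because different heads need not share an aligned coordinate system, a rotation-invariant measure such as canonical correlation analysis could replace raw cosine similarity.

```python
import math
from itertools import combinations
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two flattened head representations."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero head as uncorrelated with the others
    return dot / (norm_a * norm_b)


def mean_pairwise_similarity(head_outputs: List[Sequence[float]]) -> float:
    """Average cosine similarity over all unordered pairs of heads.

    Hypothetical diversity measure: 1.0 means every pair of heads points in
    the same direction, 0.0 means the heads are mutually orthogonal.
    """
    pairs = list(combinations(head_outputs, 2))
    if not pairs:
        return 0.0  # a single head has no pairs to compare
    return sum(cosine_similarity(a, b) for a, b in pairs) / len(pairs)


def total_loss(asr_loss: float, head_outputs: List[Sequence[float]],
               lambda_div: float = 0.1) -> float:
    """Main ASR loss plus a weighted penalty on head similarity (assumed form)."""
    return asr_loss + lambda_div * mean_pairwise_similarity(head_outputs)


if __name__ == "__main__":
    identical_heads = [[1.0, 0.0], [1.0, 0.0]]
    orthogonal_heads = [[1.0, 0.0], [0.0, 1.0]]
    print(total_loss(2.0, identical_heads, lambda_div=0.5))   # 2.5
    print(total_loss(2.0, orthogonal_heads, lambda_div=0.5))  # 2.0
```

Identical heads receive the largest penalty and orthogonal heads receive none, so minimizing the combined loss pushes the heads apart while the ASR loss keeps them useful; `lambda_div` trades off the two objectives.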
