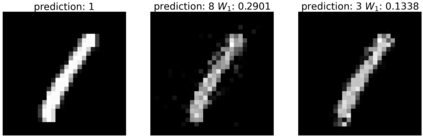

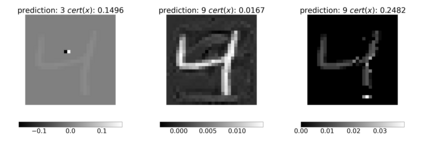

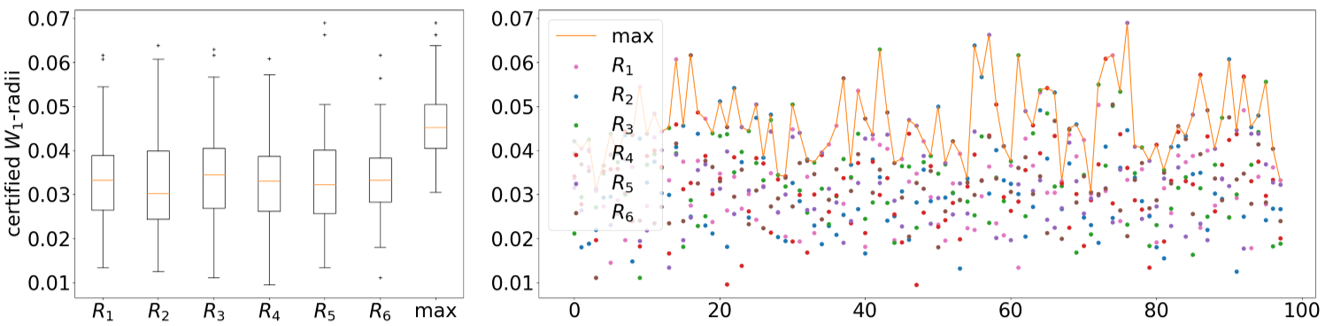

Machine learning image classifiers are vulnerable to adversarial perturbations and corruptions: adding imperceptible noise to an image can cause the model to misclassify it severely. Measuring the size of this noise with $L_p$-norms fails to capture human perception of similarity, which is why optimal-transport-based distances such as the Wasserstein metric are increasingly used in adversarial robustness research. The robustness of a classifier under the Wasserstein metric can be verified either by proving the absence of adversarial examples (certification) or by demonstrating their presence (attack). In this work, we present a framework, building on the work of Levine and Feizi, that transfers existing certification methods for convex polytopes or $L_1$-balls to the Wasserstein threat model. Depending on whether convex polytopes or $L_1$-balls are used, the resulting certification is complete or incomplete. Additionally, we present a new Wasserstein adversarial attack based on projected gradient descent, which has a significantly lower computational cost than existing attacks.
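To make the link between the Wasserstein threat model and $L_1$-balls more concrete, here is a minimal sketch written in the spirit of Levine and Feizi's local-flow view. It is not the attack or framework proposed in this work.

The sketch rests on several assumptions:

- The image is an H×W grid of pixel mass.
- Mass may only move between 4-neighbouring pixels, each move having unit cost.
- A perturbation is a flow vector with one entry per grid edge.

For two non-negative images of equal total mass, the 1-Wasserstein distance with the grid (Manhattan) ground metric is at most the $L_1$ norm of any flow that carries one image to the other. An $L_1$-ball in flow space therefore stays inside a Wasserstein ball. On top of that, the sketch runs a generic projected gradient ascent in flow space using the standard sort-based Euclidean projection onto the $L_1$ ball.

All function names (`grid_edges`, `apply_flow`, `project_l1`, `pgd_flow_attack`) and the linear score standing in for a classifier are hypothetical. The sketch also does not enforce non-negativity or a $[0, 1]$ box constraint on the perturbed pixels.

```python
import math


def grid_edges(h, w):
    """Edges between 4-neighbouring pixels of an h x w grid, as (source, target) pairs."""
    edges = []
    for i in range(h):
        for j in range(w):
            if j + 1 < w:
                edges.append(((i, j), (i, j + 1)))  # horizontal edge
            if i + 1 < h:
                edges.append(((i, j), (i + 1, j)))  # vertical edge
    return edges


def apply_flow(image, edges, flow):
    """Move flow[k] units of mass from edges[k][0] to edges[k][1] (negative = reverse).

    Total mass is preserved; non-negativity of pixels is NOT enforced (assumption).
    """
    out = [row[:] for row in image]
    for ((si, sj), (ti, tj)), f in zip(edges, flow):
        out[si][sj] -= f
        out[ti][tj] += f
    return out


def project_l1(v, eps):
    """Euclidean projection of v onto the L1 ball of radius eps (sort-based method)."""
    if sum(abs(x) for x in v) <= eps:
        return list(v)
    u = sorted((abs(x) for x in v), reverse=True)
    cumsum, theta = 0.0, 0.0
    for k, uk in enumerate(u, start=1):
        cumsum += uk
        t = (cumsum - eps) / k
        if uk > t:  # condition holds exactly for k = 1..rho, so theta ends at rho
            theta = t
    # Soft-threshold every coordinate by theta, keeping its sign.
    return [math.copysign(max(abs(x) - theta, 0.0), x) for x in v]


def pgd_flow_attack(image, weights, eps, step, iters):
    """Hypothetical sketch: maximize the linear score sum(weights * x') over flows
    with ||flow||_1 <= eps, via gradient ascent plus L1-ball projection."""
    edges = grid_edges(len(image), len(image[0]))
    # For a linear score, d score / d flow[k] = weight(target) - weight(source);
    # a real network would supply this gradient via automatic differentiation.
    grad = [weights[ti][tj] - weights[si][sj] for (si, sj), (ti, tj) in edges]
    flow = [0.0] * len(edges)
    for _ in range(iters):
        flow = project_l1([f + step * g for f, g in zip(flow, grad)], eps)
    return apply_flow(image, edges, flow), flow


if __name__ == "__main__":
    img = [[0.5, 0.5], [0.5, 0.5]]
    w = [[0.0, 1.0], [0.0, 0.0]]  # the "classifier" rewards mass at pixel (0, 1)
    adv, flow = pgd_flow_attack(img, w, eps=0.5, step=1.0, iters=5)
    print(adv)   # [[0.25, 1.0], [0.5, 0.25]]
    print(flow)  # [0.25, 0.0, -0.25, 0.0]
```

In this toy run the attack spends its whole $L_1$ budget moving mass toward pixel (0, 1), the only pixel with positive weight, while the total mass stays at 2.0. Because the resulting flow has $L_1$ norm 0.5 and every pixel stays non-negative, the perturbed image lies within Wasserstein distance 0.5 of the original under the assumed grid metric. Working in flow space is what lets a plain $L_1$ projection stand in for the much costlier exact projection onto a Wasserstein ball.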
