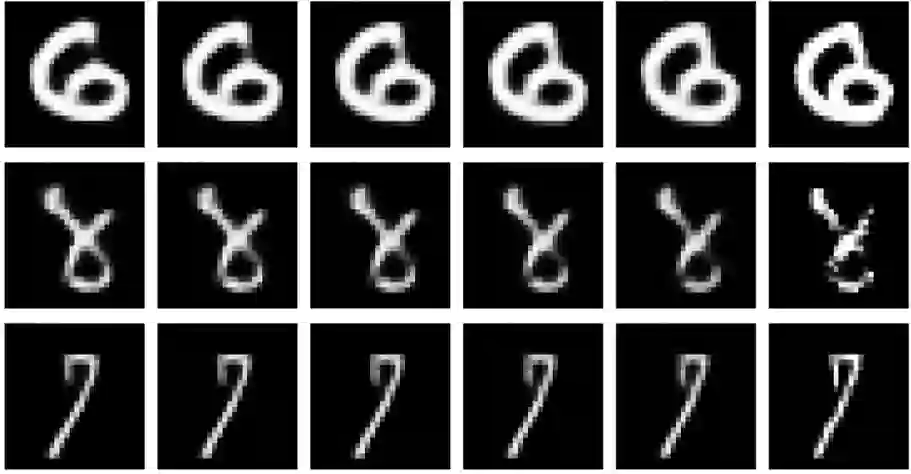

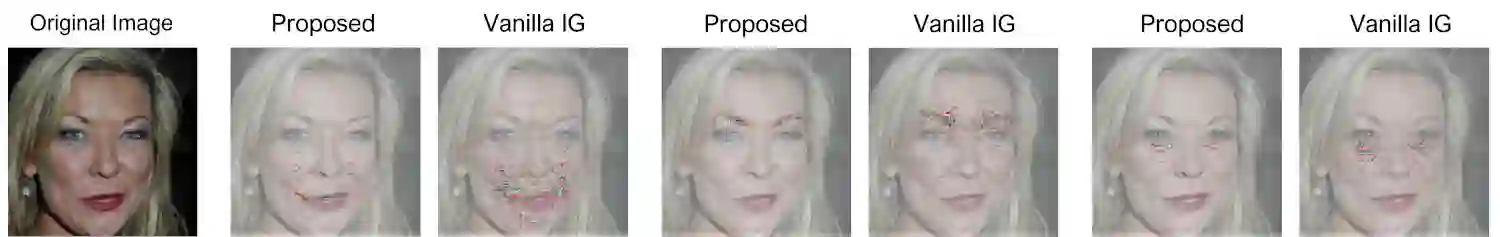

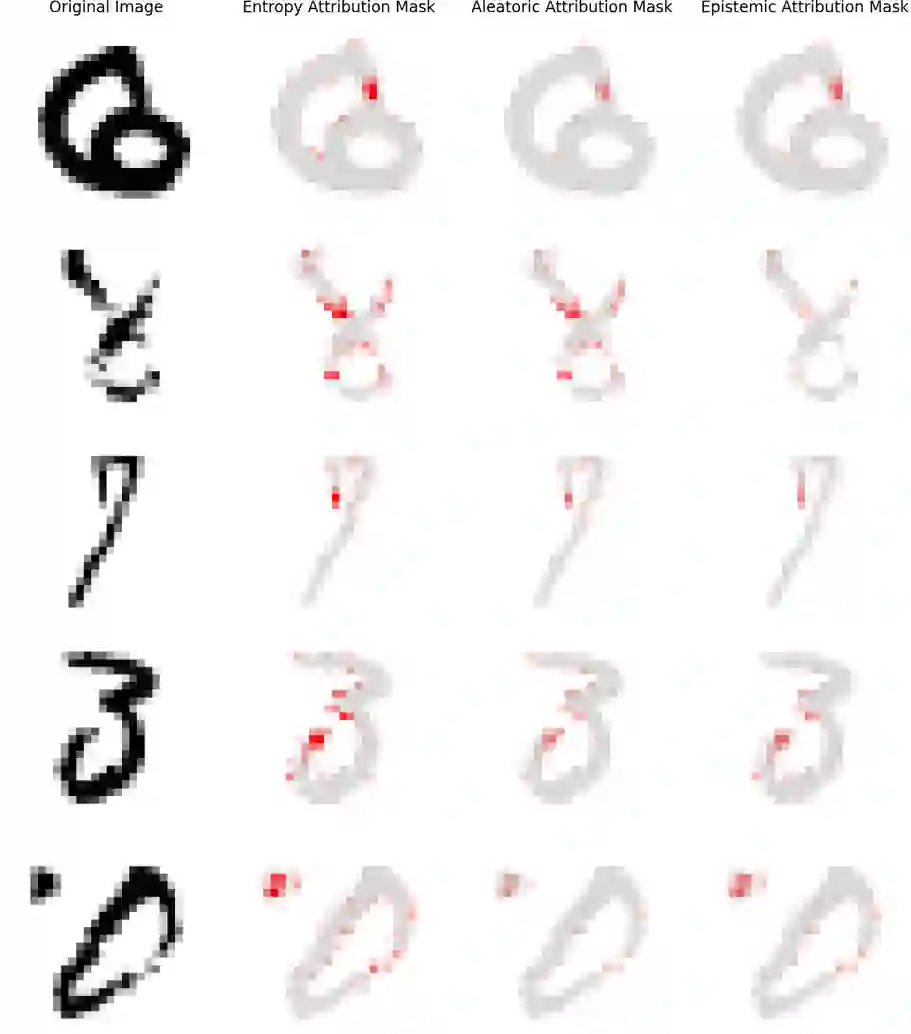

Enabling the interpretation of model uncertainties is of key importance in Bayesian machine learning applications. Often, this requires meaningfully attributing predictive uncertainties to source features in an image, text or categorical array. However, popular attribution methods are designed specifically for classification and regression scores. To explain uncertainties, state-of-the-art alternatives commonly procure counterfactual feature vectors and then make direct comparisons. In this paper, we leverage path integrals to attribute uncertainties in Bayesian differentiable models. We present a novel algorithm that relies on in-distribution curves connecting a feature vector to a counterfactual counterpart, and that retains desirable properties of interpretability methods. We validate our approach on benchmark image data sets of varying resolution, and show that it significantly simplifies interpretability compared with existing alternatives.
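The abstract does not spell out the algorithm, so the following is only a minimal sketch of the general idea of attributing an uncertainty score with a path integral. It rests on several assumptions that are not from the paper: the Bayesian model is a toy ensemble of `S` posterior weight samples for binary logistic regression, uncertainty is measured by predictive entropy, and the helper names (`predictive_entropy`, `entropy_grad`, `path_attributions`) are hypothetical. The paper's in-distribution curves are not reproduced here. Instead, `path_attributions` accepts any differentiable path from the counterfactual to the input. The usage compares a straight line, as in integrated gradients, with an arbitrary curved path.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def predictive_entropy(x, W, b):
    """Entropy of the ensemble-averaged Bernoulli prediction at input x.

    W (S x d) and b (S,) are a hypothetical stand-in for S posterior samples
    of a logistic regression model."""
    p = sigmoid(W @ x + b).mean()
    return -(p * np.log(p) + (1.0 - p) * np.log(1.0 - p))


def entropy_grad(x, W, b):
    """Analytic gradient of predictive_entropy with respect to x."""
    ps = sigmoid(W @ x + b)                    # per-sample probabilities, shape (S,)
    p = ps.mean()                              # posterior predictive probability
    dH_dp = np.log((1.0 - p) / p)              # derivative of the binary entropy
    dp_dx = (ps * (1.0 - ps)) @ W / len(ps)    # mean over samples of p_s(1 - p_s) w_s
    return dH_dp * dp_dx


def path_attributions(path, W, b, steps=256):
    """Split H(path(1)) - H(path(0)) across input features by integrating the
    entropy gradient along the discretised curve path(t), t in [0, 1]."""
    ts = np.linspace(0.0, 1.0, steps + 1)
    points = np.array([path(t) for t in ts])   # shape (steps + 1, d)
    attr = np.zeros(points.shape[1])
    for a, c in zip(points[:-1], points[1:]):
        mid = 0.5 * (a + c)                    # midpoint rule on each segment
        attr += entropy_grad(mid, W, b) * (c - a)
    return attr


# Usage with random "posterior samples" and a hypothetical counterfactual.
rng = np.random.default_rng(0)
W = rng.normal(size=(20, 3))
b = rng.normal(size=20)
x = np.array([1.0, -0.5, 2.0])
x_cf = np.zeros(3)
bump = np.array([1.5, 1.0, -1.0])  # arbitrary detour, not an in-distribution curve


def line(t):
    return x_cf + t * (x - x_cf)


def curve(t):
    return x_cf + t * (x - x_cf) + np.sin(np.pi * t) * bump


attr_line = path_attributions(line, W, b)
attr_curve = path_attributions(curve, W, b)
H_diff = predictive_entropy(x, W, b) - predictive_entropy(x_cf, W, b)
print(attr_line, attr_line.sum(), H_diff)
print(attr_curve, attr_curve.sum())
```

Because each attribution vector is a discretised line integral of a gradient, its components sum (up to discretisation error) to H(x) - H(x_cf) for any path with those endpoints. This is the completeness property familiar from integrated gradients. The choice of path changes only how that difference is split among the features, which is why the choice of curve matters.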

翻译:对模型不确定性的有利解释在巴耶斯机器学习应用中至关重要。 这通常需要将预测不确定性有意义地归因于图像、文本或绝对阵列中的特性来源。 但是,流行的归属方法是特别为分类和回归分而设计的。 为了解释不确定性,通常采购反事实特质矢量的最先进的替代方法的状况,并直接进行比较。 在本文中,我们利用路径的内在组成部分来将巴耶斯不同模型中的不确定性归因于巴耶斯不同模型。 我们提出了一个新奇的算法,它依赖于将特征矢量连接到某些反事实对应方的分布曲线,我们保留了可解释方法的可取特性。我们用不同分辨率验证我们的基准图像数据集方法,并表明它大大简化了现有替代品的可解释性。