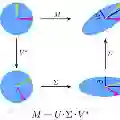

Random projections (RPs) have recently emerged as popular techniques in the machine learning community for their ability to reduce the dimension of very high-dimensional tensors. Following the work in [29], we consider a tensorized random projection relying on the Tensor Train (TT) decomposition, where each element of the core tensors is drawn from a Rademacher distribution. Our theoretical results reveal that the Gaussian low-rank tensor represented in compressed TT form in [29] can be replaced by a TT tensor whose core elements are drawn from a Rademacher distribution, with the same embedding size. Experiments on synthetic data demonstrate that the tensorized Rademacher RP can outperform the tensorized Gaussian RP studied in [29]. In addition, we show, both theoretically and experimentally, that the tensorized RP in the Matrix Product Operator (MPO) format proposed in [5] for performing SVD on large matrices is not a Johnson-Lindenstrauss transform (JLT) and is therefore not a well-suited random projection map.
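
The tensorized Rademacher RP can be illustrated with a short NumPy sketch. It assumes the map f(X) = (1/√k) [⟨T_1, X⟩, …, ⟨T_k, X⟩], where each T_i is a TT tensor of uniform rank R whose core entries are independent ±1 values. The extra factor 1/√(R^(N−1)) is chosen here so that E‖f(X)‖² = ‖X‖², one of the properties a JLT must satisfy; the exact normalization used in [29] may differ. The function names `rademacher_tt_cores`, `tt_inner` and `tt_rademacher_rp` are hypothetical.

```python
import numpy as np


def rademacher_tt_cores(dims, rank, rng):
    """Draw TT cores whose entries are i.i.d. Rademacher (+1 or -1 with prob. 1/2).

    Core n has shape (r_{n-1}, d_n, r_n) with boundary ranks r_0 = r_N = 1
    and all interior ranks equal to `rank`.
    """
    ranks = [1] + [rank] * (len(dims) - 1) + [1]
    return [
        rng.choice([-1.0, 1.0], size=(ranks[n], d, ranks[n + 1]))
        for n, d in enumerate(dims)
    ]


def tt_inner(cores, X):
    """Return <T, X> for a TT tensor T (given by its cores) and a dense tensor X.

    X is contracted one mode at a time, so the full tensor T is never formed.
    """
    V = X[np.newaxis, ...]  # shape (r_0 = 1, d_1, ..., d_N)
    for G in cores:
        # Sum over the incoming rank index and the current mode index:
        # (r_{n-1}, d_n, r_n) x (r_{n-1}, d_n, d_{n+1}, ...) -> (r_n, d_{n+1}, ...)
        V = np.tensordot(G, V, axes=([0, 1], [0, 1]))
    return float(V[0])  # remaining shape is (r_N = 1,)


def tt_rademacher_rp(X, k, rank, seed=0):
    """Map X to R^k using k independent Rademacher TT tensors.

    The factor 1 / sqrt(k * rank**(N-1)) makes E[||f(X)||^2] = ||X||^2.
    """
    rng = np.random.default_rng(seed)
    N = X.ndim
    scale = 1.0 / np.sqrt(k * rank ** (N - 1))
    return scale * np.array(
        [tt_inner(rademacher_tt_cores(X.shape, rank, rng), X) for _ in range(k)]
    )


if __name__ == "__main__":
    # Average squared-norm ratio over independent draws of the projection.
    X = np.random.default_rng(42).standard_normal((4, 4, 4))
    ratios = [
        np.sum(tt_rademacher_rp(X, k=20, rank=3, seed=s) ** 2) / np.sum(X ** 2)
        for s in range(200)
    ]
    print("mean ||f(X)||^2 / ||X||^2:", np.mean(ratios))
```

Contracting X core by core keeps the cost proportional to the size of X times R², instead of materializing each T_i. With ±1 entries, E[T(i)²] = R^(N−1) for every multi-index i, and distinct entries are uncorrelated. This is why the single rescaling factor suffices for norm preservation in expectation.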
