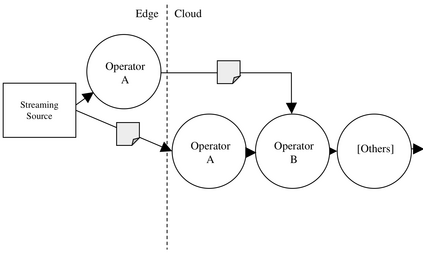

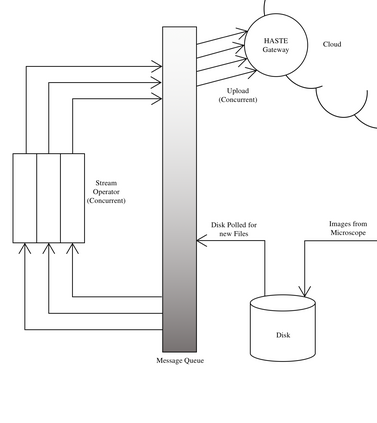

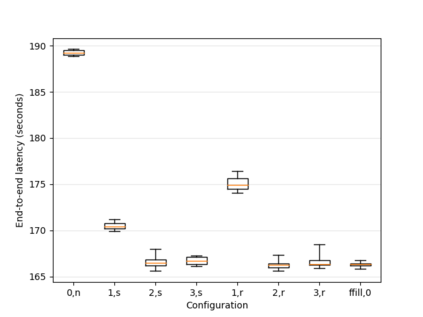

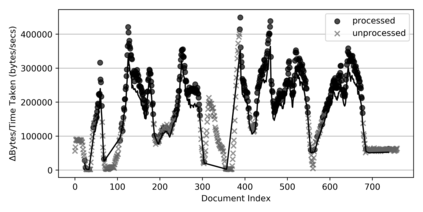

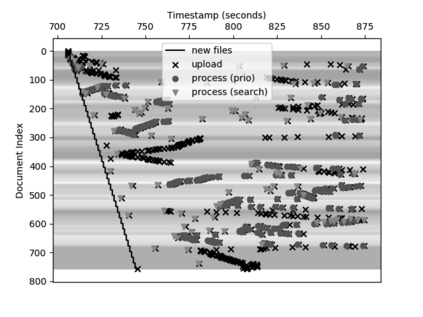

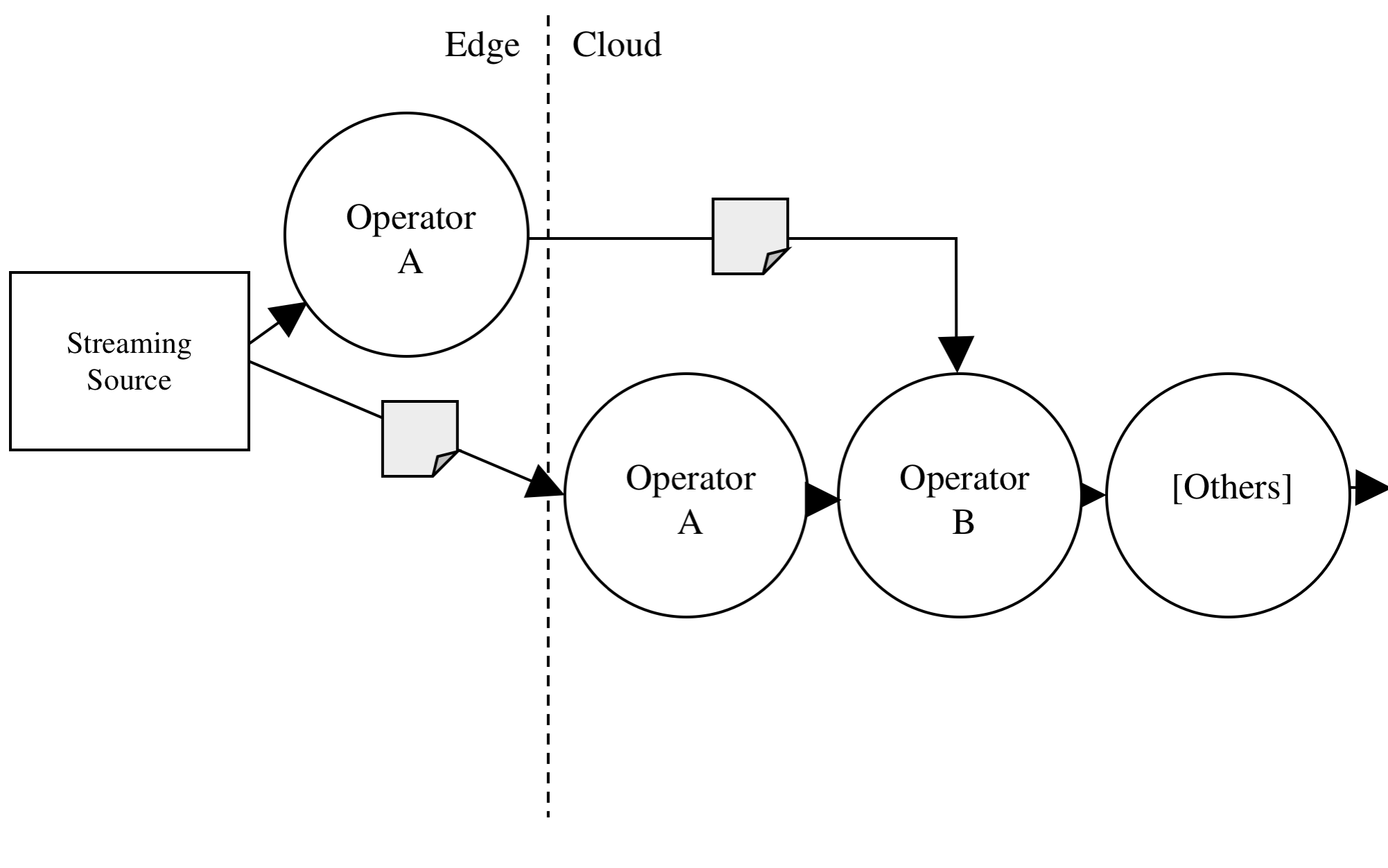

Computational resources at the cloud edge can be leveraged to improve latency and reduce the costs of cloud services for a wide variety of mobile, web, and IoT applications, but such resources are naturally constrained. For distributed stream processing applications, there are clear advantages to offloading some processing work to the cloud edge. Many state-of-the-art stream processing frameworks, such as Flink and Spark Streaming, were designed to run exclusively in the cloud and are a poor fit for such hybrid edge/cloud deployment settings, not least because their schedulers take limited account of the heterogeneous hardware in such deployments. In particular, their schedulers broadly assume a homogeneous network topology (aside from data locality considerations in, e.g., HDFS/Spark). Specialized stream processing frameworks intended for such hybrid deployment scenarios, especially IoT applications, allow developers to manually allocate specific operators in the pipeline to nodes at the cloud edge. In this paper, we investigate scheduling stream processing in hybrid cloud/edge deployment settings with sensitivity to CPU costs and message size, with the aim of maximizing throughput with respect to limited edge resources. We demonstrate real-time edge processing of a stream of electron microscopy images and, in benchmarks, measure a consistent reduction in end-to-end latency under our approach versus a resource-agnostic baseline scheduler.
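The abstract does not describe the scheduler itself, so the following is only a minimal sketch of the trade-off it names (CPU cost versus message size under limited edge resources), not the authors' method. It assumes a linear pipeline and picks how many leading operators to run at the edge so that the message forwarded to the cloud is as small as possible without exceeding an edge CPU budget. The `Operator` fields, the `choose_edge_prefix` function, and all numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class Operator:
    name: str
    cpu_cost: float     # hypothetical: CPU-seconds needed per message
    output_bytes: int   # hypothetical: average size of the emitted message


def choose_edge_prefix(
    operators: Sequence[Operator],
    input_bytes: int,
    edge_cpu_budget: float,
) -> Tuple[int, int]:
    """Return (number of leading operators to run at the edge,
    bytes forwarded to the cloud per message).

    Walks the pipeline in order, accumulating CPU cost, and remembers the
    affordable cut point that forwards the smallest message.
    """
    best_cut, best_bytes = 0, input_bytes  # cut 0: forward the raw input
    cpu_used = 0.0
    for i, op in enumerate(operators, start=1):
        cpu_used += op.cpu_cost
        if cpu_used > edge_cpu_budget:
            break  # the edge cannot afford this operator or any later one
        if op.output_bytes < best_bytes:
            best_cut, best_bytes = i, op.output_bytes
    return best_cut, best_bytes


# Illustrative pipeline with made-up costs and sizes.
pipeline = [
    Operator("decode", cpu_cost=0.02, output_bytes=4_000_000),
    Operator("downsample", cpu_cost=0.05, output_bytes=500_000),
    Operator("segment", cpu_cost=0.40, output_bytes=50_000),
]
print(choose_edge_prefix(pipeline, input_bytes=2_000_000, edge_cpu_budget=0.10))
# -> (2, 500000)
```

With a budget of 0.10 CPU-seconds per message, the sketch runs `decode` and `downsample` at the edge and leaves `segment` for the cloud: decoding alone would enlarge the message, while segmentation would exceed the budget. A resource-agnostic scheduler has no basis for making this distinction.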
