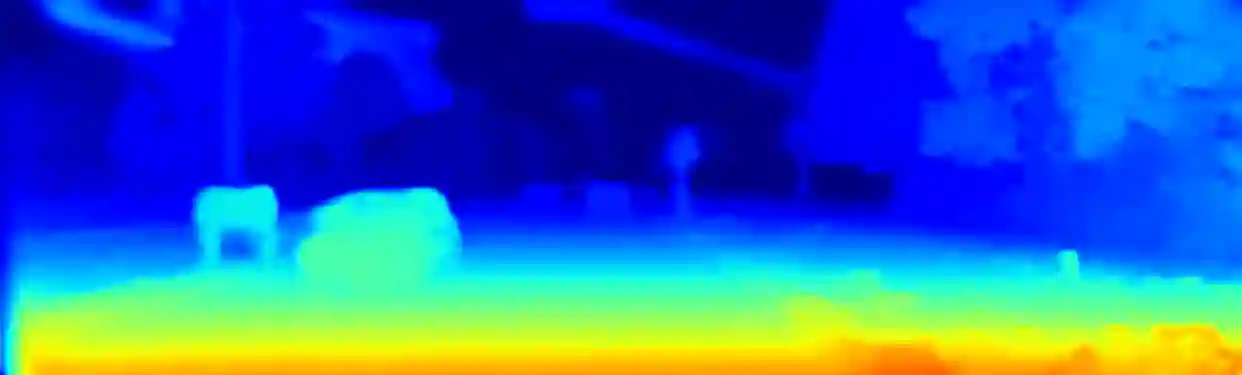

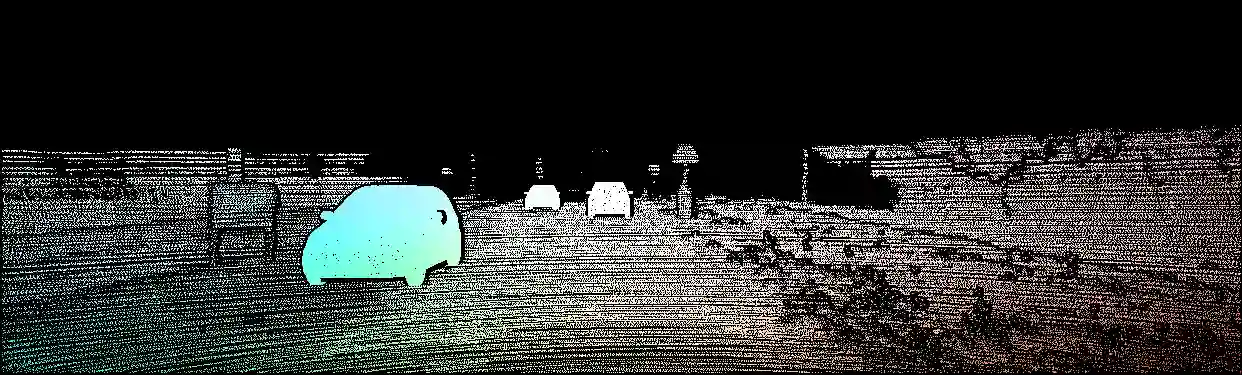

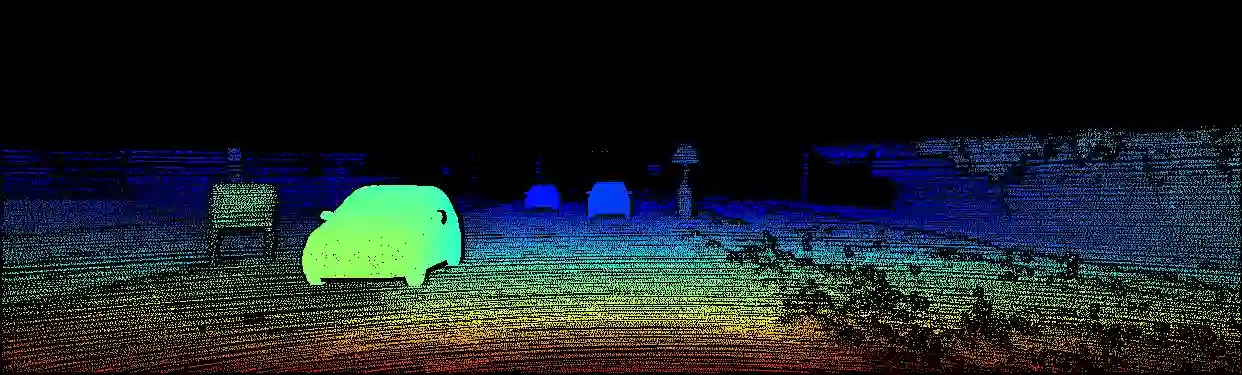

In recent years, deep neural networks showed their exceeding capabilities in addressing many computer vision tasks including scene flow prediction. However, most of the advances are dependent on the availability of a vast amount of dense per pixel ground truth annotations, which are very difficult to obtain for real life scenarios. Therefore, synthetic data is often relied upon for supervision, resulting in a representation gap between the training and test data. Even though a great quantity of unlabeled real world data is available, there is a huge lack in self-supervised methods for scene flow prediction. Hence, we explore the extension of a self-supervised loss based on the Census transform and occlusion-aware bidirectional displacements for the problem of scene flow prediction. Regarding the KITTI scene flow benchmark, our method outperforms the corresponding supervised pre-training of the same network and shows improved generalization capabilities while achieving much faster convergence.

翻译:近些年来,深神经网络在完成包括现场流预测在内的许多计算机视觉任务方面表现出了超能力,但是,大部分进展取决于能否获得大量密度很高的像素地面真实说明,而实际生活中很难获得这些说明,因此,合成数据往往依靠监督,造成培训和测试数据之间的代表差距,尽管有大量未贴标签的真实世界数据,但缺乏自我监督的现场流预测方法。因此,我们探索根据普查变换和隔离觉察双向迁移扩大自我监督的损失范围,以预测现场流的问题。关于KITTI现场流动基准,我们的方法超过了对同一网络进行的相应监督前培训,并显示在更快地实现汇合的同时,总体能力得到了提高。

相关内容

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions to view or manipulate inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from a third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem