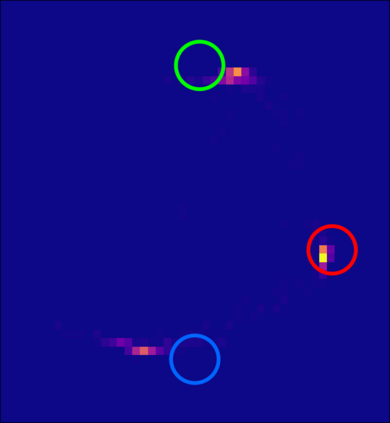

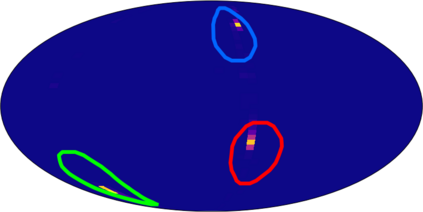

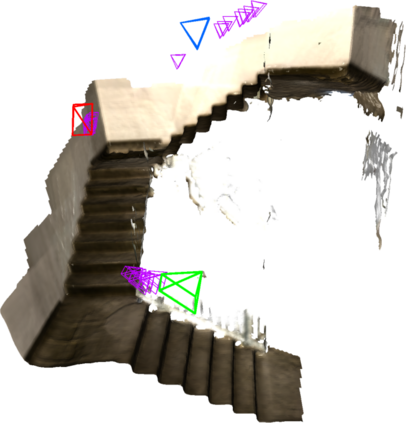

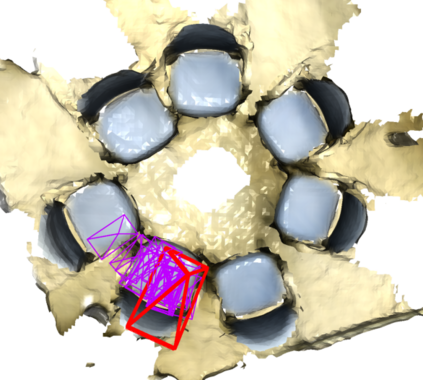

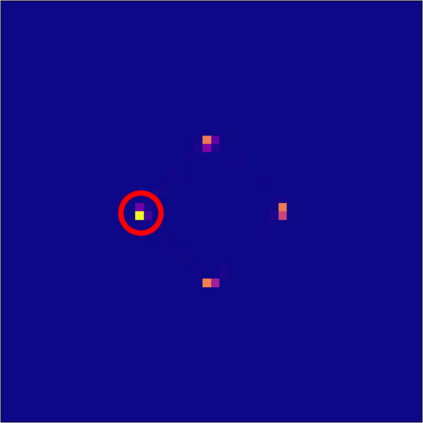

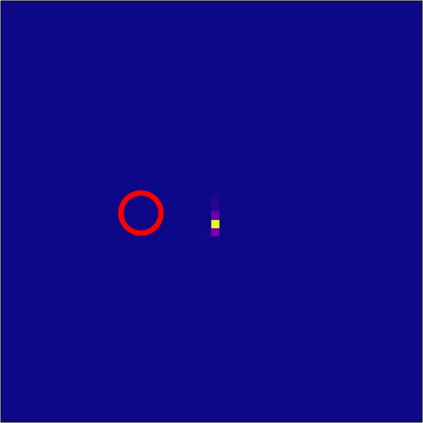

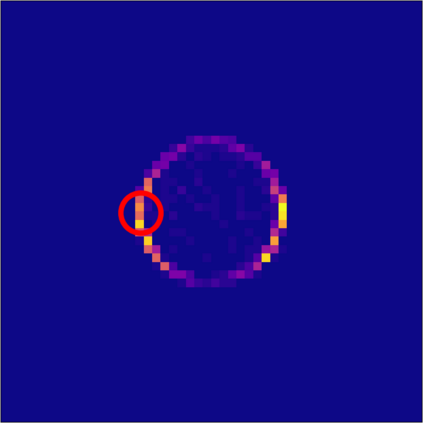

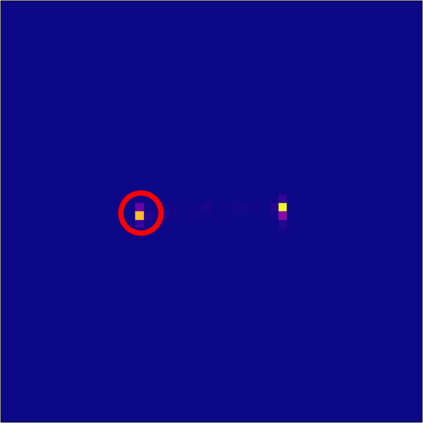

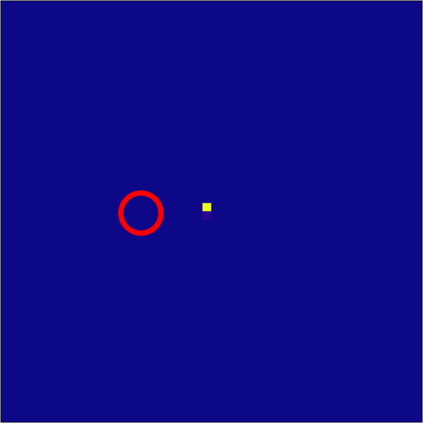

Visual localization allows autonomous robots to relocalize after losing track of their pose by matching their current observation against past ones. Ambiguous scenes, however, pose a challenge for such systems: repetitive structures can be viewed from many distinct, equally likely camera poses, so producing a single best pose hypothesis is not sufficient. In this work, we propose a probabilistic framework that, for a given image, predicts the arbitrarily shaped posterior distribution of its camera pose. We do this via a novel formulation of camera pose regression using variational inference, which allows sampling from the predicted distribution. Our method outperforms existing methods on localization in ambiguous scenes. Code and data will be released at https://github.com/efreidun/vapor.
