Latest ACL 2022 Tutorial from Meta, Microsoft, and Others: "Non-Autoregressive Sequence Generation" (168 Pages of Slides)

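ACL is the premier international conference in computational linguistics and natural language processing. It is organized by the Association for Computational Linguistics and held once a year. ACL has long ranked first in academic influence within the NLP field, and it is also a CCF-A recommended conference. This year's ACL is the 60th edition, held from May 22 to May 27 in Dublin, Ireland.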

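ACL 2022 paper awards announced: Berkeley takes Best Paper, and teams of Chinese researchers including Danqi Chen and Diyi Yang receive Outstanding Paper awards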

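The latest ACL 2022 tutorial from Meta, Microsoft, and others, "Non-Autoregressive Sequence Generation" (168 pages of slides), is well worth a look!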

https://nar-tutorial.github.io/acl2022/

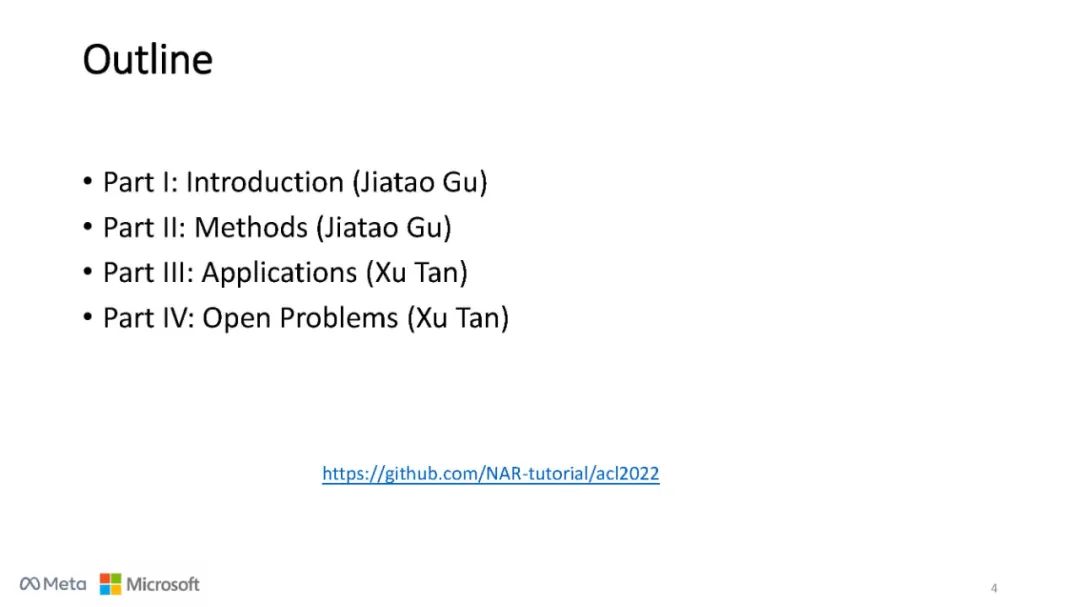

Table of contents:

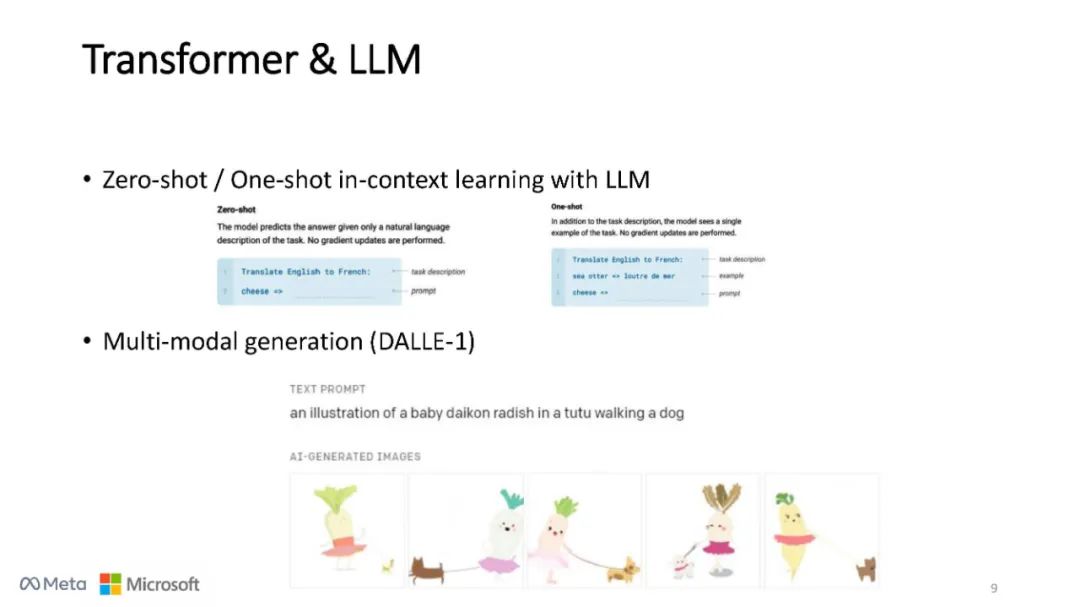

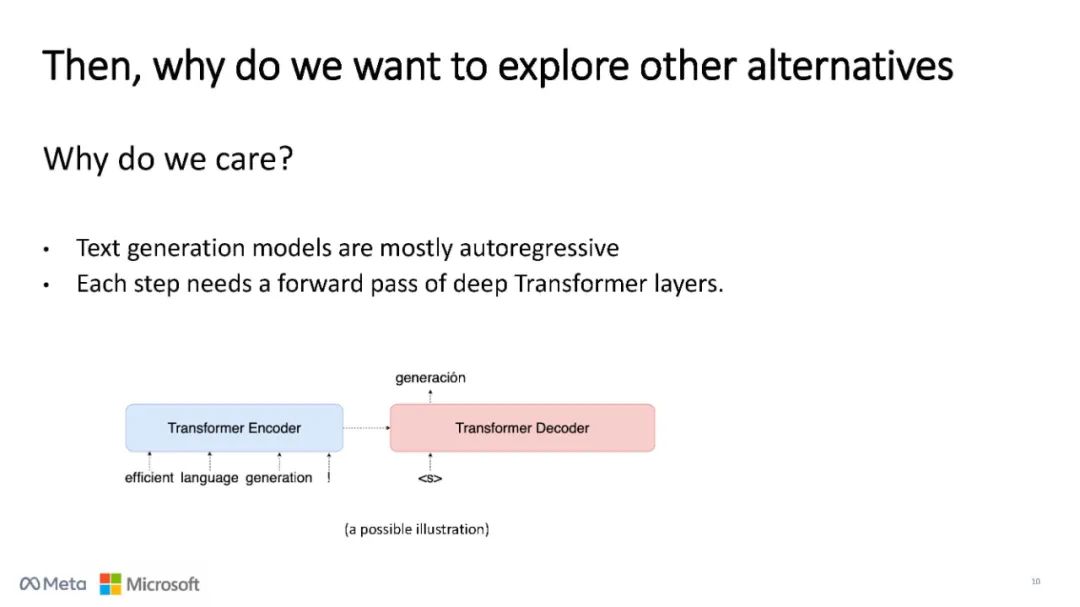

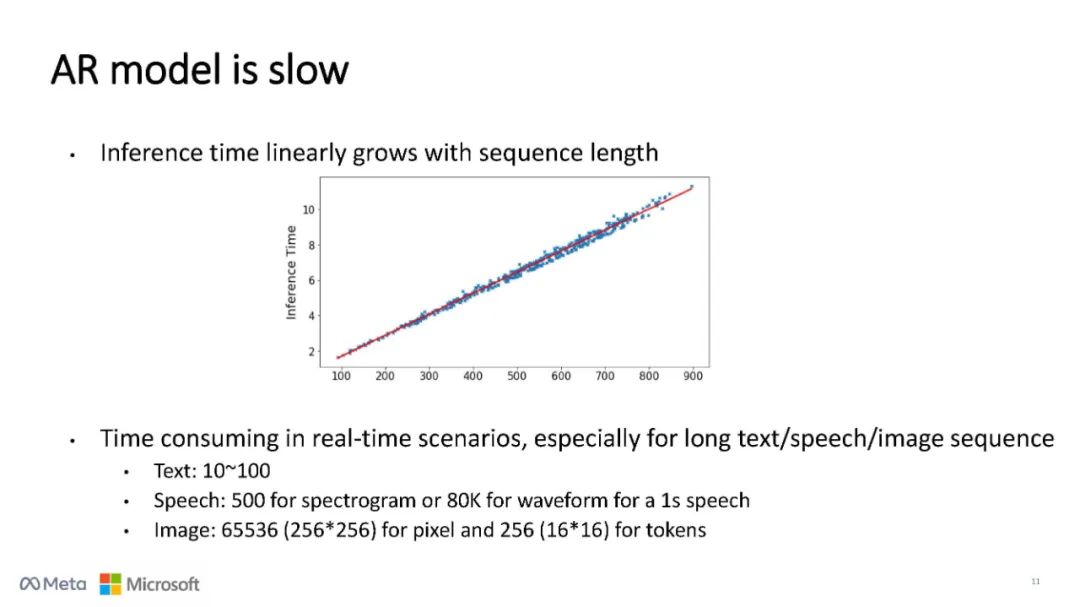

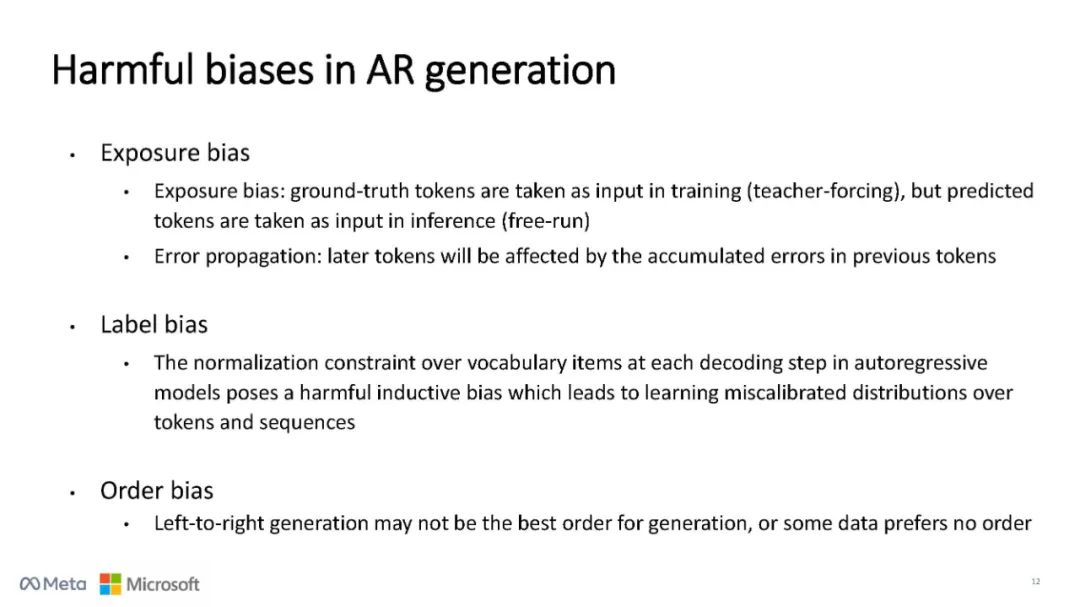

Introduction (~ 20 minutes)

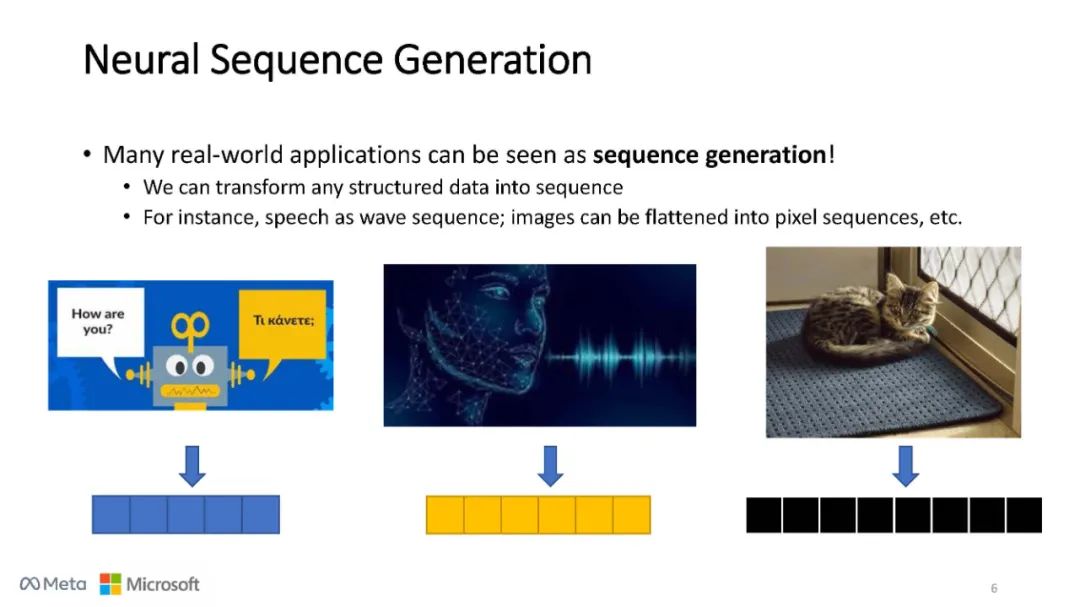

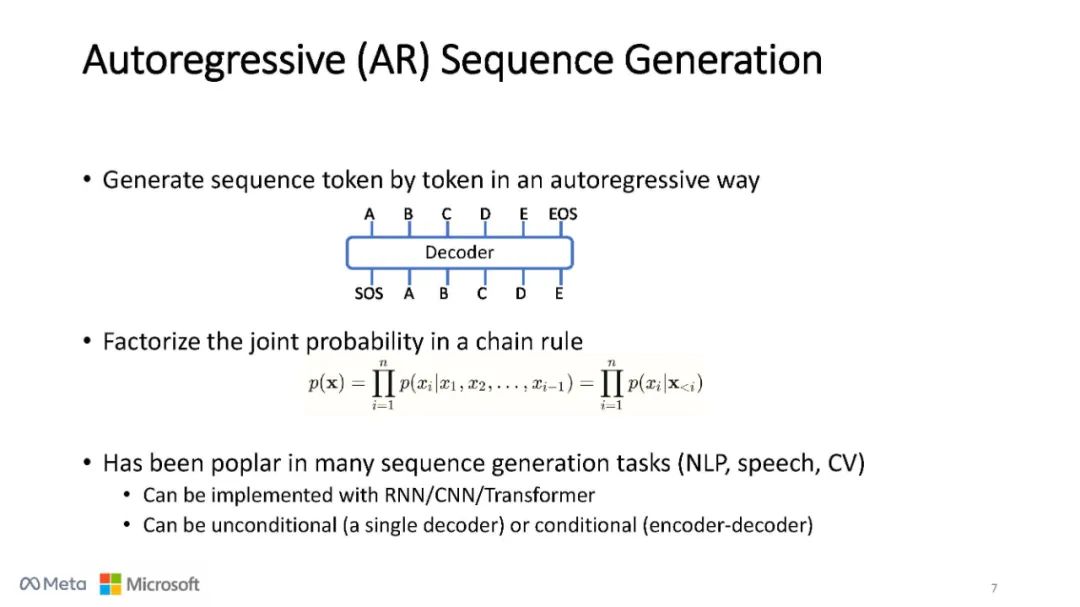

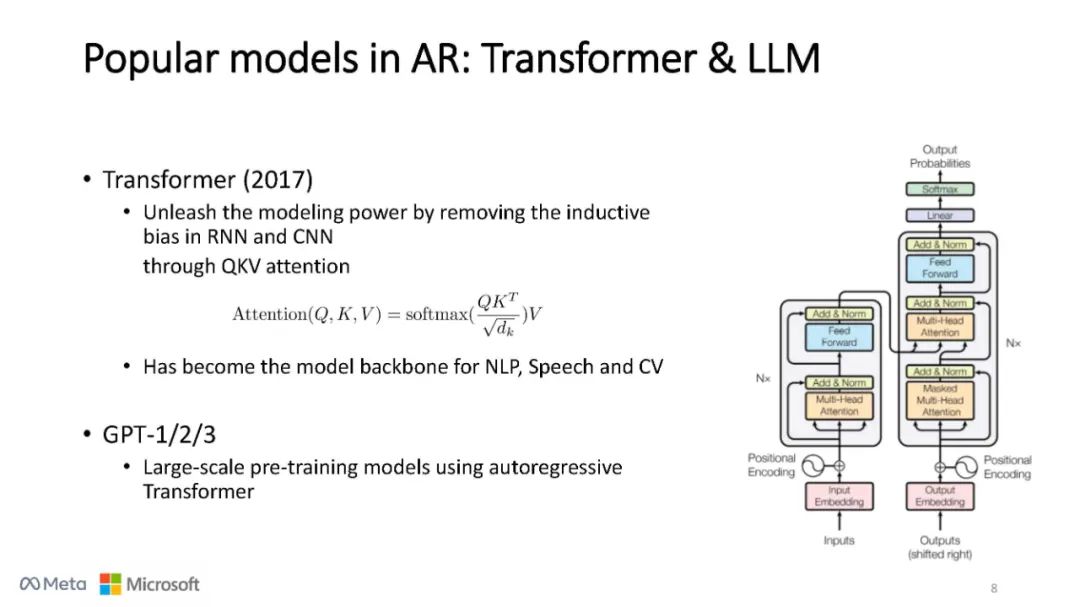

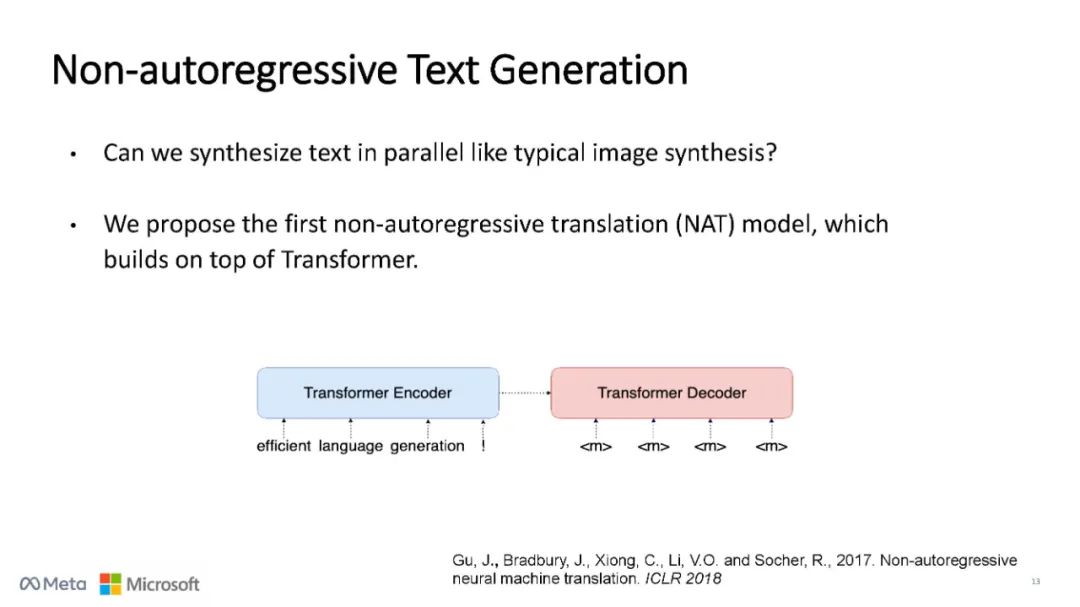

1.1 Problem definition

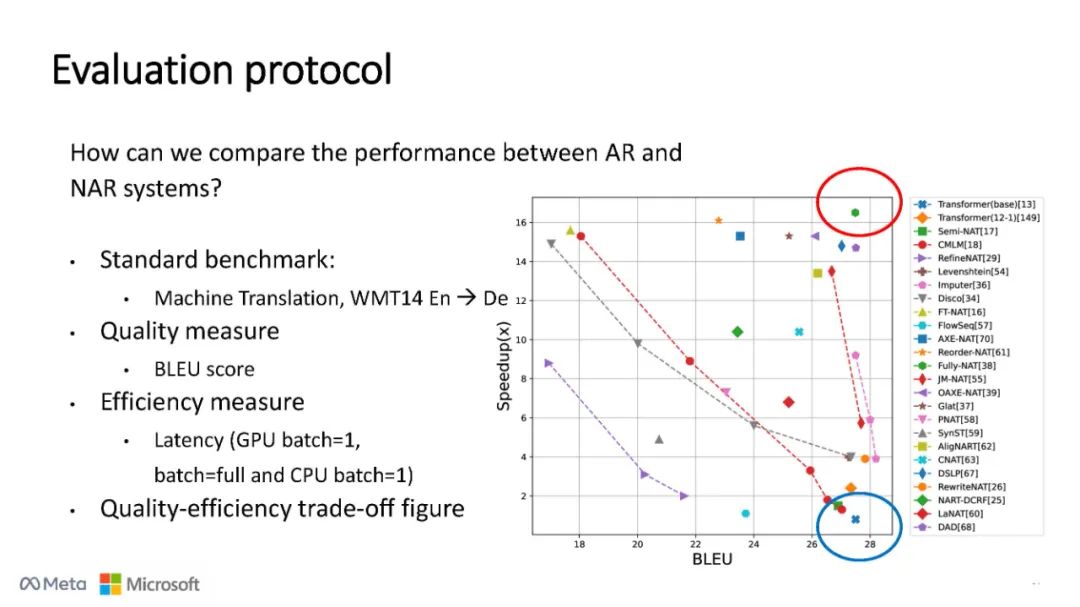

1.2 Evaluation protocol

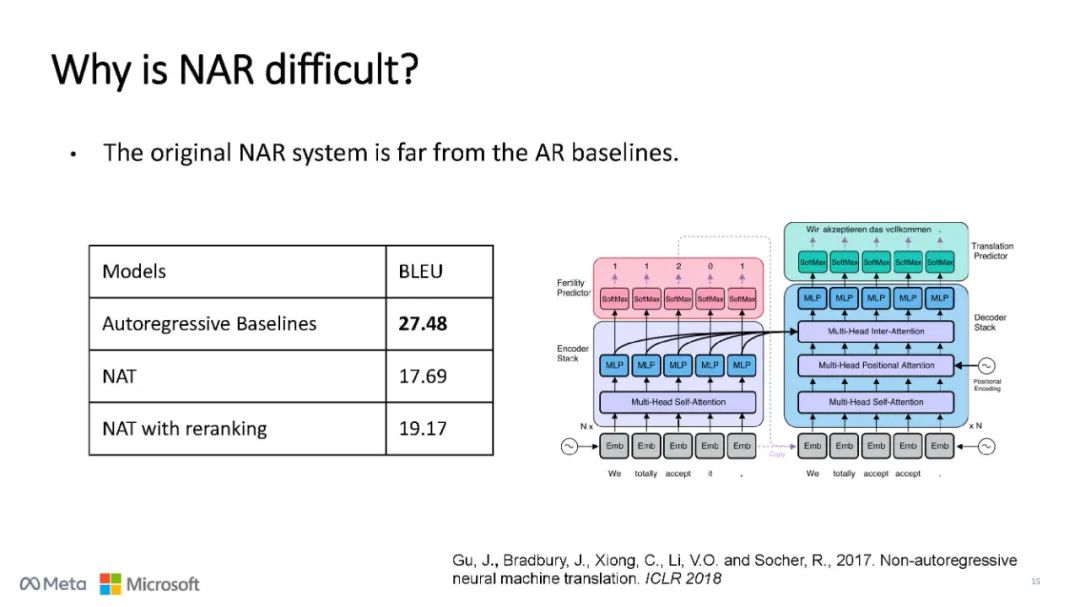

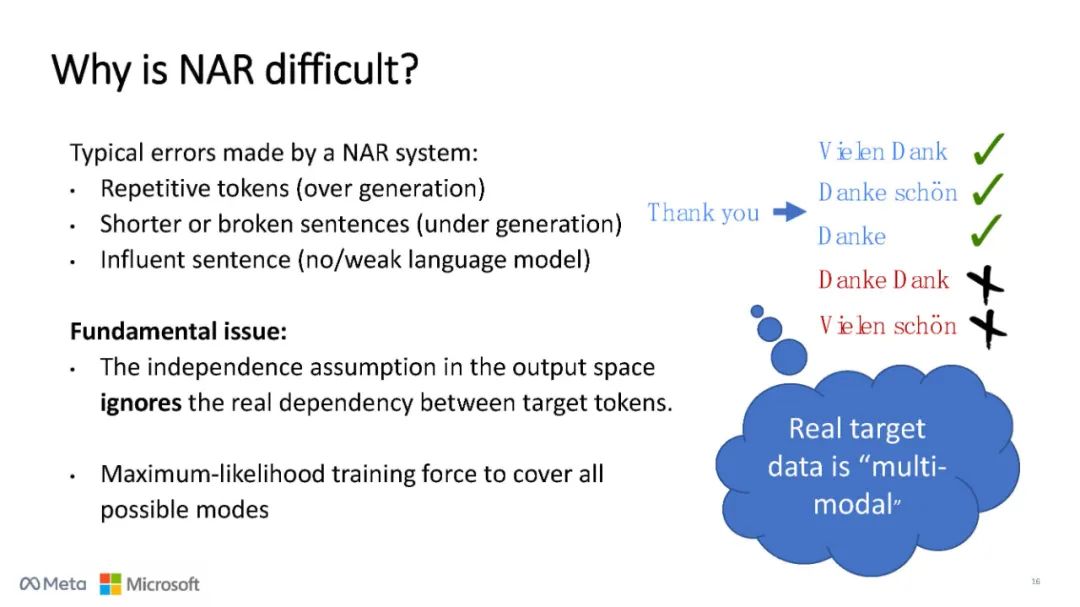

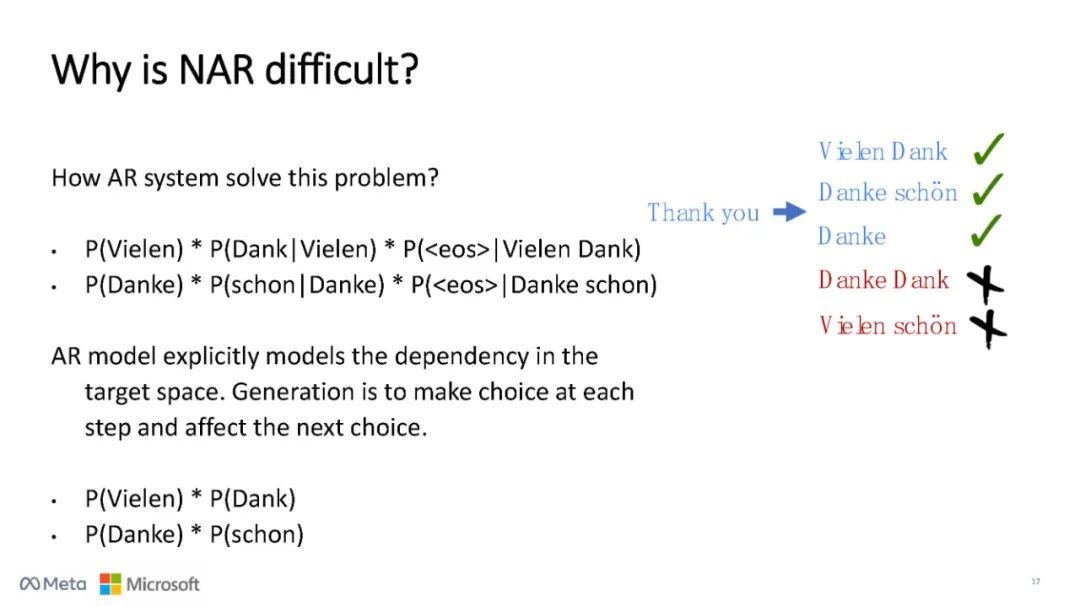

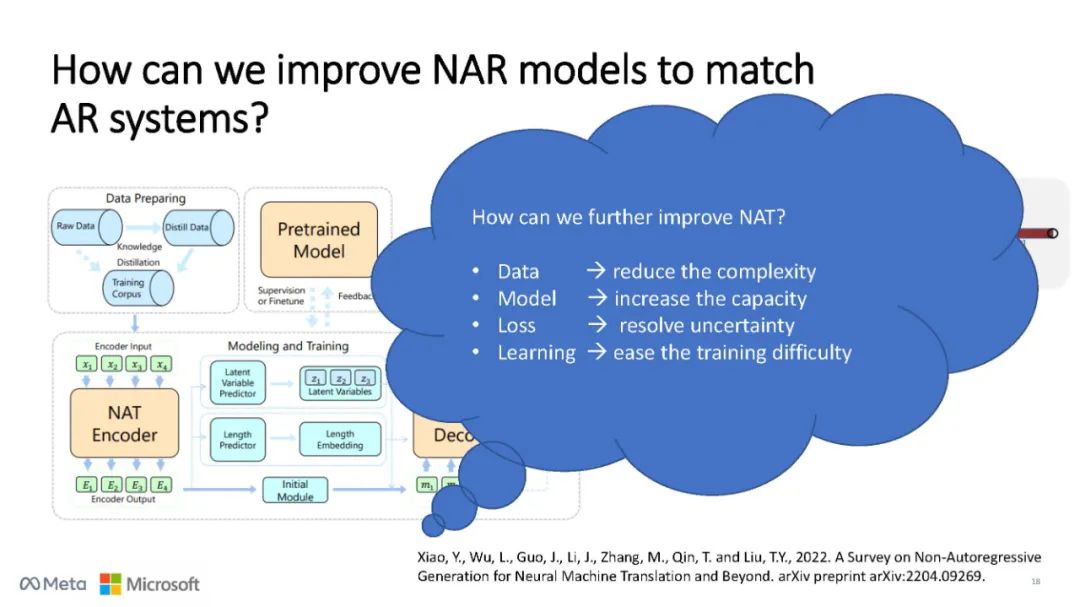

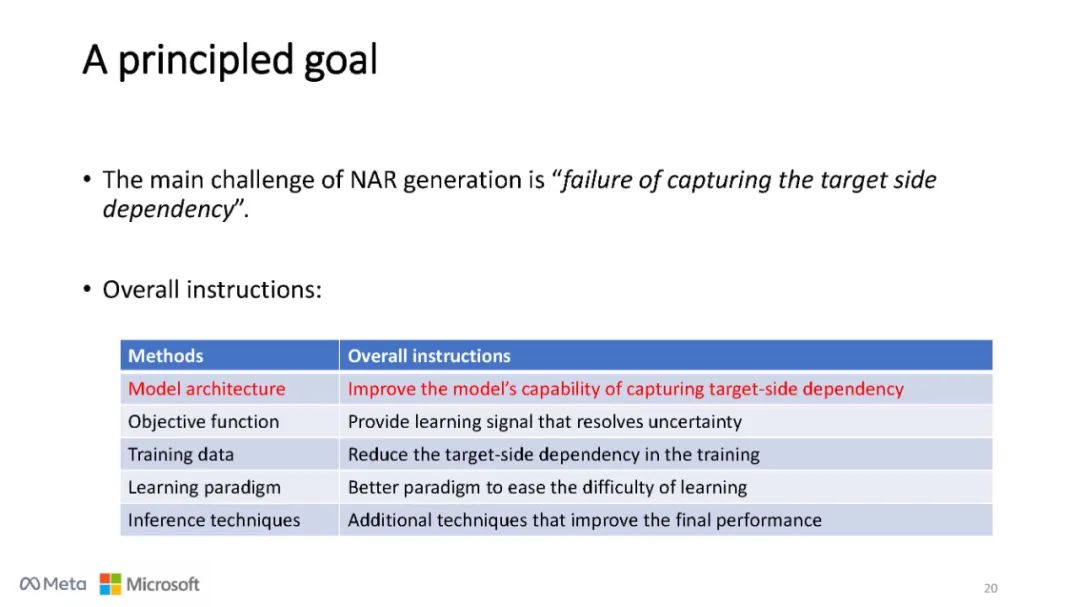

1.3 Multi-modality problem

Methods (~ 80 minutes)

2.1 Model architectures

2.1.1 Fully NAR models

2.1.2 Iteration-based NAR models

2.1.3 Partially NAR models

2.1.4 Locally AR models

2.1.5 NAR models with latent variables

2.2 Objective functions

2.2.1 Loss with latent variables

2.2.2 Loss beyond token-level

2.3 Training data

2.4 Learning paradigms

2.4.1 Curriculum learning

2.4.2 Self-supervised pre-training

2.5 Inference methods and tricks

Applications (~ 60 minutes)

3.1 Task overview in text/speech/image generation

3.2 NAR generation tasks

3.2.1 Neural machine translation

3.2.2 Text error correction

3.2.3 Automatic speech recognition

3.2.4 Text to speech / singing voice synthesis

3.2.5 Image (pixel/token) generation

3.3 Summary of NAR Applications

3.3.1 Benefits of NAR for different tasks

3.3.2 Addressing target-target/source dependency

3.3.3 Data difficulty vs model capacity

3.3.4 Streaming vs NAR, AR vs iterative NAR

Open problems, future directions, Q&A (~ 20 minutes)

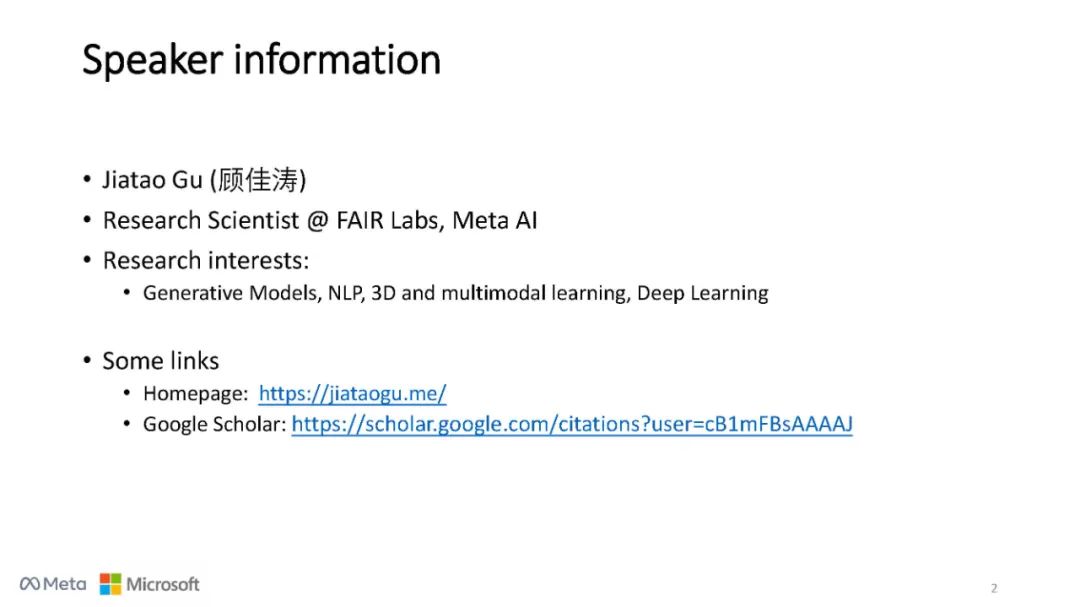

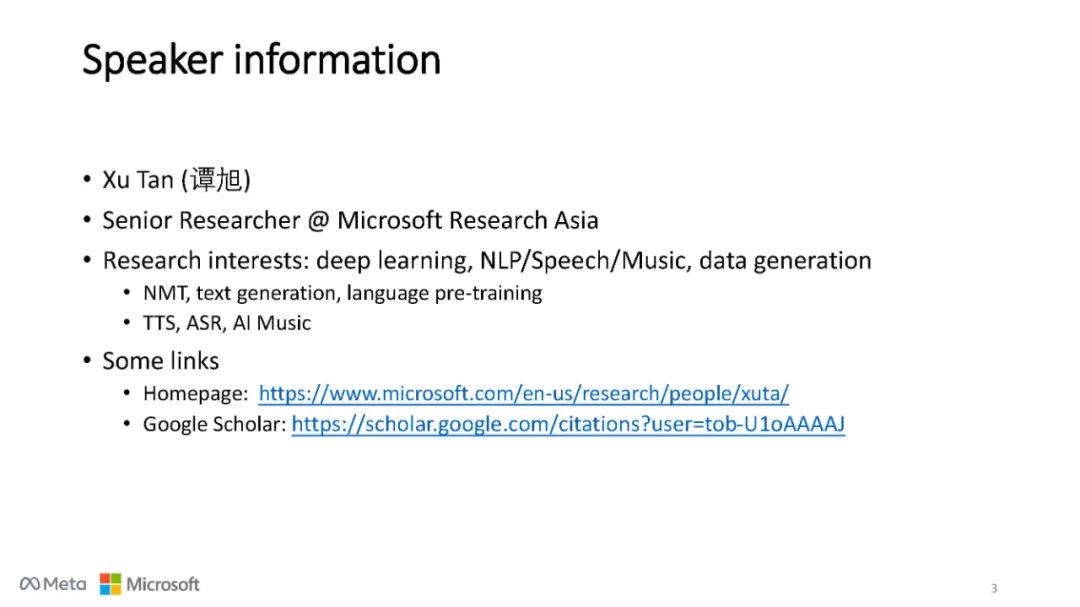

Speakers:

