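A Brief Look at Question Generation

© Author | Liu Lu

Affiliation | Beijing University of Posts and Telecommunications

Research interests | Question generation and QA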

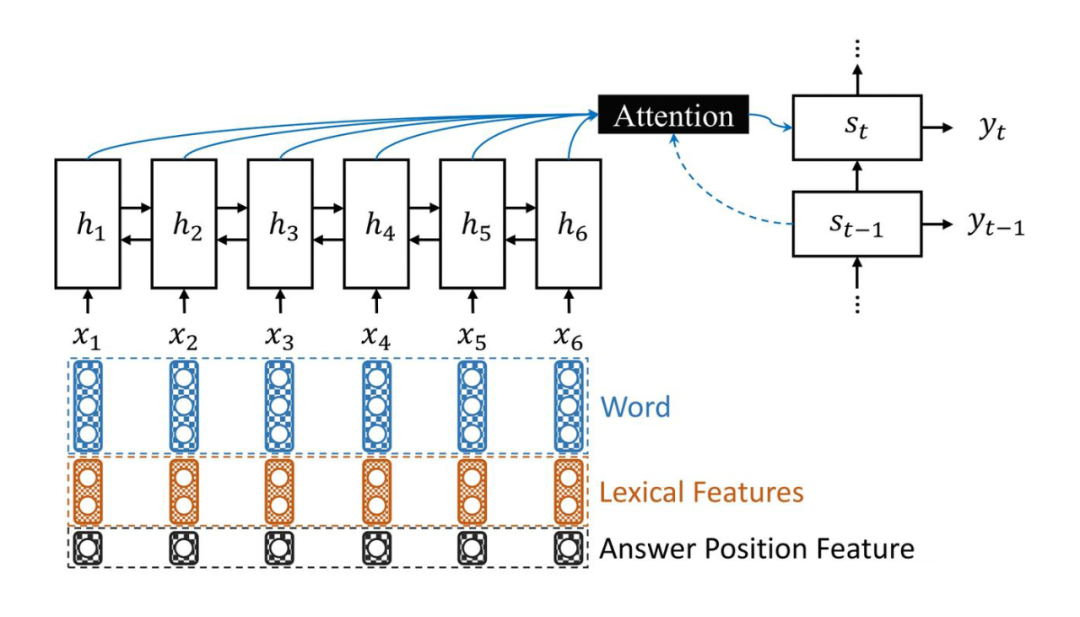

Answer-focused and Position-aware Neural Question Generation. EMNLP, 2018.

Improving Neural Question Generation Using Answer Separation. AAAI, 2019.

Answer-driven Deep Question Generation based on Reinforcement Learning. COLING, 2020.

Automatic Question Generation using Relative Pronouns and Adverbs. ACL, 2018.

Learning to Generate Questions by Learning What not to Generate. WWW, 2019.

Question Generation for Question Answering. EMNLP, 2017.

Answer-focused and Position-aware Neural Question Generation. EMNLP, 2018.

Question-type Driven Question Generation. EMNLP, 2019.

Harvesting paragraph-level question-answer pairs from wikipedia. ACL, 2018.

Leveraging Context Information for Natural Question Generation. ACL, 2018.

Paragraph-level Neural Question Generation with Maxout Pointer and Gated Self-attention Networks. EMNLP, 2018.

Capturing Greater Context for Question Generation. AAAI, 2020.

Identifying Where to Focus in Reading Comprehension for Neural Question Generation. EMNLP, 2017.

Neural Models for Key Phrase Extraction and Question Generation. ACL Workshop, 2018.

A Multi-Agent Communication Framework for Question-Worthy Phrase Extraction and Question Generation. AAAI, 2019.

Improving Question Generation With to the Point Context. EMNLP, 2019.

Teaching Machines to Ask Questions. IJCAI, 2018.

Natural Question Generation with Reinforcement Learning Based Graph-to-Sequence Model. NeurIPS Workshop, 2019.

Addressing Semantic Drift in Question Generation for Semi-Supervised Question Answering. EMNLP, 2019.

Exploring Question-Specific Rewards for Generating Deep Questions. COLING, 2020.

Answer-driven Deep Question Generation based on Reinforcement Learning. COLING, 2020.

Multi-Task Learning with Language Modeling for Question Generation. EMNLP, 2019.

How to Ask Good Questions? Try to Leverage Paraphrases. ACL, 2020.

Improving Question Generation with Sentence-level Semantic Matching and Answer Position Inferring. AAAI, 2020.

Variational Attention for Sequence-to-Sequence Models. ICML, 2018.

Generating Diverse and Consistent QA pairs from Contexts with Information-Maximizing Hierarchical Conditional VAEs. ACL, 2020.

On the Importance of Diversity in Question Generation for QA. ACL, 2020.

Unified Language Model Pre-training for Natural Language Understanding and Generation. NeurIPS, 2019.

UniLMv2: Pseudo-Masked Language Models for Unified Language Model Pre-Training. arXiv, 2020.

ERNIE-GEN: An Enhanced Multi-Flow Pre-training and Fine-tuning Framework for Natural Language Generation. IJCAI, 2020. (SOTA)

Addressing Semantic Drift in Question Generation for Semi-Supervised Question Answering. EMNLP, 2019.

Synthetic QA Corpora Generation with Roundtrip Consistency. ACL, 2019.

Template-Based Question Generation from Retrieved Sentences for Improved Unsupervised Question Answering. ACL, 2020.

Training Question Answering Models From Synthetic Data. EMNLP, 2020.

Embedding-based Zero-shot Retrieval through Query Generation. arXiv, 2020.

Towards Robust Neural Retrieval Models with Synthetic Pre-Training. arXiv, 2021.

End-to-End Synthetic Data Generation for Domain Adaptation of Question Answering Systems. EMNLP, 2020.

Improving Question Answering Model Robustness with Synthetic Adversarial Data Generation. ACL, 2021.

Back-Training excels Self-Training at Unsupervised Domain Adaptation of Question Generation and Passage Retrieval. arXiv, 2021.

Open-domain question answering with pre-constructed question spaces. NAACL, 2021.

Accelerating real-time question answering via question generation. AAAI, 2021.

PAQ: 65 Million Probably-Asked Questions and What You Can Do With Them. arXiv, 2021.

Improving Factual Consistency of Abstractive Summarization via Question Answering. ACL, 2021.

Zero-shot Fact Verification by Claim Generation. ACL, 2021.

Towards a Better Metric for Evaluating Question Generation Systems. EMNLP, 2018.

On the Importance of Diversity in Question Generation for QA. ACL, 2020.

Evaluating for Diversity in Question Generation over Text. arXiv, 2020.
