Is Generator Conditioning Causally Related to GAN Performance?

Abstract:

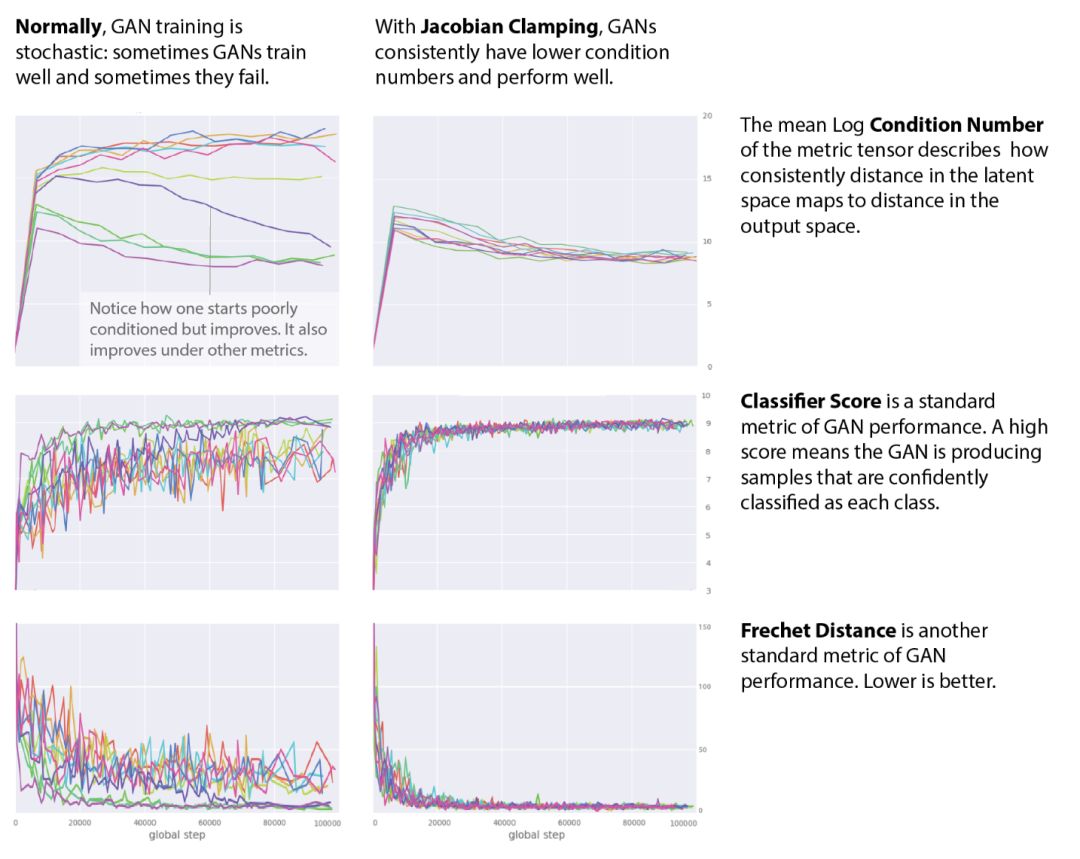

Recent work (Pennington et al., 2017) suggests that controlling the entire distribution of Jacobian singular values is an important design consideration in deep learning. Motivated by this, we study the distribution of singular values of the Jacobian of the generator in Generative Adversarial Networks (GANs). We find that this Jacobian generally becomes ill-conditioned at the beginning of training. Moreover, we find that the average (with z ∼ p(z)) conditioning of the generator is highly predictive of two other ad-hoc metrics for measuring the “quality” of trained GANs: the Inception Score and the Fréchet Inception Distance (FID). We test the hypothesis that this relationship is causal by proposing a “regularization” technique (called Jacobian Clamping) that softly penalizes the condition number of the generator Jacobian. Jacobian Clamping improves the mean Inception Score and the mean FID for GANs trained on several datasets. It also greatly reduces inter-run variance of the aforementioned scores, addressing (at least partially) one of the main criticisms of GANs.
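
To make the idea of softly penalizing the generator's conditioning concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the paper's implementation: the abstract does not spell out the penalty, so the sketch assumes it can be approximated by a finite-difference stretch ratio Q = ||G(z) − G(z′)|| / ||z − z′|| that is pushed into a target interval. The names `toy_generator`, `stretch_ratio`, `clamping_penalty` and the values of `eps`, `lam_min`, and `lam_max` are all hypothetical.

```python
import math
import random


def toy_generator(z):
    """Hypothetical stand-in for a generator: a fixed linear map R^2 -> R^2.

    Its Jacobian is diag(3, 0.5), so its singular values are 3 and 0.5
    and its condition number is 3 / 0.5 = 6.
    """
    return [3.0 * z[0], 0.5 * z[1]]


def norm(v):
    """Euclidean norm of a list of floats."""
    return math.sqrt(sum(x * x for x in v))


def stretch_ratio(generator, z, eps=1e-3, rng=random):
    """Finite-difference estimate of how much `generator` stretches
    one random direction at the latent point z (assumed approach)."""
    delta = [rng.gauss(0.0, 1.0) for _ in z]
    scale = eps / norm(delta)  # perturbation of length eps
    z_prime = [zi + scale * di for zi, di in zip(z, delta)]
    out_diff = [a - b for a, b in zip(generator(z), generator(z_prime))]
    in_diff = [a - b for a, b in zip(z, z_prime)]
    return norm(out_diff) / norm(in_diff)


def clamping_penalty(q, lam_min=1.0, lam_max=2.0):
    """Soft penalty: zero when lam_min <= q <= lam_max, quadratic outside.

    lam_min and lam_max are illustrative thresholds, not values from the source.
    """
    over = max(q, lam_max) - lam_max    # positive only if q > lam_max
    under = min(q, lam_min) - lam_min   # negative only if q < lam_min
    return over ** 2 + under ** 2


if __name__ == "__main__":
    rng = random.Random(0)
    q = stretch_ratio(toy_generator, [0.3, -0.7], rng=rng)
    print("stretch ratio:", q, "penalty:", clamping_penalty(q))
```

For the linear toy map, every stretch ratio lies between the smallest and largest singular values (0.5 and 3). If the ratio stayed inside [lam_min, lam_max] for every direction, the condition number would be at most lam_max / lam_min, which is why a penalty of this shape acts on conditioning without computing singular values explicitly. In a real GAN the penalty would be added to the generator loss and differentiated through the network, which this sketch does not attempt.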

https://www.arxiv-vanity.com/papers/1802.08768/