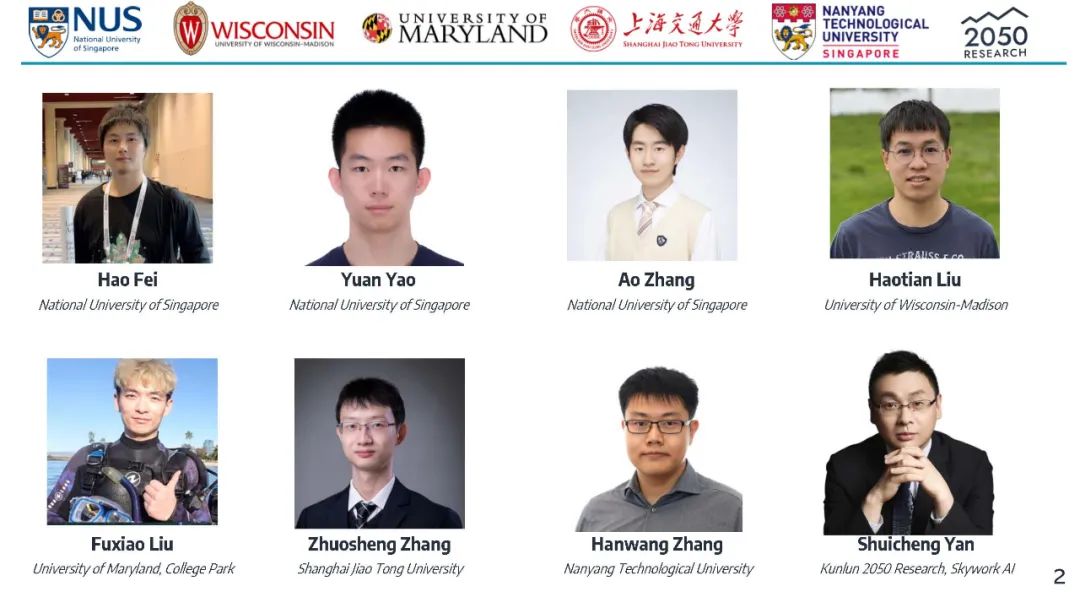

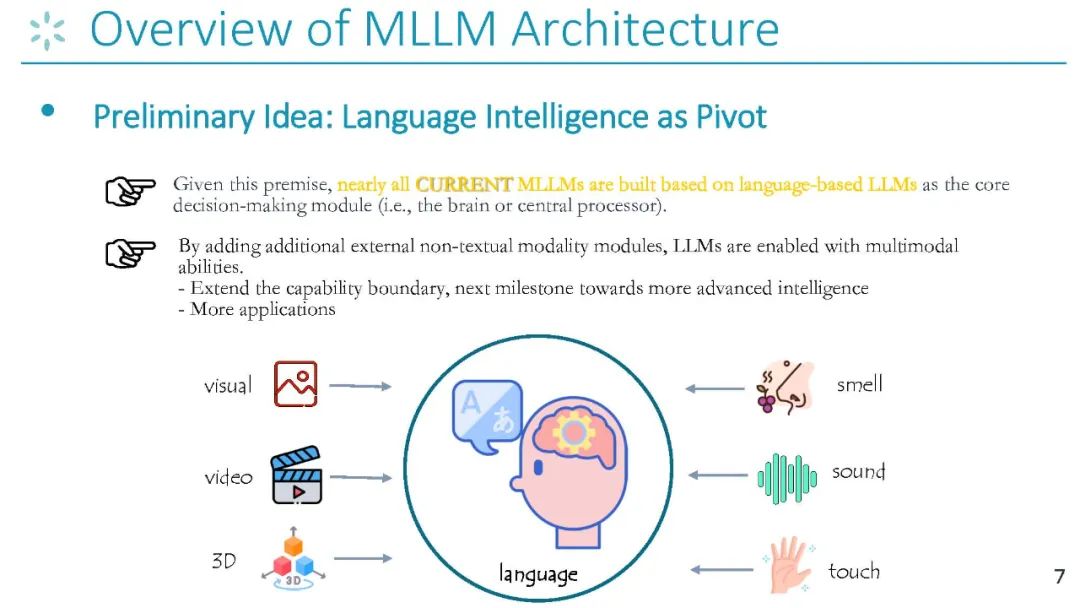

欢迎参加CVPR 2024的多模态大语言模型(MLLM)教程系列!人工智能(AI)涵盖了跨越多种模态的知识获取和现实世界的基础。作为一个多学科研究领域,多模态大语言模型(MLLM)最近在学术界和工业界引起了越来越多的关注,展示了通过MLLM实现人类水平AI的前所未有的趋势。这些大型模型通过整合和建模多种信息模态,包括语言、视觉、听觉和感官数据,提供了一个理解、推理和规划的有效工具。本教程旨在对MLLM领域的前沿研究进行全面回顾,重点关注四个关键领域:MLLM架构设计、指令学习与幻觉、多模态推理以及MLLM中的高效学习。我们将探讨技术进步,综合关键挑战,并讨论未来研究的潜在方向。

参考文献:

OpenAI, 2023, Introducing ChatGPT

OpenAI, 2023, GPT-4 Technical Report

Alayrac, et al., 2022, Flamingo: a Visual Language Model for Few-Shot Learning

Li, et al., 2023, BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models

Zhu, et al., 2023, MiniGPT-4: Enhancing Vision-Language Understanding with Advanced Large Language Models

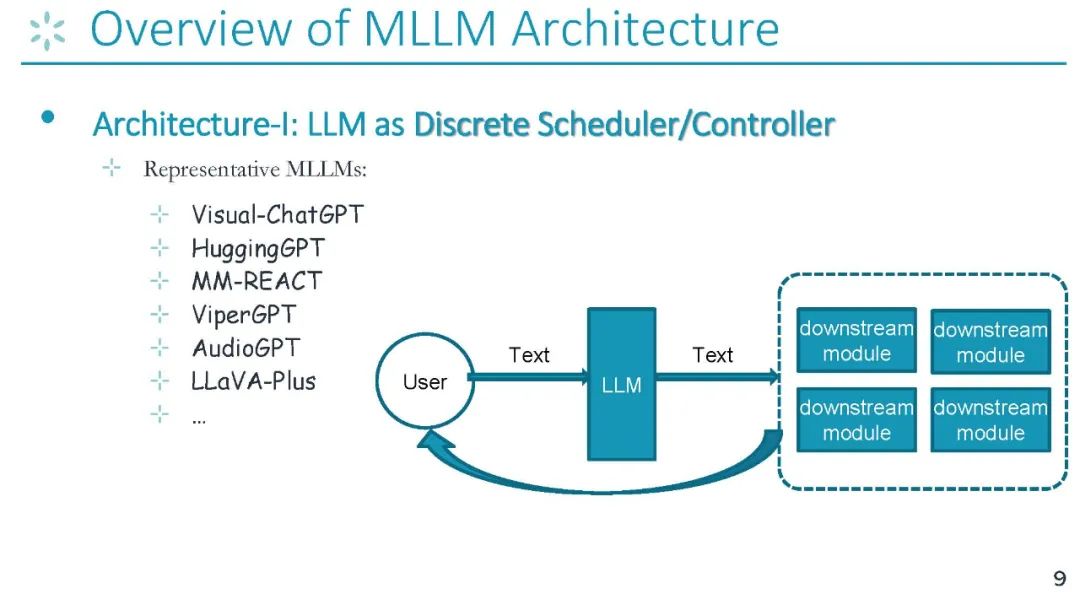

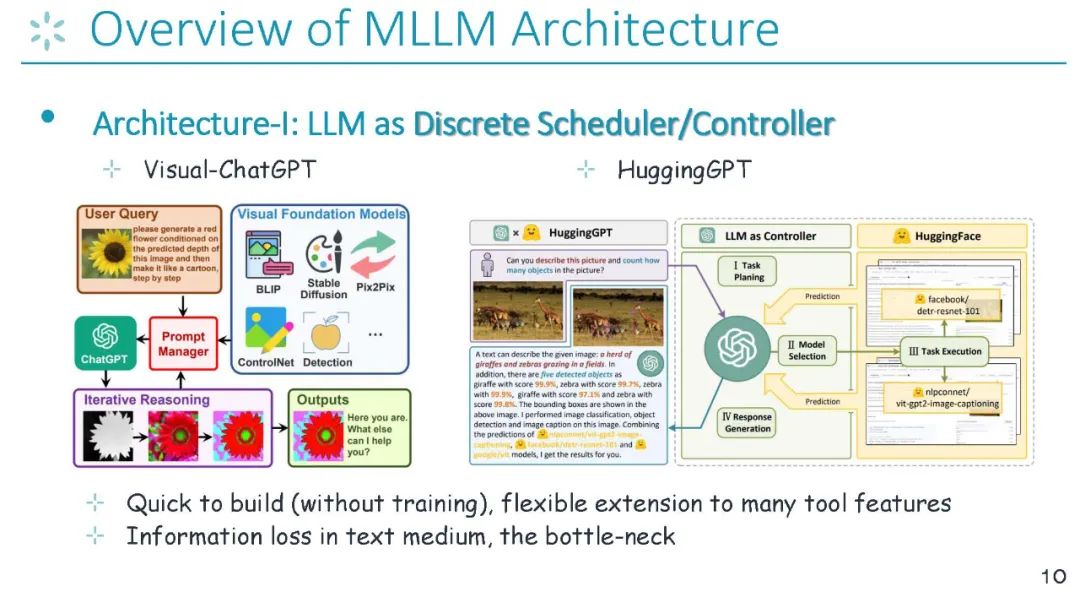

Wu, et al., 2023, Visual ChatGPT: Talking, Drawing and Editing with Visual Foundation Models

Shen, et al., 2023, HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face

Tang, et al., 2023, Any-to-Any Generation via Composable Diffusion

Girdhar, et al., 2023, ImageBind: One Embedding Space To Bind Them All

Wu, et al., 2023, NExT-GPT: Any-to-Any Multimodal LLM

Moon, et al., 2023, AnyMAL: An Efficient and Scalable Any-Modality Augmented Language Model

Hu, et al., 2023, Large Multilingual Models Pivot Zero-Shot Multimodal Learning across Languages

Bai, et al., 2023, Qwen-VL: A Versatile Vision-Language Model for Understanding, Localization, Text Reading, and Beyond

Wang, et al., 2023, CogVLM: Visual Expert for Pretrained Language Models

Peng, et al., 2023, Kosmos-2: Grounding Multimodal Large Language Models to the World

Dong, et al., 2023, InternLM-XComposer2: Mastering Free-form Text-Image Composition and Comprehension in Vision-Language Large Model

Zhu, et al., 2023, LanguageBind: Extending Video-Language Pretraining to N-modality by Language-based Semantic Alignment

Ge, et al., 2023, Planting a SEED of Vision in Large Language Model

Zhan, et al., 2024, AnyGPT: Unified Multimodal LLM with Discrete Sequence Modeling

Kondratyuk, et al., 2023, VideoPoet: A Large Language Model for Zero-Shot Video Generation

Zhang, et al., 2023, SpeechTokenizer: Unified Speech Tokenizer for Speech Large Language Models

Zeghidour, et al., 2021, SoundStream: An End-to-End Neural Audio Codec

Liu, et al., 2023, Improved Baselines with Visual Instruction Tuning

Wu, et al., 2023, Visual-ChatGPT: Talking, Drawing and Editing with Visual Foundation Models

Wang, et al., 2023, ModaVerse: Efficiently Transforming Modalities with LLMs

Fei, et al., 2024, VITRON: A Unified Pixel-level Vision LLM for Understanding, Generating, Segmenting, Editing

Lu, et al., 2023, Unified-IO 2: Scaling Autoregressive Multimodal Models with Vision, Language, Audio, and Action

Bai, et al., 2023, LVM: Sequential Modeling Enables Scalable Learning for Large Vision Models

Huang, et al., 2023, Language Is Not All You Need: Aligning Perception with Language Models

Li, et al., 2023, VideoChat: Chat-Centric Video Understanding

Maaz, et al., 2023, Video-ChatGPT: Towards Detailed Video Understanding via Large Vision and Language Models

Zhang, et al., 2023, Video-LLaMA: An Instruction-tuned Audio-Visual Language Model for Video Understanding

Lin, et al., 2023, Video-LLaVA: Learning United Visual Representation by Alignment Before Projection

Qian, et al., 2024, Momentor: Advancing Video Large Language Model with Fine-Grained Temporal Reasoning

专知便捷查看

便捷下载,请关注专知公众号(点击上方蓝色专知关注)

后台回复或发消息“R169” 就可以获取《【CVPR2024教程】推理的鲁棒性:走向可解释性、不确定性和可干预性,169页ppt****》专知下载链接

点击“阅读原文”,了解使用专知****,查看获取100000**+AI主题知识资料**