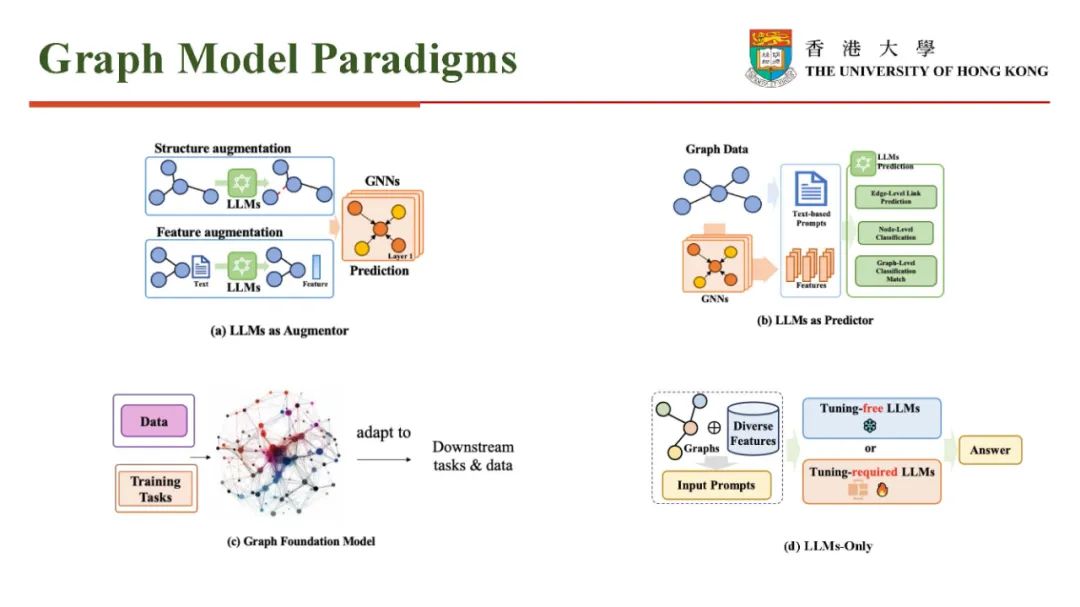

Section 1: GNNs as Prefix

**1.1 Node-level Tokenization**

- GraphGPT: Graph instruction tuning for large language models

- HiGPT: Heterogeneous Graph Language Model

- GraphTranslator: Aligning Graph Model to Large Language Model for Open-ended Tasks

- UniGraph: Learning a Cross-Domain Graph Foundation Model From Natural Language

- GIMLET: A unified graph-text model for instruction-based molecule zero-shot learning

- XRec: Large Language Models for Explainable Recommendation

**1.2 Graph-level Tokenization**

- GraphLLM: Boosting graph reasoning ability of large language model

- GIT-Mol: A multi-modal large language model for molecular science with graph, image, and text

- MolCA: Molecular graph-language modeling with cross-modal projector and uni-modal adapter

- InstructMol: Multi-modal integration for building a versatile and reliable molecular assistant in drug discovery

- G-Retriever: Retrieval-Augmented Generation for Textual Graph Understanding and Question Answering

- Graph neural prompting with large language models

Section 2: LLMs as Prefix

**2.1 Embeddings from LLMs for GNNs**

- Prompt-based node feature extractor for few-shot learning on text-attributed graphs

- SimTeG: A frustratingly simple approach improves textual graph learning

- Graph-aware language model pre-training on a large graph corpus can help multiple graph applications

- One for all: Towards training one graph model for all classification tasks

- Harnessing explanations: LLM-to-LM interpreter for enhanced text-attributed graph representation learning

- LLMRec: Large language models with graph augmentation for recommendation

**2.2 Labels from LLMs for GNNs**

- OpenGraph: Towards Open Graph Foundation Models

- Label-free node classification on graphs with large language models (LLMs)

- GraphEdit: Large Language Models for Graph Structure Learning

- Representation learning with large language models for recommendation

Section 3: LLMs-Graphs Integration

**3.1 Alignment between GNNs and LLMs**

- A molecular multimodal foundation model associating molecule graphs with natural language

- ConGraT: Self-supervised contrastive pretraining for joint graph and text embeddings

- Prompt tuning on graph-augmented low-resource text classification

- GRENADE: Graph-Centric Language Model for Self-Supervised Representation Learning on Text-Attributed Graphs

- Multi-modal molecule structure–text model for text-based retrieval and editing

- Pretraining language models with text-attributed heterogeneous graphs

- Learning on large-scale text-attributed graphs via variational inference

**3.2 Fusion Training of GNNs and LLMs**

- GreaseLM: Graph reasoning enhanced language models for question answering

- Disentangled representation learning with large language models for text-attributed graphs

- Efficient Tuning and Inference for Large Language Models on Textual Graphs

- Can GNN be Good Adapter for LLMs?

**3.3 LLMs Agent for Graphs**

- Don't Generate, Discriminate: A Proposal for Grounding Language Models to Real-World Environments

- Graph Agent: Explicit Reasoning Agent for Graphs

- Middleware for LLMs: Tools Are Instrumental for Language Agents in Complex Environments

- Call Me When Necessary: LLMs can Efficiently and Faithfully Reason over Structured Environments

- Reasoning on graphs: Faithful and interpretable large language model reasoning

Section 4: LLMs-Only

**4.1 Tuning-free**

- Can language models solve graph problems in natural language?

- GPT4Graph: Can large language models understand graph structured data? an empirical evaluation and benchmarking

- Beyond Text: A Deep Dive into Large Language Models’ Ability on Understanding Graph Data

- Exploring the potential of large language models (LLMs) in learning on graphs

- GraphText: Graph reasoning in text space

- Talk like a graph: Encoding graphs for large language models

- LLM4DyG: Can Large Language Models Solve Problems on Dynamic Graphs?

- Which Modality should I use–Text, Motif, or Image?: Understanding Graphs with Large Language Models

- When Graph Data Meets Multimodal: A New Paradigm for Graph Understanding and Reasoning

**4.2 Tuning-required**

- Natural language is all a graph needs

- WalkLM: A uniform language model fine-tuning framework for attributed graph embedding

- LLaGA: Large Language and Graph Assistant

- InstructGraph: Boosting Large Language Models via Graph-centric Instruction Tuning and Preference Alignment

- ZeroG: Investigating Cross-dataset Zero-shot Transferability in Graphs

- GraphWiz: An Instruction-Following Language Model for Graph Problems

- GraphInstruct: Empowering Large Language Models with Graph Understanding and Reasoning Capability

- MuseGraph: Graph-oriented Instruction Tuning of Large Language Models for Generic Graph Mining

Survey

-

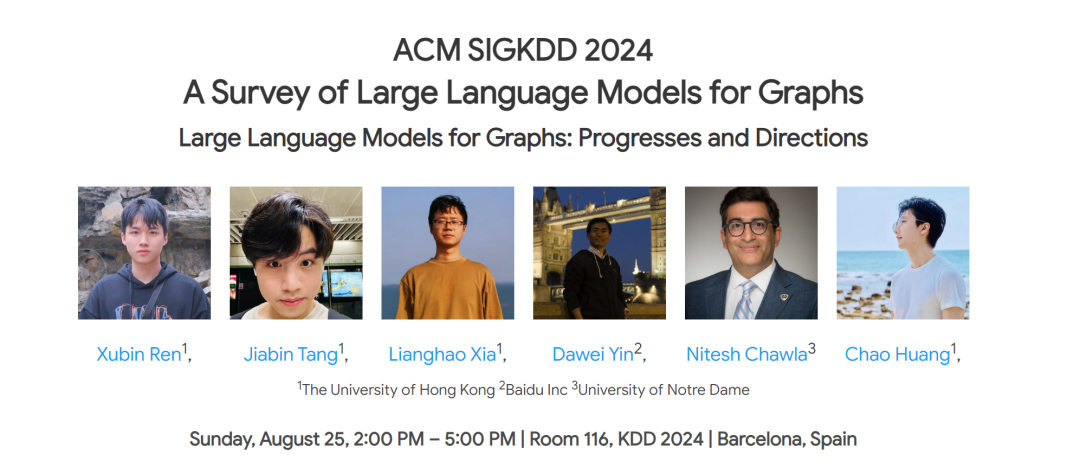

A Survey of Large Language Models for Graphs

-

Large language models on graphs: A comprehensive survey

-

A Survey of Graph Meets Large Language Model: Progress and Future Directions