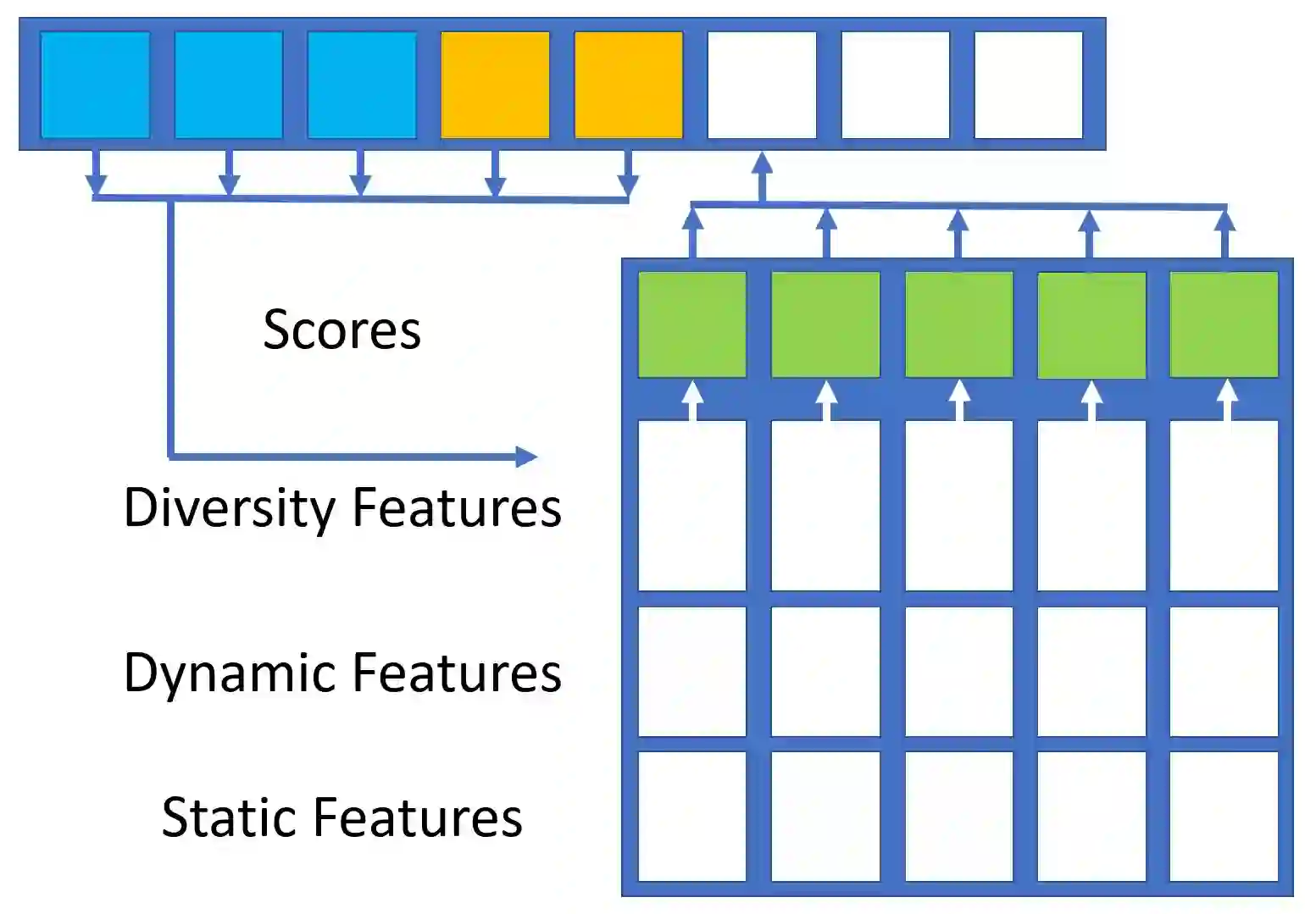

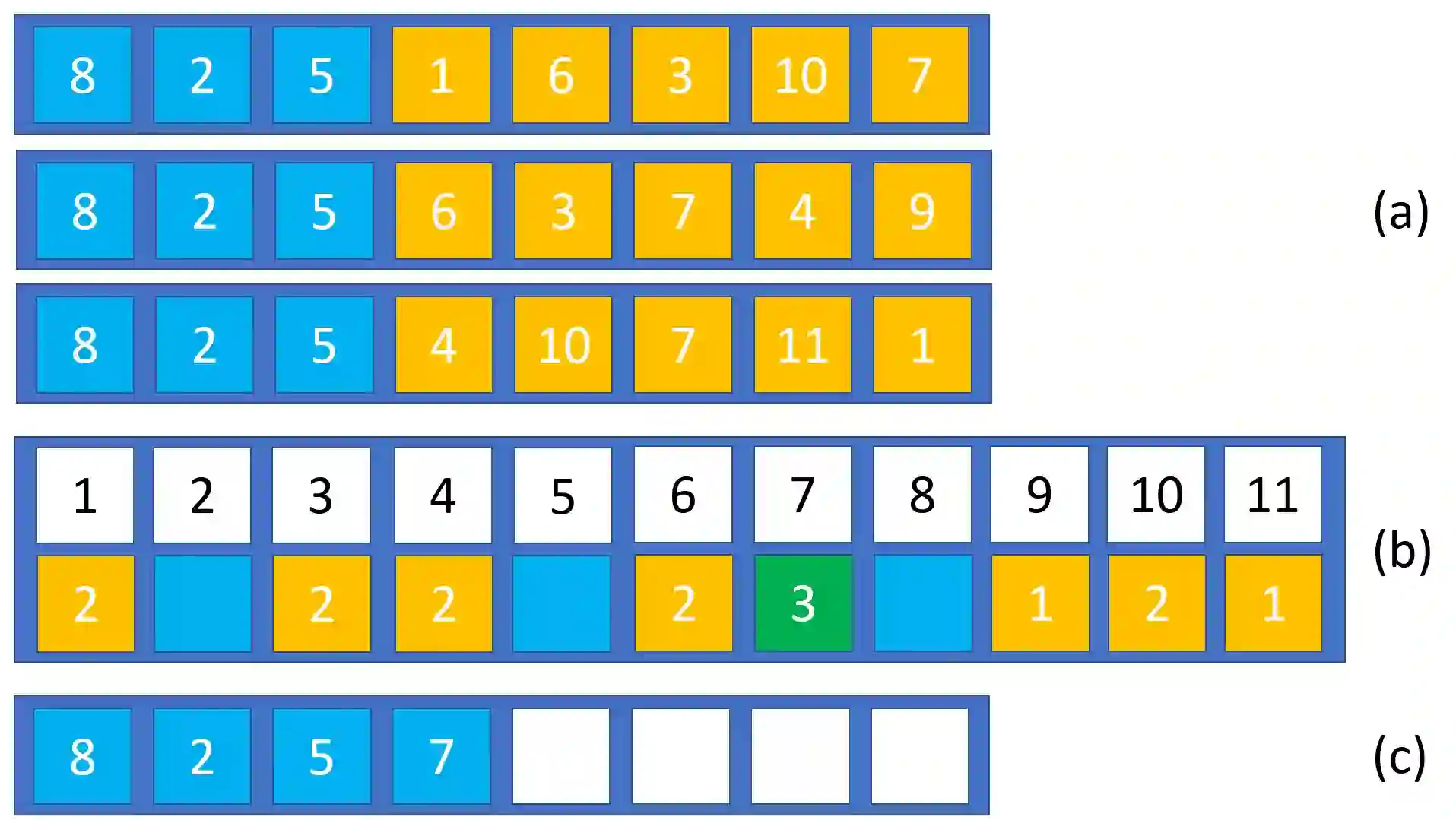

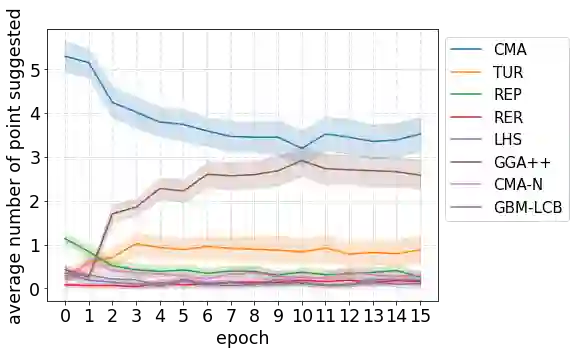

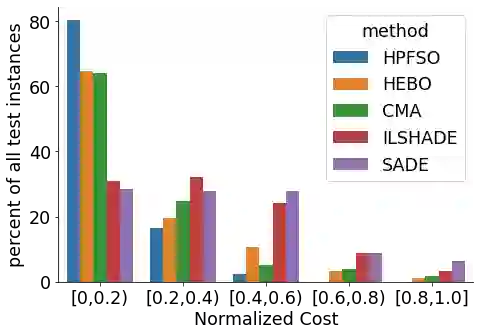

We consider black-box optimization in which only a very small number of function evaluations, on the order of 100, is affordable, and in which these evaluations must be performed in even fewer batches of a limited number of parallel trials. This is a typical scenario when optimizing variable settings that are very costly to evaluate, for example in simulation-based optimization or machine learning hyperparameter tuning. We propose an original method that uses established approaches to propose a set of candidate points for each batch and then down-selects from these candidates to the number of trials that can be run in parallel. The key novelty of our approach is a hyperparameterized method for down-selecting the candidates to the allowed batch size, whose hyperparameters are optimized offline using automated algorithm configuration. We tune this method for black-box optimization and then evaluate it on classical black-box optimization benchmarks. Our results show that it is possible to learn how to combine evaluation points suggested by highly diverse black-box optimization methods, conditioned on the progress of the optimization. Compared with the state of the art in black-box minimization and with various other methods specifically geared towards few-shot minimization, we achieve an average reduction of 50% in normalized cost, a highly significant improvement in performance.
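
The abstract does not specify the form of the down-selection rule, so the following is only a hypothetical illustration of what a hyperparameterized, progress-conditioned down-selection step could look like, not the authors' method. It assumes each candidate is tagged with the name of the optimizer that proposed it, that each source receives a weight interpolated linearly between an "early" and a "late" value as optimization progresses, and that a greedy rule trades that weight off against distance to points already chosen. The names `down_select`, `early_weights`, `late_weights`, `diversity`, and the source labels `"cma"`, `"bo"`, `"random"` are all invented for this sketch; in a setup like the one described, these weights would be the hyperparameters tuned offline by an algorithm configurator.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, ...]


def down_select(
    candidates: Sequence[Tuple[str, Point]],  # (source optimizer name, point) pairs
    batch_size: int,
    progress: float,  # fraction of the evaluation budget already spent, in [0, 1]
    early_weights: Dict[str, float],  # hypothetical per-source weights at progress 0
    late_weights: Dict[str, float],  # hypothetical per-source weights at progress 1
    diversity: float,  # hypothetical bonus per unit distance to the chosen points
) -> List[Point]:
    """Greedily pick up to `batch_size` points from a pooled candidate set."""
    if not 0.0 <= progress <= 1.0:
        raise ValueError("progress must lie in [0, 1]")

    def source_weight(source: str) -> float:
        # Linear interpolation of the source's weight with optimization progress.
        return (1.0 - progress) * early_weights.get(source, 0.0) + progress * late_weights.get(
            source, 0.0
        )

    remaining = list(candidates)
    chosen: List[Point] = []

    def score(item: Tuple[str, Point]) -> float:
        source, x = item
        # Distance to the nearest already-chosen point (0.0 for the first pick).
        nearest = min((math.dist(x, c) for c in chosen), default=0.0)
        return source_weight(source) + diversity * nearest

    while remaining and len(chosen) < batch_size:
        best = max(remaining, key=score)  # ties go to the earliest candidate
        remaining.remove(best)
        chosen.append(best[1])
    return chosen


pool = [
    ("cma", (0.0, 0.0)),
    ("cma", (0.1, 0.0)),
    ("random", (1.0, 1.0)),
    ("bo", (0.5, 0.5)),
]
early = {"random": 1.0, "bo": 0.5, "cma": 0.2}
late = {"cma": 1.0, "bo": 0.5}
print(down_select(pool, 2, progress=0.0, early_weights=early, late_weights=late, diversity=0.5))
# prints [(1.0, 1.0), (0.0, 0.0)]
print(down_select(pool, 2, progress=1.0, early_weights=early, late_weights=late, diversity=0.5))
# prints [(0.0, 0.0), (0.1, 0.0)]
```

With these illustrative weights, the same candidate pool yields an exploratory batch early in the run and a batch concentrated around the local optimizer's proposals late in the run, which is the kind of progress-dependent combination of diverse optimizers the abstract describes.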

翻译:我们考虑的是黑箱优化,在这种优化中,只有数量极有限的功能评价(大约100个左右)才能负担得起,而功能评价必须在数量有限的平行试验中以更少的批量进行,而功能评价则必须在更少的批量中进行。这是最优化非常昂贵的可变设置的典型情景,这些可变设置对评估费用非常高,例如模拟优化或机器学习超光度计。我们建议了一种原始方法,使用既定方法为每个批次提出一组点,然后从这些候选者中下调选择,这指向可以平行运行的试验次数。我们方法的关键新颖之处在于采用一种超分光化方法,将候选人人数从允许的批量中下选,而允许的批量则使用自动算式配置优化。我们调整这种黑盒优化方法,然后对经典黑盒优化基准进行评估。我们的结果显示,可以学习如何将高度多样化黑盒优化方法所建议的评价点与优化的进展结合起来。与黑盒中最短化的艺术状态和专门为微最小化而专门调整的其他方法相比,我们实现了平均的正常性地降低50个成本。