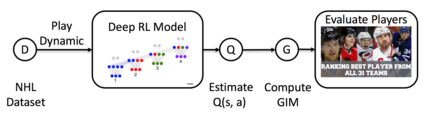

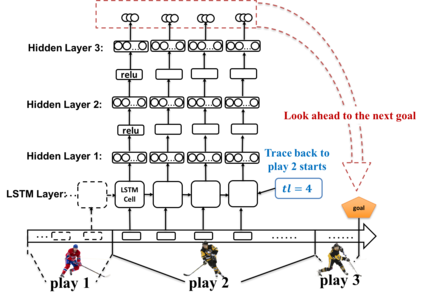

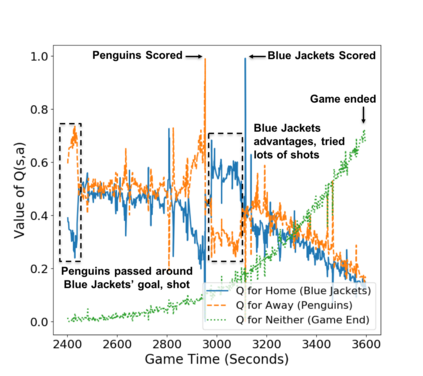

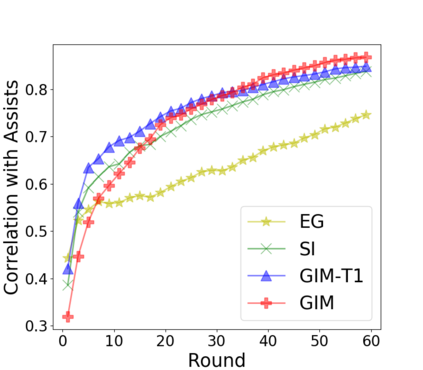

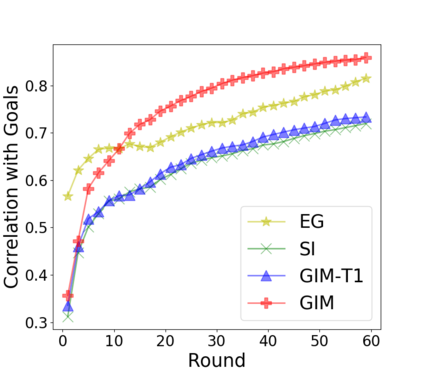

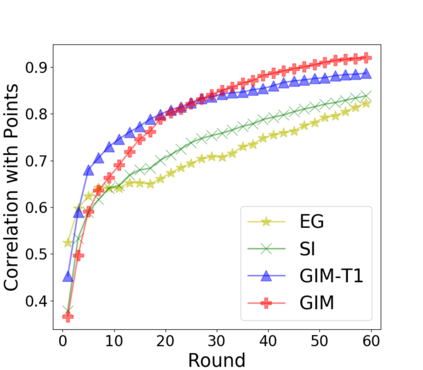

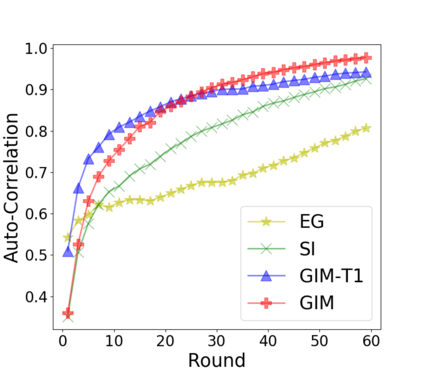

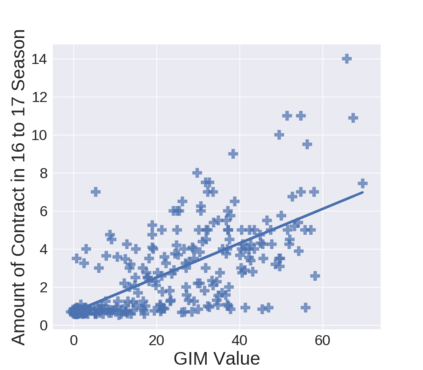

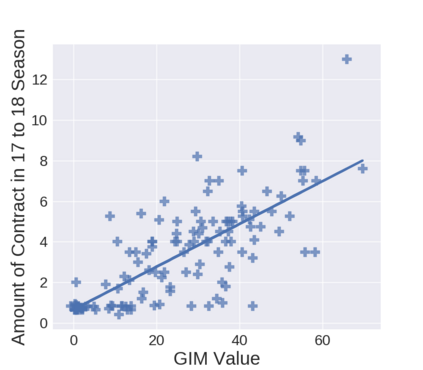

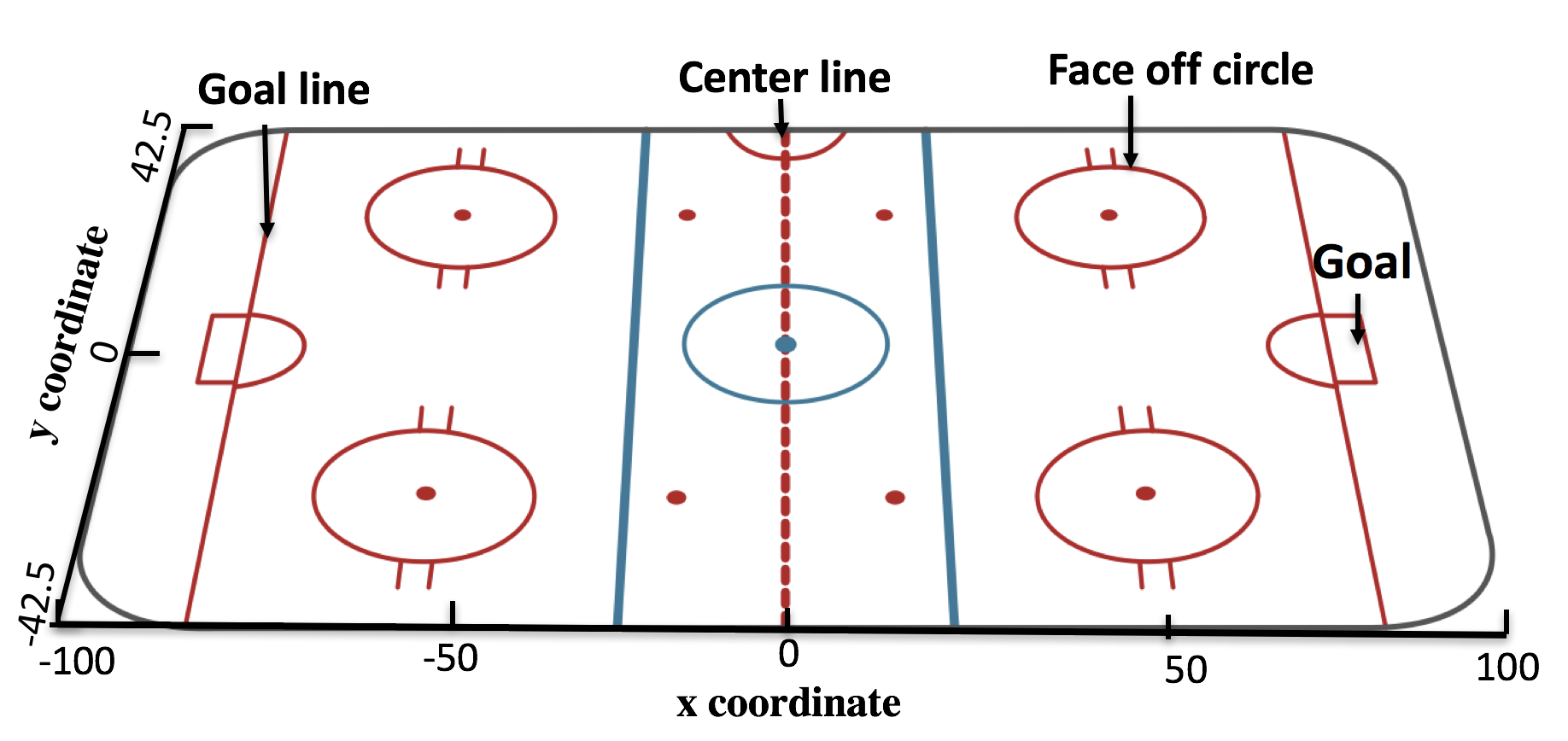

A variety of machine learning models have been proposed to assess the performance of players in professional sports. However, they have only a limited ability to model how player performance depends on the game context. This paper proposes a new approach to capturing game context: we apply Deep Reinforcement Learning (DRL) to learn an action-value Q-function from 3 million play-by-play events in the National Hockey League (NHL). The neural network representation integrates both continuous context signals and game history, using a possession-based LSTM. The learned Q-function is used to value players' actions under different game contexts. To assess a player's overall performance, we introduce a novel Game Impact Metric (GIM) that aggregates the values of the player's actions. Empirical evaluation shows that GIM is consistent throughout a season and correlates highly with standard success measures and future salary.
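The abstract does not spell out how action values are aggregated into GIM. The Python sketch below is a minimal illustration under stated assumptions, not the authors' implementation. It assumes that a trained Q-function has already produced, for every event, one Q-value per team (for example, an estimate of that team's chance of scoring next). It also assumes that an action's impact is the change in the acting team's Q-value relative to the previous event, and that GIM is the sum of a player's impacts. The `Event` schema and `game_impact_metric` function are hypothetical names. The first event in a sequence has no predecessor, so the sketch gives it zero impact.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Event:
    """One play-by-play event (hypothetical schema, for illustration only)."""
    player: str          # player who performed the action
    team: str            # that player's team
    q: Dict[str, float]  # assumed: learned Q-value per team after this action


def game_impact_metric(events: List[Event]) -> Dict[str, float]:
    """Sum each player's action impacts over an ordered sequence of events.

    Assumption: impact = acting team's Q-value at this event minus that
    team's Q-value at the previous event. The first event has no
    predecessor and is assigned an impact of 0.
    """
    gim: Dict[str, float] = defaultdict(float)
    if events:
        gim[events[0].player] += 0.0  # first event: no predecessor, impact 0
    for prev, curr in zip(events, events[1:]):
        impact = curr.q[curr.team] - prev.q[curr.team]
        gim[curr.player] += impact
    return dict(gim)


# Toy sequence with made-up Q-values (not real data).
example = [
    Event("A", "home", {"home": 0.50, "away": 0.45}),
    Event("B", "away", {"home": 0.48, "away": 0.47}),
    Event("A", "home", {"home": 0.55, "away": 0.40}),
]
print(game_impact_metric(example))
```

In this toy sequence, player B's action raises the away team's value from 0.45 to 0.47, an impact of roughly 0.02. Player A's second action raises the home team's value from 0.48 to 0.55, roughly 0.07. Measuring each action as a change in value credits players for what their actions add in context, rather than for the absolute state they happen to act in.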

翻译:为了评估职业体育运动员的成绩,提出了各种机器学习模式,但是,他们模拟运动员表现如何取决于游戏背景的能力有限。本文提出了一种捕捉游戏背景的新方法:我们应用深强化学习(DRL)从国家曲棍球联盟(NHL)的3M游戏活动中学习一个行动价值Q函数。神经网络代表将连续的背景信号和游戏历史结合起来,使用基于拥有的LSTM。学习的Q功能被用于评价球员在不同游戏背景下的行动。为了评估球员的总体表现,我们引入了一个新的游戏影响计量(GIM),汇总球员行动的价值。经验评估显示,球在整个游戏季节里,GIM与标准的成功措施和未来工资密切相关。