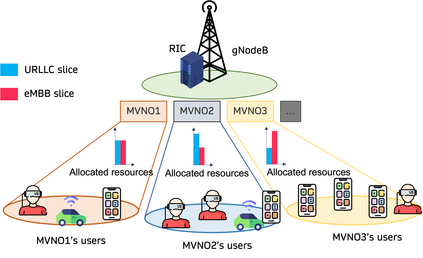

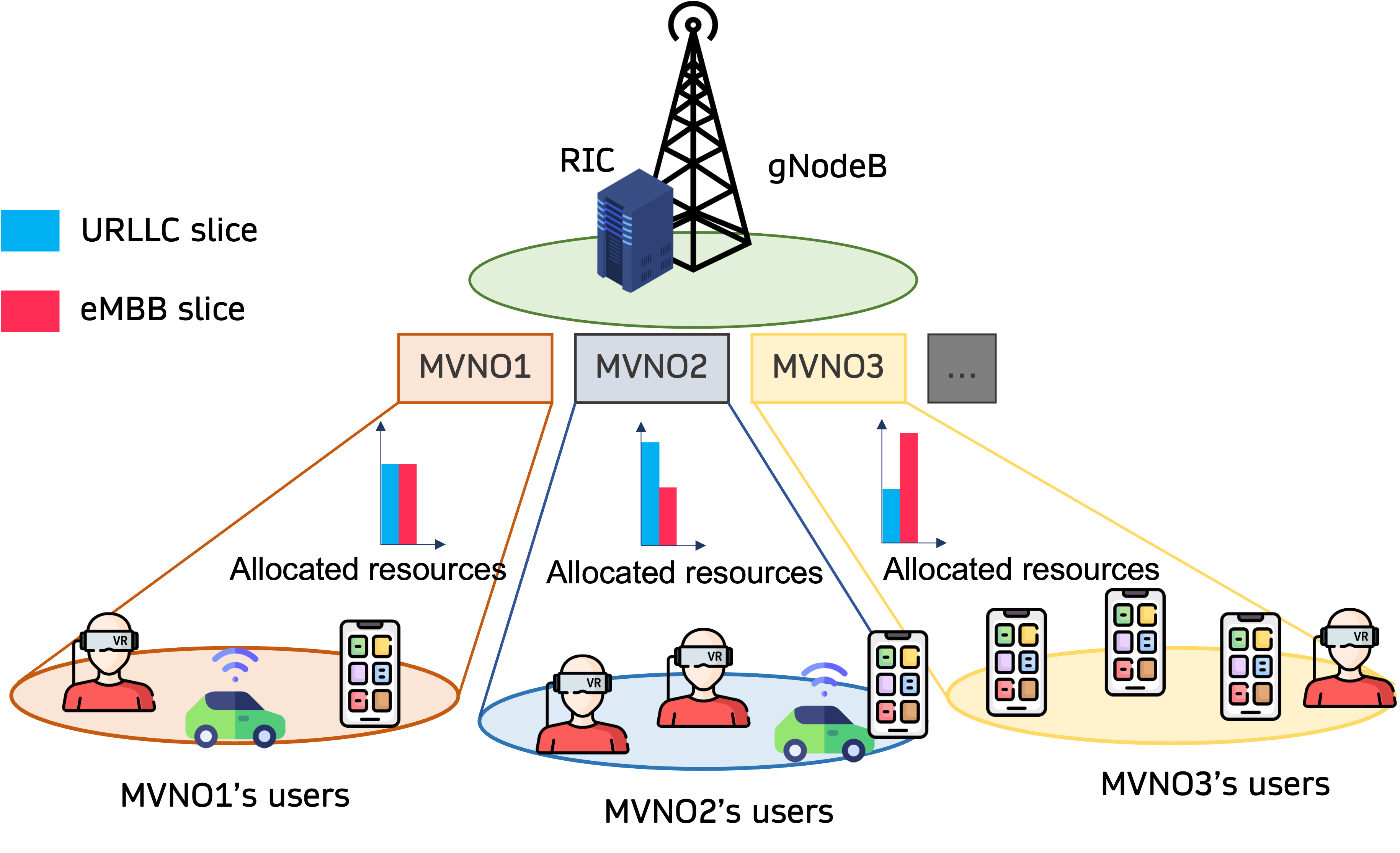

Radio access network (RAN) slicing is a key element in enabling current 5G and next-generation networks to meet the requirements of different services in various verticals. However, the heterogeneous requirements of these services, along with limited RAN resources, make RAN slicing very complex. Indeed, the challenge that mobile virtual network operators (MVNOs) face is to rapidly adapt their RAN slicing strategies to frequent changes in environmental constraints and service requirements. Machine learning techniques, such as deep reinforcement learning (DRL), are increasingly considered a key enabler for automating the management and orchestration of RAN slicing operations. Nevertheless, the ability of DRL models to generalize across multiple RAN slicing environments may be limited because of their strong dependence on the environment data on which they are trained. Federated learning enables MVNOs to leverage more diverse training inputs for DRL without the high cost of collecting this data from different RANs. In this paper, we propose a federated deep reinforcement learning approach for RAN slicing. In this approach, MVNOs collaborate to improve the performance of their DRL-based RAN slicing models. Each MVNO trains a DRL model and sends it for aggregation. The aggregated model is then sent back to each MVNO for immediate use and further training. Simulation results show the effectiveness of the proposed federated DRL approach.
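The collaborative loop described above (each MVNO trains locally, the models are aggregated, and the aggregate is sent back for use and further training) can be sketched as follows. This is a minimal illustration under assumptions that do not come from the paper: a hypothetical two-slice environment with 4 shared resource blocks (RBs) and discretized demand levels, a tabular Q-learning agent standing in for the DRL model, and FedAvg-style aggregation weighted by the number of local samples. The discount factor is set to 0 because, in this toy environment, demand evolves independently of the allocation, so the agent reduces to a one-step (contextual-bandit) learner. All names (`MVNO`, `fed_avg`, `federated_training`, `reward`, etc.) are hypothetical.

```python
import copy
import random
from typing import Dict, List, Tuple

# Hypothetical toy RAN slicing environment (an assumption, not the paper's simulator):
# two slices share TOTAL_RBS resource blocks; a demand level d in {0, 1, 2} needs d + 1 RBs.
TOTAL_RBS = 4
LOAD_LEVELS = 3
ACTIONS = list(range(TOTAL_RBS + 1))  # action a: a RBs to slice 0, TOTAL_RBS - a to slice 1
ALPHA, EPSILON = 0.1, 0.2
GAMMA = 0.0  # demand here does not depend on the allocation, so a one-step target suffices

State = Tuple[int, int]
QTable = Dict[State, List[float]]


def new_q_table() -> QTable:
    return {(d0, d1): [0.0] * len(ACTIONS)
            for d0 in range(LOAD_LEVELS) for d1 in range(LOAD_LEVELS)}


def reward(state: State, action: int) -> float:
    """Negative unmet demand, in RBs, summed over both slices."""
    need0, need1 = state[0] + 1, state[1] + 1
    unmet = max(0, need0 - action) + max(0, need1 - (TOTAL_RBS - action))
    return -float(unmet)


def greedy(q: QTable, state: State) -> int:
    values = q[state]
    return values.index(max(values))  # ties go to the lowest action index


class MVNO:
    """One operator: private demand statistics, a local Q-table, local training."""

    def __init__(self, probs0: List[float], probs1: List[float], seed: int) -> None:
        self.probs0, self.probs1 = probs0, probs1
        self.rng = random.Random(seed)
        self.q = new_q_table()

    def sample_state(self) -> State:
        levels = range(LOAD_LEVELS)
        return (self.rng.choices(levels, self.probs0)[0],
                self.rng.choices(levels, self.probs1)[0])

    def train(self, steps: int) -> int:
        """Epsilon-greedy Q-learning on local data; returns the number of samples used."""
        state = self.sample_state()
        for _ in range(steps):
            if self.rng.random() < EPSILON:
                action = self.rng.choice(ACTIONS)
            else:
                action = greedy(self.q, state)
            next_state = self.sample_state()
            target = reward(state, action) + GAMMA * max(self.q[next_state])
            self.q[state][action] += ALPHA * (target - self.q[state][action])
            state = next_state
        return steps


def fed_avg(tables: List[QTable], weights: List[int]) -> QTable:
    """FedAvg-style aggregation: sample-weighted average of the local Q-tables."""
    total = sum(weights)
    return {s: [sum(w * t[s][a] for t, w in zip(tables, weights)) / total
                for a in range(len(ACTIONS))]
            for s in tables[0]}


def federated_training(mvnos: List[MVNO], rounds: int, local_steps: int) -> QTable:
    global_q = new_q_table()
    for _ in range(rounds):
        weights = [m.train(local_steps) for m in mvnos]   # 1) each MVNO trains locally
        global_q = fed_avg([m.q for m in mvnos], weights)  # 2) models are aggregated
        for m in mvnos:                                    # 3) aggregate is sent back
            m.q = copy.deepcopy(global_q)
    return global_q


if __name__ == "__main__":
    operator_a = MVNO([0.1, 0.3, 0.6], [0.6, 0.3, 0.1], seed=1)  # slice 0 usually busy
    operator_b = MVNO([0.6, 0.3, 0.1], [0.1, 0.3, 0.6], seed=2)  # slice 1 usually busy
    global_q = federated_training([operator_a, operator_b], rounds=5, local_steps=2000)
    print(greedy(global_q, (2, 0)), greedy(global_q, (0, 2)))  # expected: 3 1
```

In this sketch the two operators see mirrored demand distributions, so each one only occasionally observes the other's typical busy-slice states; after aggregation, both receive a table whose greedy policy picks the unmet-demand-minimizing split for both (2, 0) and (0, 2). With a neural-network DRL agent, the same loop would average network parameters instead of Q-table entries.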
