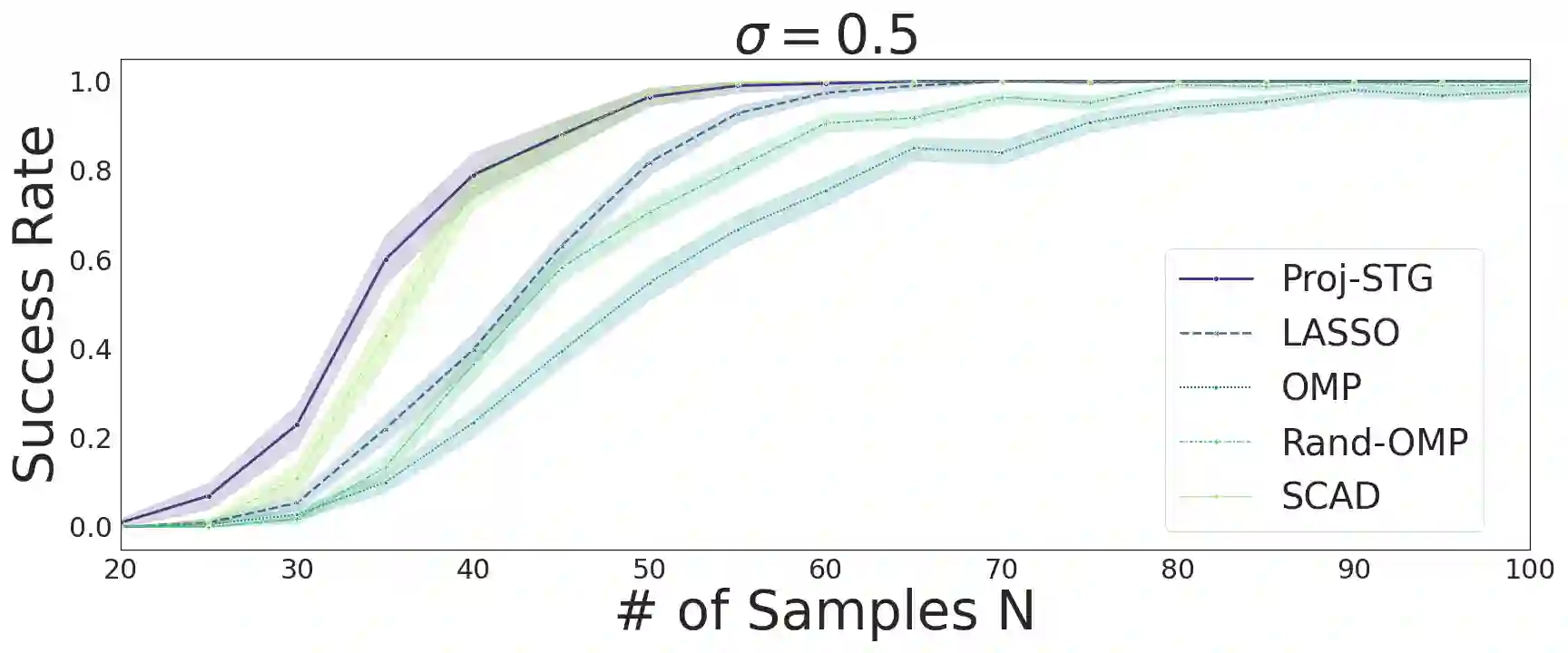

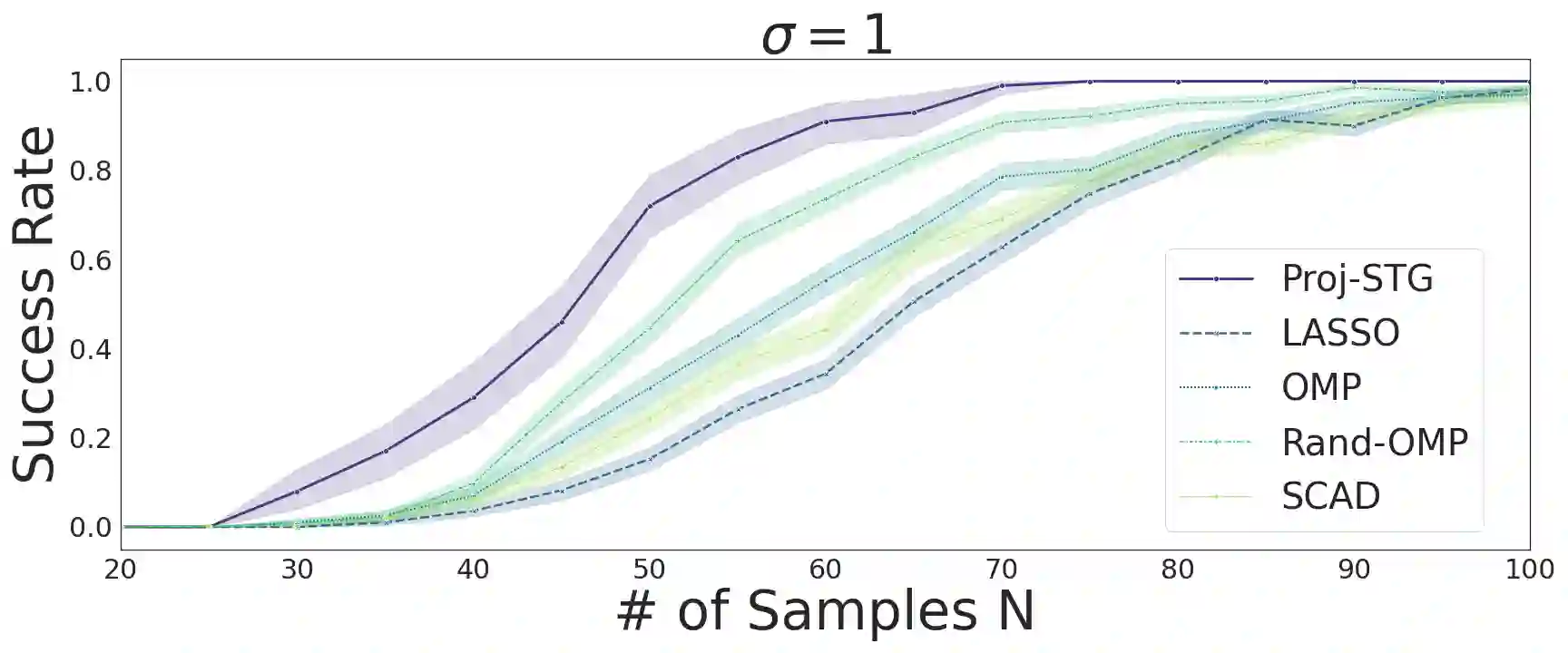

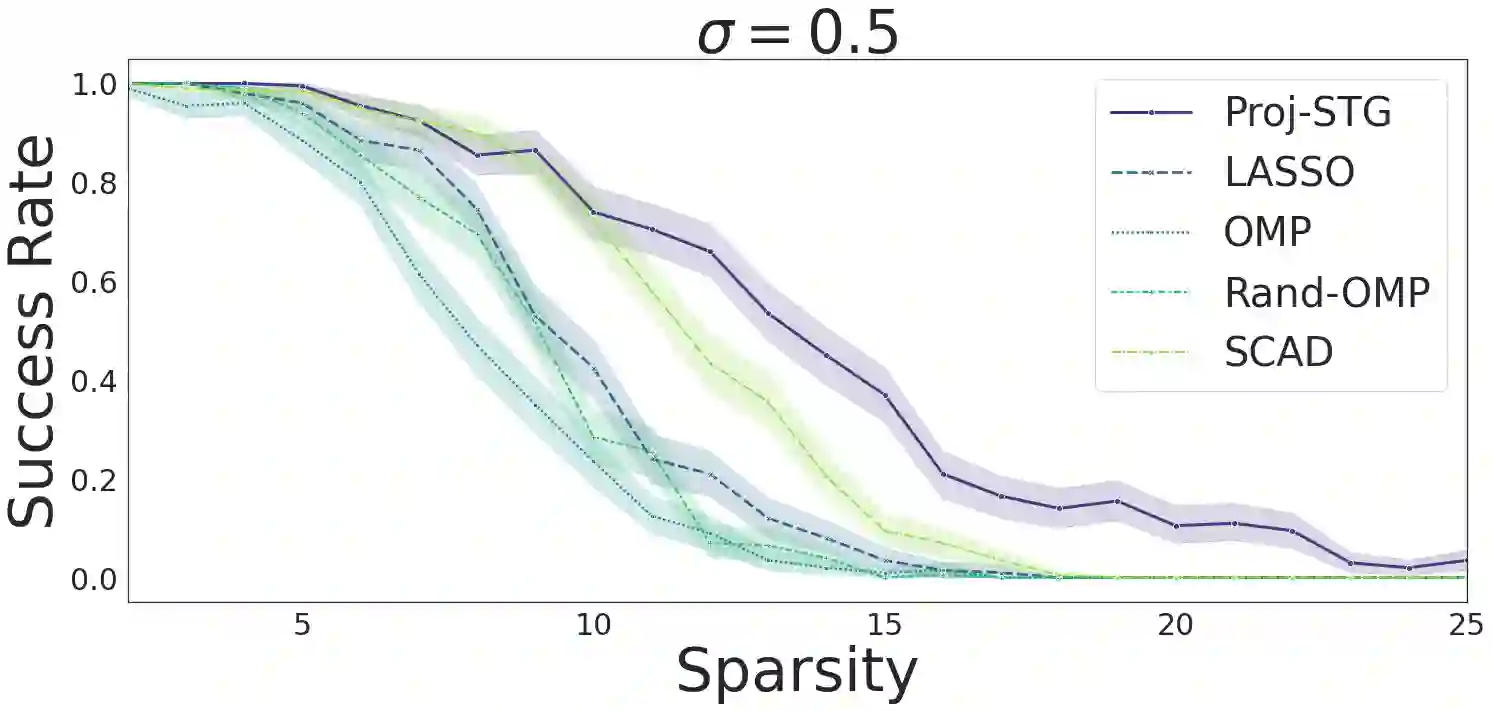

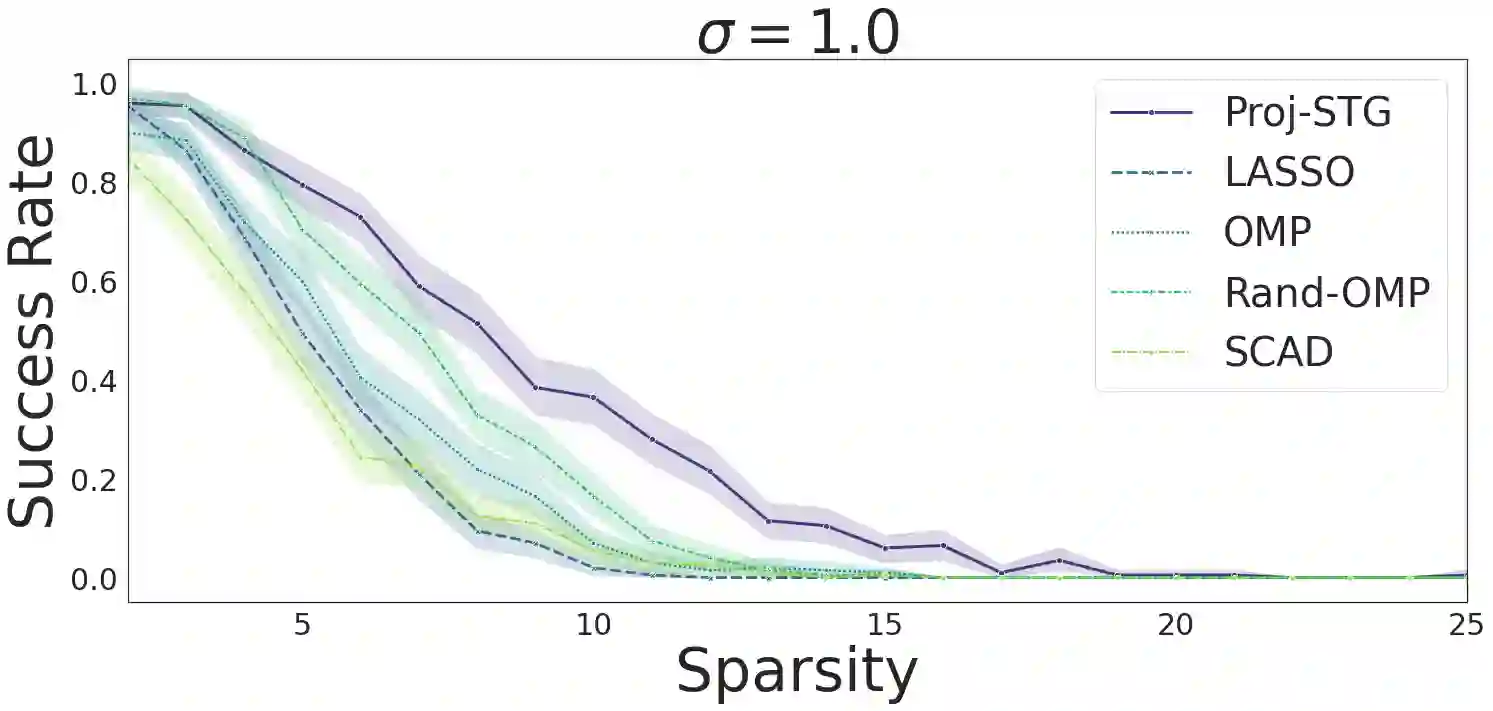

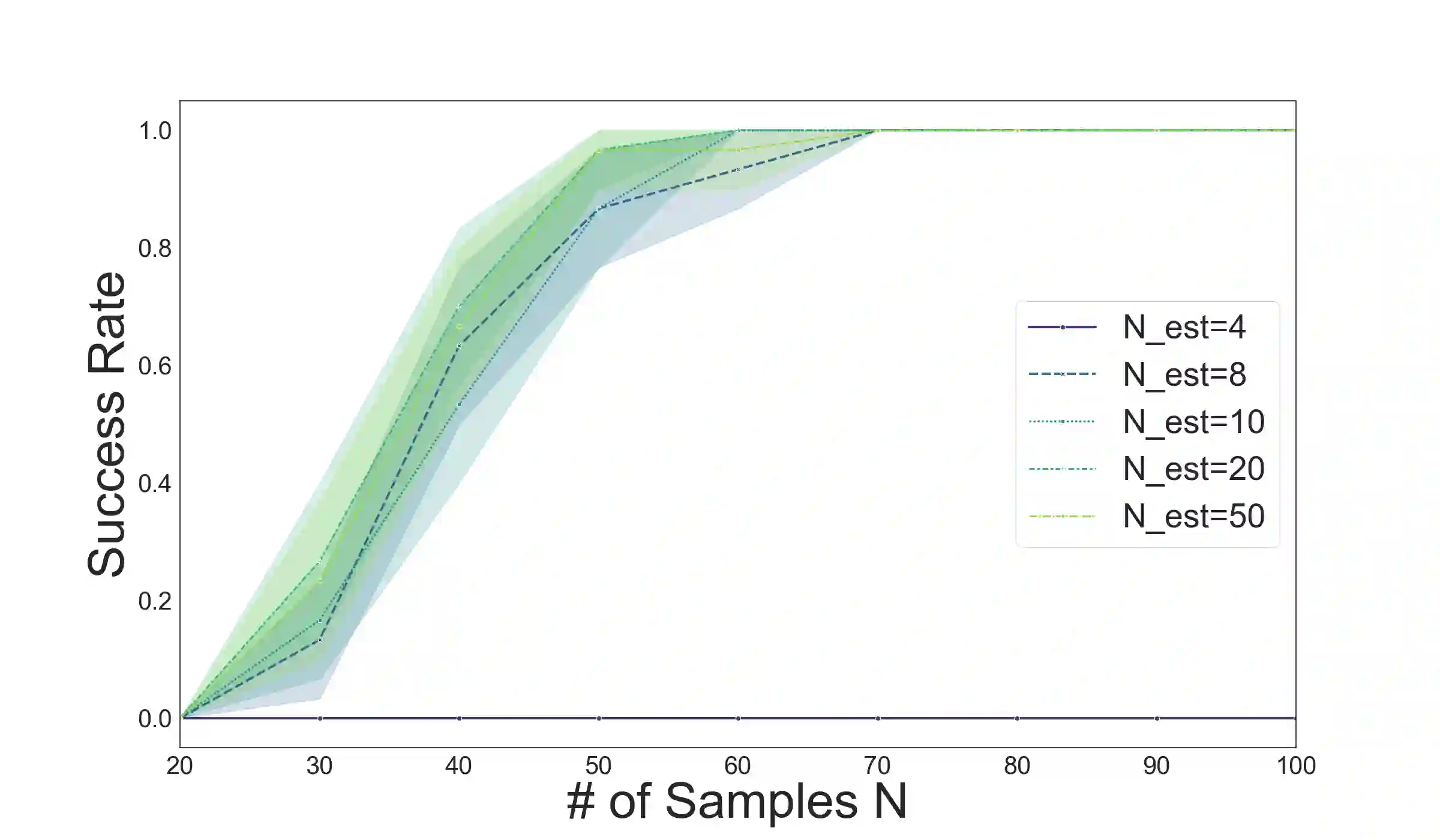

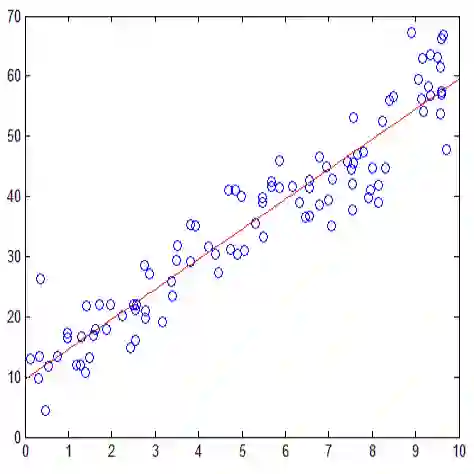

We analyze the problem of simultaneous support recovery and estimation of the coefficient vector $\beta^*$ in a linear model with independent and identically distributed normal errors. To estimate the coefficients, we apply the penalized least-squares estimator based on the non-linear penalties of stochastic gates (STG) [YLNK20]. For Gaussian design matrices, we show that, under reasonable conditions on the dimension and sparsity of $\beta^*$, the STG-based estimator converges to the true data-generating coefficient vector and also detects its support set with high probability. We propose a new projection-based algorithm for the linear model setting that improves upon the existing STG estimator, which was originally designed for general non-linear models. In synthetic-data experiments, our new procedure outperforms many classical estimators for support recovery.
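The abstract does not write out the STG objective. As a minimal sketch, assuming the stochastic-gate relaxation of [YLNK20] is applied to a linear model, each coefficient $\theta_j$ is multiplied by a gate $z_j = \min(1, \max(0, \mu_j + \epsilon_j))$ with $\epsilon_j \sim N(0, \sigma^2)$. The sketch then minimizes the expected squared error plus $\lambda \sum_j \Phi(\mu_j/\sigma)$, the expected number of open gates, by stochastic gradient descent. The function name `stg_linear_regression`, the hyperparameter defaults, and the plain-SGD optimizer are hypothetical illustrative choices, not the paper's. The sketch also does not implement the proposed projection-based algorithm.

```python
import numpy as np


def stg_linear_regression(X, y, lam=0.2, sigma=0.5, lr=0.1, n_iter=3000, seed=0):
    """Hypothetical sketch: STG-penalized least squares for y ~ X @ beta.

    Runs single-sample stochastic gradient descent on
        E_eps[ ||y - X @ (theta * z)||^2 / (2n) ] + lam * sum_j Phi(mu_j / sigma),
    with gates z_j = clip(mu_j + eps_j, 0, 1) and eps_j ~ N(0, sigma^2).
    All hyperparameter defaults are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    n, p = X.shape
    theta = np.zeros(p)      # coefficient parameters
    mu = np.full(p, 0.5)     # gate means: every gate starts half open
    for _ in range(n_iter):
        pre = mu + rng.normal(0.0, sigma, size=p)
        z = np.clip(pre, 0.0, 1.0)           # sampled stochastic gates
        r = y - X @ (theta * z)              # residual for this gate sample
        g = -(X.T @ r) / n                   # gradient w.r.t. the gated coefficients theta * z
        grad_theta = g * z
        # Reparameterization trick: dz_j/dmu_j = 1 only where the clip is inactive.
        grad_mu = g * theta * ((pre > 0.0) & (pre < 1.0))
        # Gradient of lam * Phi(mu / sigma) is lam * phi(mu / sigma) / sigma.
        grad_mu = grad_mu + lam * np.exp(-0.5 * (mu / sigma) ** 2) / (np.sqrt(2.0 * np.pi) * sigma)
        theta -= lr * grad_theta
        mu -= lr * grad_mu
    support = np.flatnonzero(mu > 0.0)        # gates that stay open without noise
    beta_hat = theta * np.clip(mu, 0.0, 1.0)  # noise-free gates at estimation time
    return beta_hat, support


# Synthetic check: Gaussian design, 3-sparse beta_star, i.i.d. normal errors.
rng = np.random.default_rng(1)
n, p = 300, 20
beta_star = np.zeros(p)
beta_star[:3] = [3.0, -2.0, 1.5]
X = rng.standard_normal((n, p))
y = X @ beta_star + 0.5 * rng.standard_normal(n)

beta_hat, support = stg_linear_regression(X, y)
print("estimated support:", support)
print("max abs error:", np.max(np.abs(beta_hat - beta_star)))
```

The gate noise is dropped at estimation time, so a coefficient is set exactly to zero whenever its gate mean $\mu_j$ ends up non-positive. This is what lets a single fit return both a coefficient estimate and a support set.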
