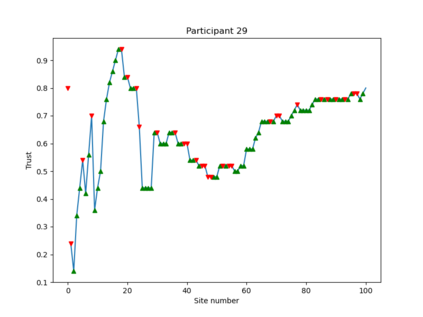

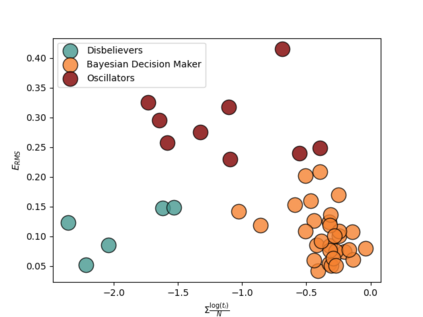

In this paper, we present a framework for trust-aware sequential decision-making in a human-robot team. We model the problem as a finite-horizon Markov Decision Process with a reward-based performance metric, which allows the robotic agent to make trust-aware recommendations. Results of a human-subject experiment show that the proposed trust update model accurately captures the human agent's moment-to-moment trust changes. Moreover, we cluster the participants' trust dynamics into three categories (Bayesian decision makers, oscillators, and disbelievers) and identify personal characteristics that could be used to predict which type of trust dynamics a person will exhibit. We find that, compared to the Bayesian decision makers and oscillators, the disbelievers are less extroverted, less agreeable, and have lower expectations toward the robotic agent. The oscillators are significantly more frustrated than the Bayesian decision makers.

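For readers unfamiliar with the finite-horizon Markov Decision Process mentioned in the abstract, the following is a minimal sketch of how such a problem can be solved by backward induction. It is a generic textbook illustration, not the authors' model: the two states (`low`, `high`), the two actions (`safe`, `risky`), the transition probabilities, the rewards, and the function name `backward_induction` are all hypothetical and chosen only to keep the example small.

```python
from typing import Dict, List, Tuple

# Hypothetical toy problem: every state, action, probability and reward below
# is an illustrative assumption and is NOT taken from the paper.
State = str
Action = str
# P[s][a] is a list of (next_state, probability) pairs.
Transitions = Dict[State, Dict[Action, List[Tuple[State, float]]]]
# R[s][a] is the immediate reward for taking action a in state s.
Rewards = Dict[State, Dict[Action, float]]


def backward_induction(P: Transitions, R: Rewards, horizon: int):
    """Solve a finite-horizon MDP by dynamic programming.

    Returns (V, policy), where V[t][s] is the optimal expected total reward
    collected from step t onward when starting in state s, and policy[t][s]
    is an action achieving that value at step t.
    """
    states = list(P)
    # V[horizon] is the terminal value, assumed to be zero everywhere.
    V = [{s: 0.0 for s in states} for _ in range(horizon + 1)]
    policy: List[Dict[State, Action]] = [{} for _ in range(horizon)]

    # Sweep backward from the last decision step to the first.
    for t in range(horizon - 1, -1, -1):
        for s in states:
            best_action, best_value = None, float("-inf")
            for a, outcomes in P[s].items():
                # Immediate reward plus expected value of the next step.
                q = R[s][a] + sum(p * V[t + 1][s2] for s2, p in outcomes)
                if q > best_value:
                    best_action, best_value = a, q
            V[t][s] = best_value
            policy[t][s] = best_action
    return V, policy


P: Transitions = {
    "low": {"safe": [("high", 0.5), ("low", 0.5)], "risky": [("low", 1.0)]},
    "high": {"safe": [("high", 1.0)], "risky": [("high", 0.5), ("low", 0.5)]},
}
R: Rewards = {
    "low": {"safe": 0.0, "risky": 0.5},
    "high": {"safe": 1.0, "risky": 2.0},
}

V, policy = backward_induction(P, R, horizon=2)
print(policy[0], policy[1])
```

In this toy instance the best action in state `low` depends on the step: with two steps remaining it pays to invest in reaching `high`, while at the last step only the immediate reward matters. This time dependence is the main feature that distinguishes a finite-horizon policy from a stationary infinite-horizon one.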