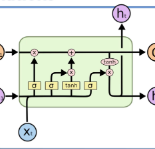

This paper presents a Bayesian multilingual topic model for learning language-independent document embeddings. Our model represents each document as a Gaussian distribution, thereby encoding the uncertainty of the embedding in its covariance. We propagate the learned uncertainties through linear classifiers for zero-shot cross-lingual topic identification. Our experiments on the five-language Europarl and Reuters (MLDoc) corpora show that the proposed model outperforms systems based on multilingual word embeddings and BiLSTM sentence encoders by significant margins in the majority of the transfer directions. Moreover, our system, trained in under a day on a single GPU with much less data, performs competitively with the state-of-the-art universal BiLSTM sentence encoder trained on 93 languages. Our experimental analysis shows that increasing the amount of parallel data improves the overall performance of the embeddings. Nonetheless, exploiting the uncertainties is always beneficial.
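The abstract does not say exactly how the uncertainty is propagated through a linear classifier. A minimal sketch of one standard approach, which is not necessarily the authors' procedure, assumes a multiclass logistic-regression classifier with hypothetical weights `W` and bias `b`, and a document embedding x ~ N(μ, Σ). The logits W x + b are then Gaussian with mean Wμ + b and per-class variance w_kᵀ Σ w_k. The expected softmax is approximated by scaling each logit mean by 1/√(1 + πσ²/8), the probit-style approximation that is derived for the binary sigmoid and commonly reused per class.

```python
import numpy as np


def propagate_linear(mu, cov, W, b):
    """Push a Gaussian N(mu, cov) through the linear map z = W x + b.

    Returns the mean of each logit and its variance w_k^T cov w_k.
    """
    z_mean = W @ mu + b
    # Sum over d, e of W[k, d] * cov[d, e] * W[k, e], i.e. w_k^T cov w_k per row k.
    z_var = np.einsum("kd,de,ke->k", W, cov, W)
    return z_mean, z_var


def softmax(a):
    a = a - a.max()  # subtract the max for numerical stability
    e = np.exp(a)
    return e / e.sum()


def predict_with_uncertainty(mu, cov, W, b):
    """Approximate E[softmax(W x + b)] for x ~ N(mu, cov).

    Assumption: each logit mean is shrunk by 1 / sqrt(1 + pi * var / 8),
    the probit-style approximation (exact derivation only for the binary case).
    """
    z_mean, z_var = propagate_linear(mu, cov, W, b)
    scaled = z_mean / np.sqrt(1.0 + np.pi * z_var / 8.0)
    return softmax(scaled)


# Toy example with hypothetical values: 2-D embeddings, 3 classes,
# unit-norm weight rows so every logit receives the same variance.
W = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
b = np.zeros(3)
mu = np.array([2.0, 0.0])
p_confident = predict_with_uncertainty(mu, 0.01 * np.eye(2), W, b)
p_uncertain = predict_with_uncertainty(mu, 10.0 * np.eye(2), W, b)
```

Both documents share the same mean embedding, so both predict class 0. The one with the larger covariance gets a flatter class posterior: equal logit variances shrink all logits by the same factor, which keeps the classifier from committing to a label that an uncertain embedding cannot support.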
