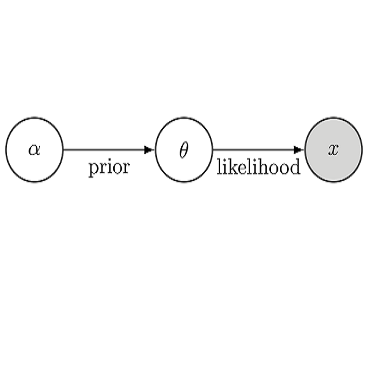

Bayesian experimental design (BED) has been used as a method for conducting efficient experiments based on Bayesian inference. Existing methods, however, mostly focus on maximizing the expected information gain (EIG) and often do not take the cost of experiments or sample efficiency into account. To address this issue and improve the practical applicability of BED, we propose a new approach, Sequential Experimental Design via Reinforcement Learning, which constructs BED in a sequential manner using reinforcement learning. Reinforcement learning is a branch of machine learning in which an agent learns a policy that maximizes its reward by interacting with an environment. This interaction with an environment closely resembles a sequential experiment, and reinforcement learning is well suited to sequential decision making. By introducing a new, real-world-oriented experimental environment, our approach aims to maximize the EIG while simultaneously accounting for the cost of experiments and sample efficiency. We conduct numerical experiments on three different examples. The results confirm that our method outperforms existing methods on various metrics, such as the EIG and sample efficiency, indicating that the proposed method and experimental environment can make a significant contribution to applying BED in the real world.
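To make the EIG objective concrete, the sketch below estimates $\mathrm{EIG}(d) = \mathbb{E}_{p(\theta)p(y \mid \theta, d)}\left[\log p(y \mid \theta, d) - \log p(y \mid d)\right]$ with the standard nested Monte Carlo estimator. It is an illustrative sketch only, not the method proposed here: the toy linear-Gaussian model ($\theta \sim \mathcal{N}(0, 1)$, $y \mid \theta, d \sim \mathcal{N}(d\,\theta, \sigma^2)$), the noise level `sigma`, the sample sizes, and all function names are assumptions chosen so that the estimate can be checked against the closed form $\tfrac{1}{2}\log(1 + d^2/\sigma^2)$.

```python
import math
import random


def log_likelihood(y, theta, d, sigma):
    """Log density of y ~ N(d * theta, sigma^2) in the assumed toy model."""
    mean = d * theta
    return -0.5 * math.log(2 * math.pi * sigma**2) - (y - mean) ** 2 / (2 * sigma**2)


def log_mean_exp(values):
    """Numerically stable log of the mean of exp(values)."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values) / len(values))


def nmc_eig(d, sigma=1.0, n_outer=1000, n_inner=200, seed=0):
    """Nested Monte Carlo estimate of EIG(d) for the assumed prior theta ~ N(0, 1)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_outer):
        theta = rng.gauss(0.0, 1.0)        # draw a parameter from the prior
        y = rng.gauss(d * theta, sigma)    # simulate the experiment outcome
        log_lik = log_likelihood(y, theta, d, sigma)
        # Estimate the marginal log p(y | d) with fresh prior samples (inner loop).
        inner = [log_likelihood(y, rng.gauss(0.0, 1.0), d, sigma) for _ in range(n_inner)]
        total += log_lik - log_mean_exp(inner)
    return total / n_outer


def exact_eig(d, sigma=1.0):
    """Closed-form EIG of the linear-Gaussian toy model, used as a reference."""
    return 0.5 * math.log(1.0 + d**2 / sigma**2)


if __name__ == "__main__":
    for d in (0.0, 0.5, 2.0):
        print(f"d={d}: NMC={nmc_eig(d):.3f}, exact={exact_eig(d):.3f}")
```

A static, EIG-only design method would simply choose the `d` with the largest estimate. The sequential setting described above instead chooses designs one at a time as data arrive, and the proposed approach additionally weighs experimental cost and sample efficiency, which this toy estimator does not model.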
