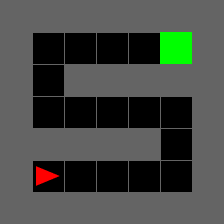

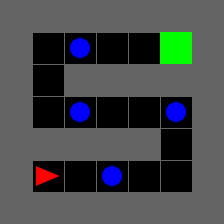

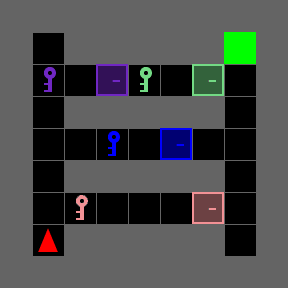

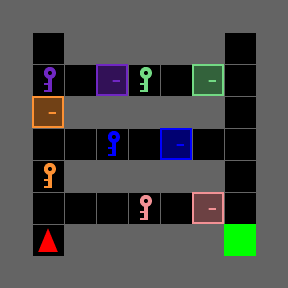

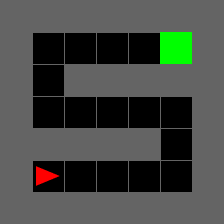

Many hierarchical reinforcement learning (RL) applications have empirically verified that incorporating prior knowledge into reward design improves convergence speed and practical performance. We attempt to quantify the computational benefits of hierarchical RL from a planning perspective, under assumptions about intermediate states and intermediate rewards that are frequently (but often implicitly) adopted in practice. Our approach reveals a trade-off between computational complexity and the pursuit of the shortest path in hierarchical planning: using intermediate rewards significantly reduces the computational complexity of finding a successful policy but does not guarantee finding the shortest path, whereas using sparse terminal rewards finds the shortest path at a significantly higher computational cost. We also corroborate our theoretical results with extensive experiments in the MiniGrid environments using Q-learning and other popular deep RL algorithms.
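Both sides of this trade-off can be illustrated with a textbook back-of-the-envelope argument. This is a toy model, not the paper's analysis. First, exhaustive breadth-first search of a tree with branching factor b down to depth d generates on the order of b^d nodes. If an intermediate reward identifies a state at depth k along the way, two searches of depth k and d - k suffice. Second, the route through an intermediate state m has length dist(s, m) + dist(m, g), which by the triangle inequality is never shorter than dist(s, g) and can be strictly longer. The sketch assumes a deterministic, fully known environment and uses plain breadth-first search rather than Q-learning or deep RL. The helper names `tree_search_cost` and `bfs_distance`, the parameters `B`, `D`, `K`, the grid, and the subgoal position are all hypothetical and are not taken from the paper or from MiniGrid.

```python
from collections import deque
from typing import Optional


def tree_search_cost(branching: int, depth: int) -> int:
    """Nodes generated by exhaustive breadth-first search of a complete
    `branching`-ary tree down to `depth` (the root is depth 0)."""
    return sum(branching ** i for i in range(depth + 1))


def bfs_distance(grid: list[str], start: tuple[int, int],
                 goal: tuple[int, int]) -> Optional[int]:
    """Fewest 4-connected moves from start to goal; '#' cells are walls.
    Returns None when the goal is unreachable."""
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    frontier = deque([start])
    while frontier:
        r, c = frontier.popleft()
        if (r, c) == goal:
            return dist[(r, c)]
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in dist:
                dist[(nr, nc)] = dist[(r, c)] + 1
                frontier.append((nr, nc))
    return None


# Toy cost model. With only a sparse terminal reward, nothing marks the
# intermediate states, so the planner searches the full depth D at once.
B, D, K = 3, 8, 4  # hypothetical branching factor, goal depth, subgoal depth
sparse_cost = tree_search_cost(B, D)
# An intermediate reward at a (hypothetical) state of depth K splits the
# problem into two shallower searches.
subgoal_cost = tree_search_cost(B, K) + tree_search_cost(B, D - K)
print(sparse_cost, subgoal_cost)  # 9841 242

# Path length: open 4x4 grid, start top-left, goal bottom-left, and a
# hypothetical subgoal that is NOT on any shortest path.
GRID = ["....", "....", "....", "...."]
start, subgoal, goal = (0, 0), (1, 3), (3, 0)
direct_len = bfs_distance(GRID, start, goal)
subgoal_len = (bfs_distance(GRID, start, subgoal)
               + bfs_distance(GRID, subgoal, goal))
print(direct_len, subgoal_len)  # 3 9
```

In this toy setting, the subgoal cuts the generated nodes from 9,841 to 242. However, the route forced through the subgoal is 9 moves long, while the shortest path is 3. Both figures depend entirely on the chosen parameters and on where the hypothetical subgoal sits. If the subgoal lies on a shortest path, the composite route is optimal as well.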
