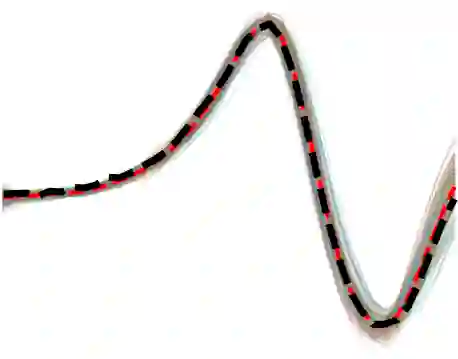

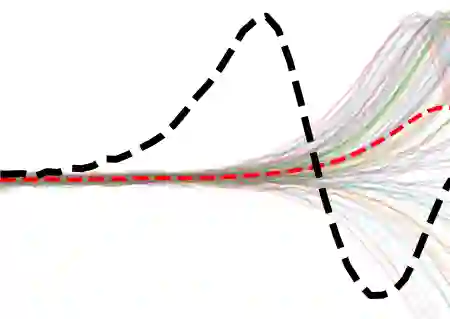

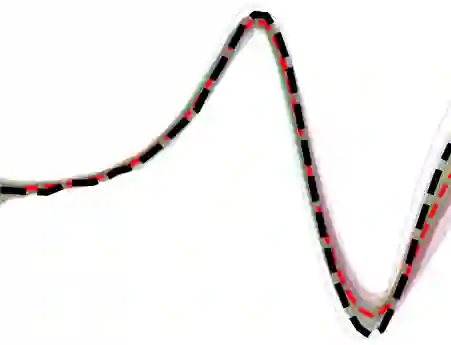

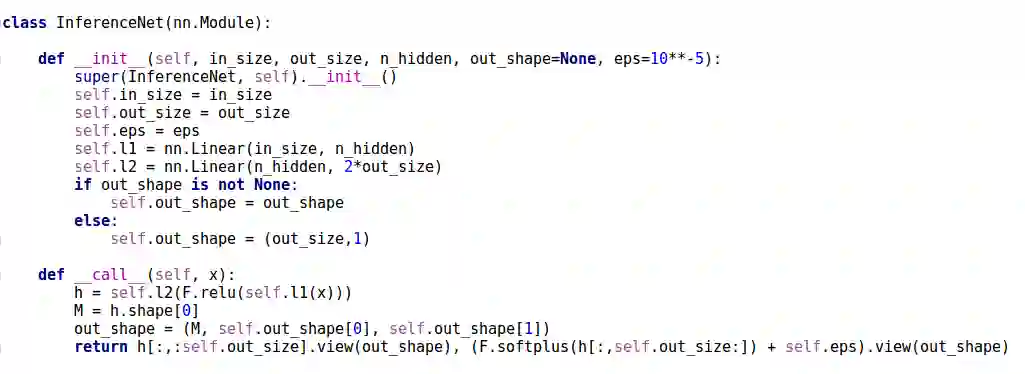

The automation of probabilistic reasoning is one of the primary aims of machine learning. Recently, the confluence of variational inference and deep learning has led to powerful and flexible automatic inference methods that can be trained by stochastic gradient descent. In particular, normalizing flows are highly parameterized deep models that can fit arbitrarily complex posterior densities. However, normalizing flows struggle in highly structured probabilistic programs because they need to relearn the forward pass of the program. Automatic structured variational inference (ASVI) remedies this problem by constructing variational programs that embed the forward pass. Here, we combine the flexibility of normalizing flows with the prior-embedding property of ASVI in a new family of variational programs, which we call cascading flows. A cascading flows program interposes a newly designed highway flow architecture between the conditional distributions of the prior program so as to steer it toward the observed data. These programs can be constructed automatically from an input probabilistic program and can also be amortized automatically. We evaluate the new variational programs on a series of structured inference problems and find that cascading flows perform much better than both normalizing flows and ASVI across a large set of these problems.
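The idea of interposing a gated transform between the prior's conditional distributions can be illustrated with a small, stdlib-only sketch. Everything here is an assumption for illustration, not the paper's implementation: the "input program" is a hypothetical toy Gaussian random walk with Gaussian observations, and `HighwayStep` is a hypothetical scalar gated-residual bijection `f(z) = lam * z + (1 - lam) * g(z)` standing in for the paper's highway flow, whose exact architecture (and amortization) is not reproduced. The names `normal_logpdf`, `HighwayStep`, `sample_variational`, `log_joint` and `elbo_estimate` are likewise invented for this example. The sketch shows the two properties the abstract describes: each prior conditional receives the already-transformed parent sample, so corrections cascade through the forward pass, and with every gate at `lam = 1` the variational program reduces exactly to the prior program.

```python
import math
import random
from dataclasses import dataclass


def normal_logpdf(x: float, mean: float, std: float) -> float:
    """Log density of N(mean, std**2) evaluated at x."""
    return -0.5 * math.log(2.0 * math.pi * std**2) - (x - mean) ** 2 / (2.0 * std**2)


@dataclass
class HighwayStep:
    """Hypothetical scalar gated-residual ("highway-style") bijection.

    f(z) = lam * z + (1 - lam) * g(z), with g(z) = exp(s) * z + b + tanh(z).
    g is strictly increasing, so f is invertible for any lam in (0, 1].
    At lam = 1, f is the identity and the prior conditional is left unchanged.
    """

    lam: float      # gate in (0, 1]; a free parameter in this sketch
    s: float = 0.0  # log-scale of the affine part of g
    b: float = 0.0  # shift of the affine part of g

    def forward(self, z: float) -> tuple[float, float]:
        """Return (f(z), log f'(z)); f' > 0, so no absolute value is needed."""
        g = math.exp(self.s) * z + self.b + math.tanh(z)
        dg = math.exp(self.s) + 1.0 - math.tanh(z) ** 2
        x = self.lam * z + (1.0 - self.lam) * g
        log_det = math.log(self.lam + (1.0 - self.lam) * dg)
        return x, log_det


def sample_variational(steps: list[HighwayStep], prior_std: float,
                       rng: random.Random) -> tuple[list[float], float]:
    """Run the prior program forward, correcting each latent with a highway step.

    Each prior conditional p(x_t | x_{t-1}) receives the *transformed* parent,
    so the corrections cascade down the program. Returns (samples, log q).
    """
    xs: list[float] = []
    log_q, parent = 0.0, 0.0
    for step in steps:
        z = rng.gauss(parent, prior_std)  # sample the prior conditional
        x, log_det = step.forward(z)      # apply the gated correction
        # Change of variables: log q(x_t | x_{t-1}) = log p(z_t | x_{t-1}) - log f'(z_t)
        log_q += normal_logpdf(z, parent, prior_std) - log_det
        xs.append(x)
        parent = x
    return xs, log_q


def log_joint(xs: list[float], ys: list[float], prior_std: float, obs_std: float) -> float:
    """log p(x, y) for the toy model x_1 ~ N(0, prior_std^2),
    x_t ~ N(x_{t-1}, prior_std^2), y_t ~ N(x_t, obs_std^2)."""
    lp, parent = 0.0, 0.0
    for x, y in zip(xs, ys):
        lp += normal_logpdf(x, parent, prior_std) + normal_logpdf(y, x, obs_std)
        parent = x
    return lp


def elbo_estimate(steps: list[HighwayStep], ys: list[float], prior_std: float,
                  obs_std: float, n_samples: int = 1000, seed: int = 0) -> float:
    """Monte Carlo estimate of E_q[log p(x, y) - log q(x)]."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        xs, log_q = sample_variational(steps, prior_std, rng)
        total += log_joint(xs, ys, prior_std, obs_std) - log_q
    return total / n_samples
```

Setting every `lam` to 1 makes `log q` equal to the prior log density of the sampled path, which is the prior-embedding property; lowering the gates lets each step deviate from the prior toward whatever the ELBO rewards. Actually fitting `lam`, `s` and `b` would mean maximizing `elbo_estimate` by stochastic gradient ascent in an autodiff framework, which this stdlib-only sketch deliberately omits.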

翻译:概率推理的自动化是机器学习的主要目标之一。 最近,变异推理和深层次学习的结合导致了强大和灵活的自动推论方法,这些方法可以通过随机梯度梯度下降来训练。 特别是, 正常流是高度参数化的深模型, 能够任意复杂的外表密度。 但是, 在高度结构化的概率推理程序上, 流的正常化是高度结构化的概率推论, 因为它们需要将程序前方通道重新复制出来。 自动结构化的变异推论( ASVI) 通过构建嵌入前方通道的变异程序来补救这一问题。 在这里, 我们把自动变异性流的灵活性和ASI的先前渗透属性结合起来, 在一个新的变异程序组合中, 我们称之为渐渐变流。 串流程序将新设计的高速公路流结构结构在前程序有条件分布之间, 从而将它引向观测到观测到的数据。 这些方案可以自动地从输入变异性程序构建成型, 并且也可以自动和解。 我们评估了新变式方案在结构化过程中的大规模变现过程中的运行情况。