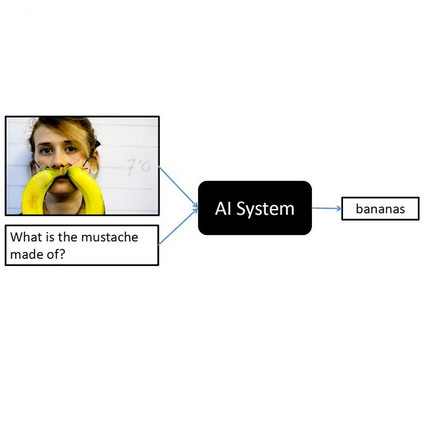

Owing to significant advances in Natural Language Processing and Computer Vision models, Visual Question Answering (VQA) systems are becoming more capable. However, they remain error-prone when dealing with relatively complex questions. It is therefore important to understand the behaviour of VQA models before relying on their results. In this paper, we introduce an interpretability approach for VQA models based on generating counterfactual images. Specifically, the generated image should differ from the original image as little as possible while leading the VQA model to give a different answer. In addition, our approach ensures that the generated image is realistic. Since quantitative metrics cannot be employed to evaluate the interpretability of the model, we carried out a user study to assess different aspects of our approach. Beyond interpreting the results of VQA models on single images, the obtained results and the discussion provide an extensive explanation of VQA models' behaviour.
