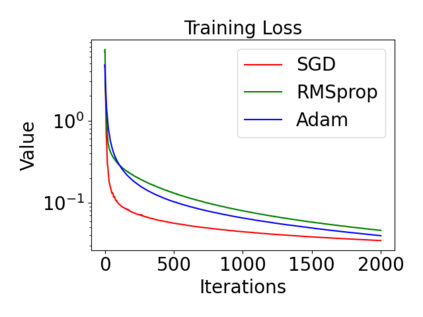

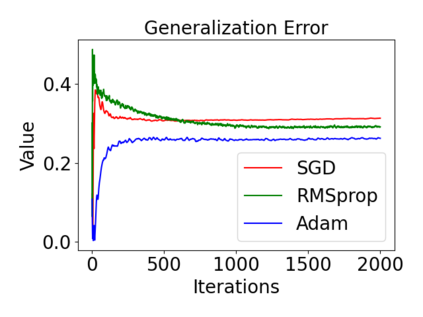

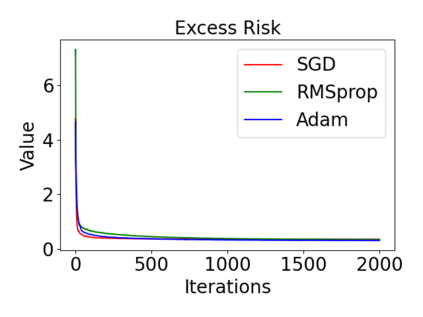

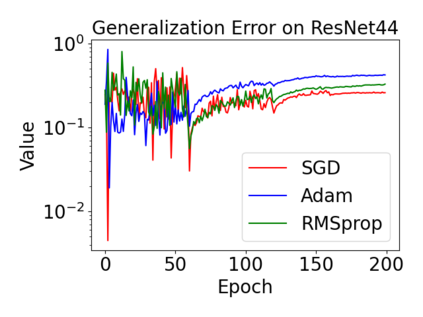

In this paper, we provide a unified analysis of the excess risk of a model trained by a proper algorithm, covering both smooth convex and smooth non-convex loss functions. In contrast to existing bounds in the literature, which depend on the number of iteration steps, our bounds on the excess risk do not diverge as the number of iterations grows. This underscores that, at least for smooth loss functions, the excess risk can be guaranteed after training. To obtain these bounds, we develop a technique based on algorithmic stability and a non-asymptotic characterization of the empirical risk landscape. Using this technique, we prove that the model obtained by a proper algorithm generalizes. Specifically, for non-convex losses, the conclusion is obtained by applying the technique and analyzing the stability of a constructed auxiliary algorithm. Combining this with some properties of the empirical risk landscape and some classical optimization results, we derive convergent upper bounds on the excess risk in both the convex and non-convex regimes.
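For orientation, the standard decomposition that stability-based excess risk analyses typically start from can be sketched as follows. The notation is an assumption introduced here for illustration and is not taken from the paper: $S = \{z_1, \dots, z_n\}$ is an i.i.d. training sample, $f(w, z)$ is the loss, $R(w) = \mathbb{E}_z[f(w, z)]$ is the population risk, $R_S(w)$ is the empirical risk, $w_t$ is the model after $t$ iterations, and $w^*$ is a minimizer of $R$ (assumed to exist). The paper's own argument may be organized differently.

```latex
% Assumed notation (not from the paper): R = population risk, R_S = empirical risk,
% w_t = trained model after t iterations, w^* = a minimizer of R.
\begin{align*}
R(w_t) - R(w^*)
  &= \underbrace{R(w_t) - R_S(w_t)}_{\text{generalization gap}}
   + \underbrace{R_S(w_t) - R_S(w^*)}_{\le\, R_S(w_t) - \min_w R_S(w)}
   + \underbrace{R_S(w^*) - R(w^*)}_{\text{zero mean over } S} \\[4pt]
\mathbb{E}_S\!\left[ R(w_t) - R(w^*) \right]
  &\le \mathbb{E}_S\!\left[ R(w_t) - R_S(w_t) \right]
   + \mathbb{E}_S\!\left[ R_S(w_t) - \min_w R_S(w) \right].
\end{align*}
```

The third term vanishes in expectation because $w^*$ does not depend on $S$. The first term on the right is the quantity that algorithmic stability controls, and the second is an optimization error that classical convergence results address. An excess risk bound that does not diverge with $t$ requires both terms to stay bounded as the number of iterations grows.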
