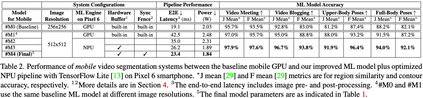

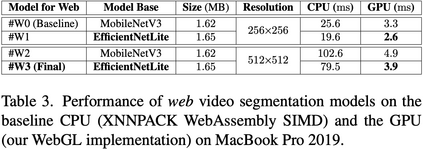

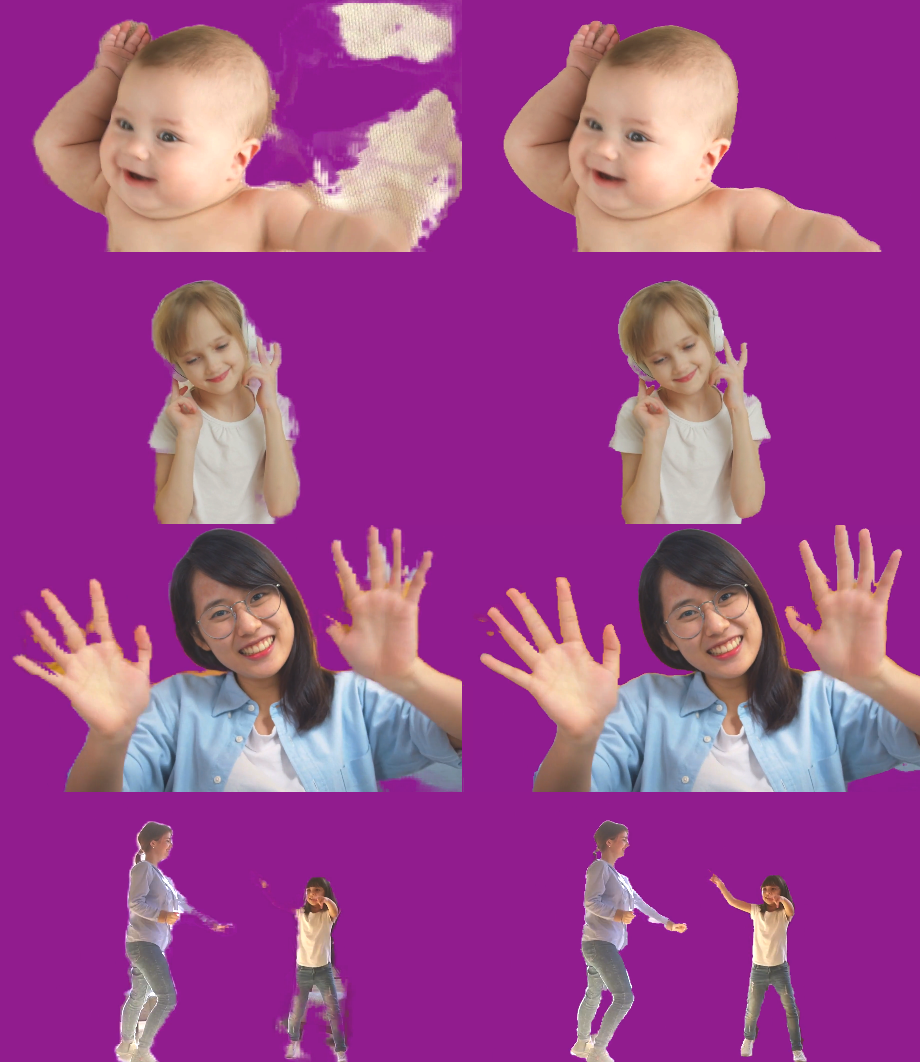

We introduce an efficient video segmentation system for resource-limited edge devices that leverages heterogeneous compute. Specifically, we design network models by searching over multiple dimensions of neural-architecture and operation specifications on top of already lightweight backbones, targeting commercially available edge inference engines. We further analyze and optimize the heterogeneous data flows in our system across the CPU, the GPU, and the NPU. Empirically, our approach integrates well into our real-time AR system, enabling markedly higher accuracy at quadrupled effective resolution while also achieving much shorter end-to-end latency, a much higher frame rate, and even lower power consumption on edge platforms.
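
The abstract does not detail the search procedure, so the following is only a minimal sketch of the general idea of a multi-dimensional, latency-constrained search, under stated assumptions. The three dimensions (`width_mult`, `resolution`, `decoder_ch`), the toy latency model `estimated_latency_ms`, and the accuracy proxy `proxy_score` are all hypothetical stand-ins. A real system targeting an edge inference engine would measure latency on the device and evaluate accuracy on validation data.

```python
import itertools
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    width_mult: float  # hypothetical channel-width multiplier on the backbone
    resolution: int    # hypothetical square input resolution in pixels
    decoder_ch: int    # hypothetical channel count of the segmentation decoder


# Hypothetical search space: each entry is one dimension of the specification.
SEARCH_SPACE = {
    "width_mult": (0.5, 0.75, 1.0),
    "resolution": (128, 256, 512),
    "decoder_ch": (32, 64, 128),
}


def estimated_latency_ms(c: Candidate) -> float:
    """Toy cost model (assumption): backbone cost scales with width^2 * pixels,
    decoder cost with decoder channels * pixels. Not measured on any device."""
    pixels = c.resolution * c.resolution
    backbone = 1e-4 * (c.width_mult ** 2) * pixels
    decoder = 5e-7 * c.decoder_ch * pixels
    return backbone + decoder


def proxy_score(c: Candidate) -> float:
    """Toy accuracy proxy (assumption): larger models and inputs score higher."""
    return c.width_mult + c.resolution / 256 + c.decoder_ch / 128


def search(budget_ms: float) -> Optional[Candidate]:
    """Exhaustively enumerate the joint space and keep the best candidate
    whose estimated latency fits within the budget (None if nothing fits)."""
    best: Optional[Candidate] = None
    for w, r, d in itertools.product(*SEARCH_SPACE.values()):
        cand = Candidate(width_mult=w, resolution=r, decoder_ch=d)
        if estimated_latency_ms(cand) > budget_ms:
            continue  # reject candidates that exceed the latency budget
        if best is None or proxy_score(cand) > proxy_score(best):
            best = cand
    return best


if __name__ == "__main__":
    print(search(10.0))
    print(search(30.0))
```

With these toy numbers, `search(10.0)` returns `Candidate(width_mult=0.75, resolution=256, decoder_ch=128)`, while a looser 30 ms budget trades width for a 512-pixel input. Searching the dimensions jointly, rather than one at a time, is what exposes such trade-offs; exhaustive enumeration is used here only because the toy space is tiny.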

翻译:我们为资源有限的边缘装置引入了高效的视频分割系统,利用多种计算法。 具体地说,我们设计网络模型,在已经轻度骨干之上,以商业上可获得的边缘推断引擎为目标,对神经结构和操作的规格进行多个层面的搜索,我们进一步分析和优化我们系统在CPU、GPU和NPU上的差异性数据流动。我们的方法在实时AR系统中进行了大量的经验性考虑,通过四倍有效的分辨率,使得精准度显著提高,但端到端的延迟度要短得多,框架率要高得多,边缘平台上的电能消耗甚至更低。