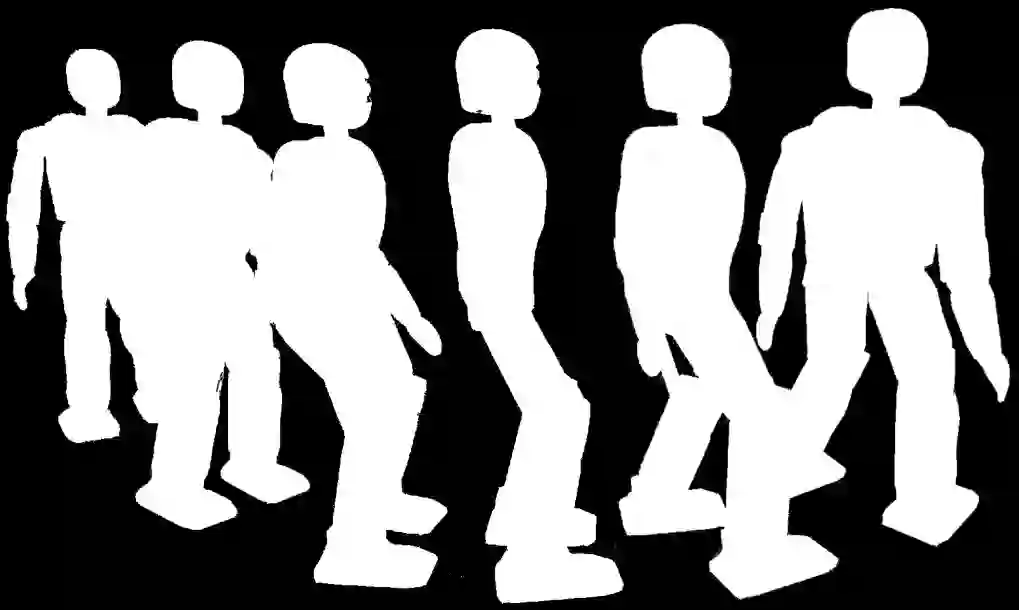

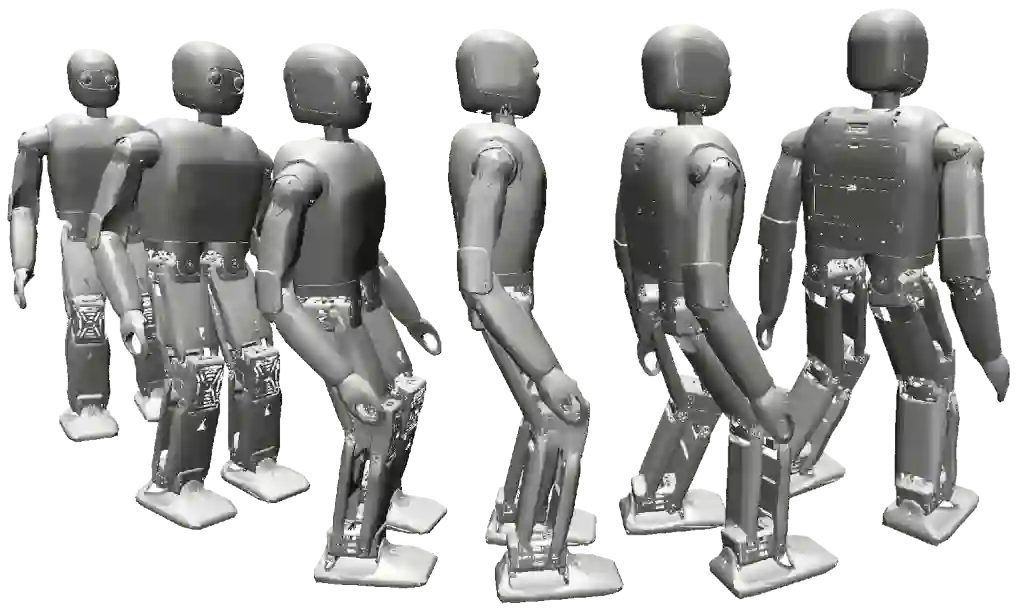

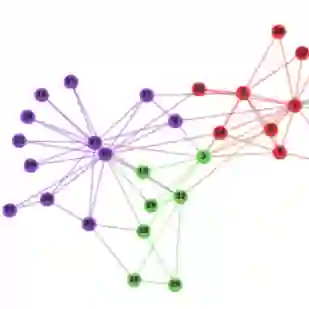

Bipedal walking is one of the most difficult but exciting challenges in robotics. The difficulties arise from complex, high-dimensional dynamics and from sensing and actuation limitations, compounded by real-time and computational constraints. Deep Reinforcement Learning (DRL) holds the promise of addressing these issues by fully exploiting the robot dynamics with minimal hand-engineering. In this paper, we propose a novel DRL approach that enables an agent to learn omnidirectional locomotion for humanoid (bipedal) robots. Notably, the locomotion behaviors are achieved by a single control policy (a single neural network). We achieve this by introducing a new curriculum learning method that gradually increases task difficulty by scheduling the target velocities. In addition, our method does not require reference motions, which facilitates its application to robots with different kinematics and reduces the overall complexity. Finally, we present several sim-to-real transfer strategies that allow us to transfer the learned policy to a real humanoid robot.
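
The abstract describes the curriculum only as "scheduling target velocities" to raise task difficulty gradually; it does not give the schedule shape, the velocity limits, or whether progression is time-based or performance-based. The sketch below is therefore an illustrative assumption, not the authors' method: a hypothetical `VelocityCurriculum` class that widens the sampling range for the commanded forward velocity, lateral velocity, and yaw rate linearly with training steps, and samples a target command uniformly within the current range. All limits and the `start_fraction` and `ramp_steps` parameters are made-up placeholders.

```python
import random
from dataclasses import dataclass


@dataclass
class VelocityCurriculum:
    """Hypothetical curriculum that widens the range of commanded velocities.

    Every limit and the linear, step-based schedule are illustrative
    assumptions; they are not taken from the paper.
    """
    max_vx: float = 1.0          # assumed forward/backward speed limit [m/s]
    max_vy: float = 0.5          # assumed lateral speed limit [m/s]
    max_yaw: float = 1.0         # assumed turning-rate limit [rad/s]
    start_fraction: float = 0.1  # fraction of the full range used at step 0
    ramp_steps: int = 1_000_000  # steps until the full range is unlocked

    def fraction(self, step: int) -> float:
        """Interpolate linearly from start_fraction to 1.0, clamped to that interval."""
        progress = min(max(step / self.ramp_steps, 0.0), 1.0)
        return self.start_fraction + (1.0 - self.start_fraction) * progress

    def bounds(self, step: int) -> tuple[float, float, float]:
        """Current symmetric limits for (vx, vy, yaw_rate)."""
        f = self.fraction(step)
        return (f * self.max_vx, f * self.max_vy, f * self.max_yaw)

    def sample(self, step: int, rng: random.Random) -> tuple[float, float, float]:
        """Draw a target (vx, vy, yaw_rate) uniformly within the current limits."""
        bx, by, byaw = self.bounds(step)
        return (rng.uniform(-bx, bx), rng.uniform(-by, by), rng.uniform(-byaw, byaw))


if __name__ == "__main__":
    curriculum = VelocityCurriculum()
    rng = random.Random(0)
    for step in (0, 250_000, 500_000, 1_000_000):
        print(step, curriculum.bounds(step), curriculum.sample(step, rng))
```

In a training loop, `sample` would typically be called at each episode reset so that the single policy sees progressively faster and more varied omnidirectional commands. A performance-gated variant, which widens the range only once tracking error falls below a threshold, is an equally plausible reading of "scheduling" and would replace `fraction` with a rule driven by episode statistics.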
