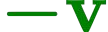

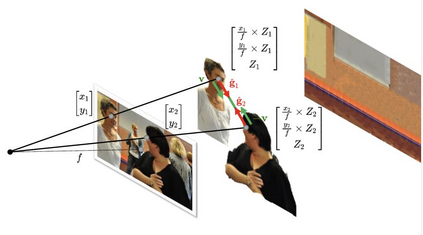

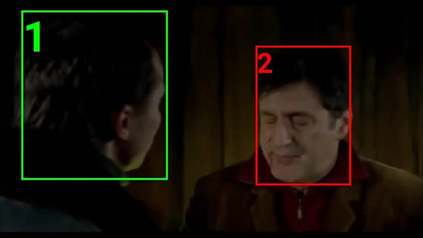

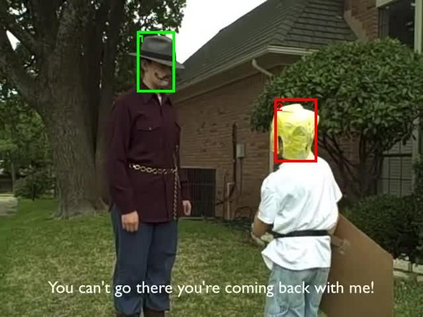

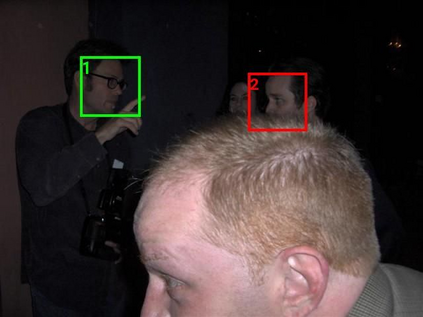

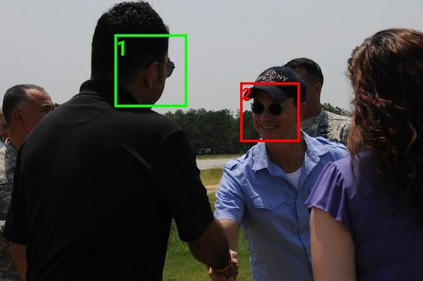

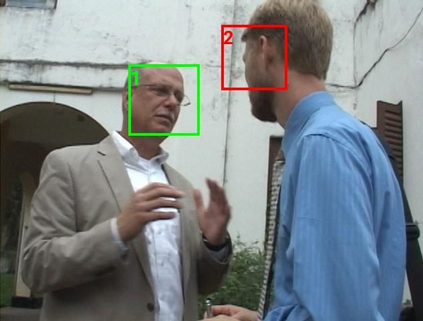

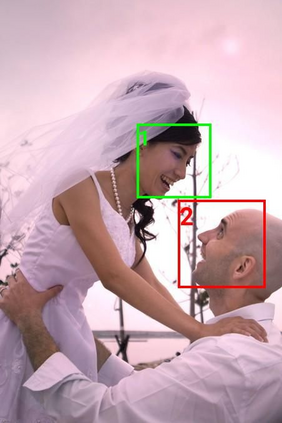

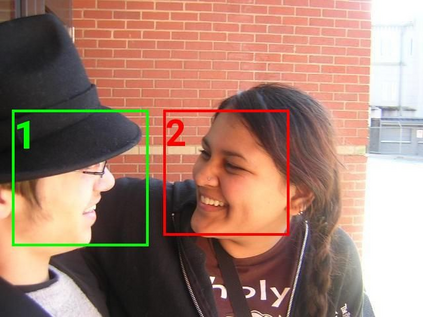

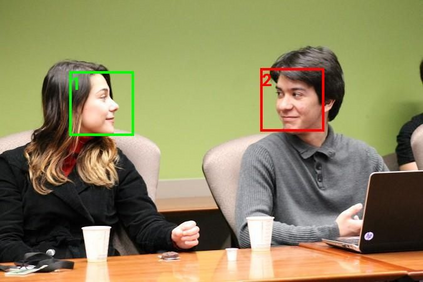

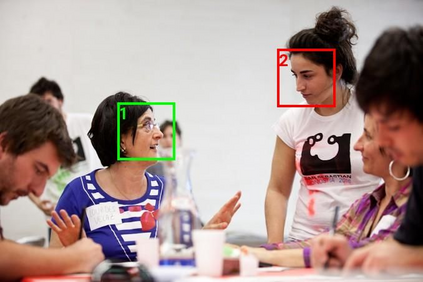

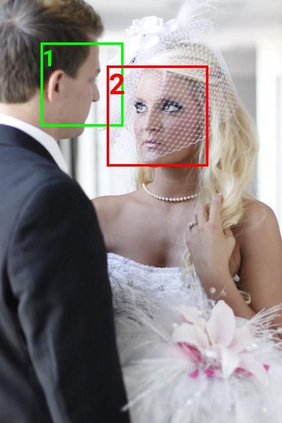

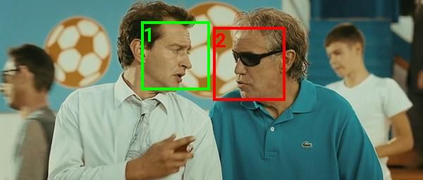

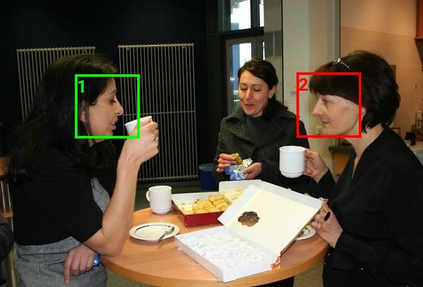

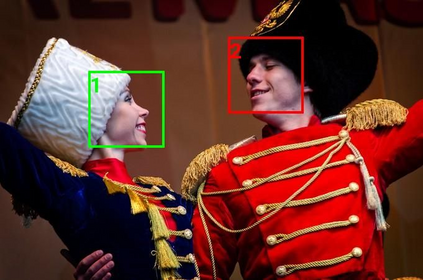

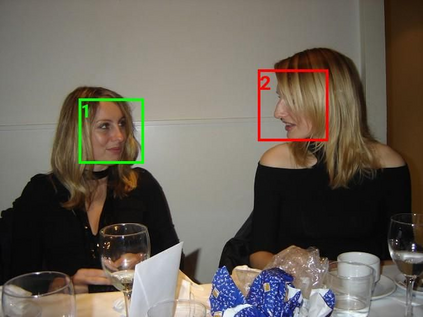

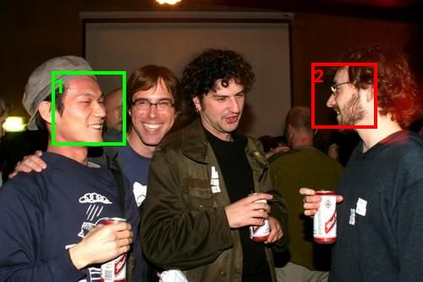

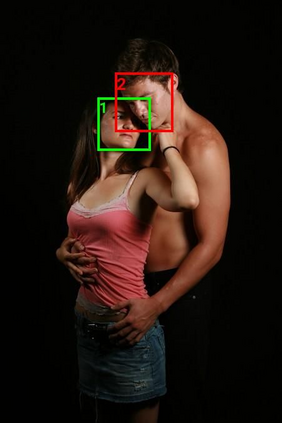

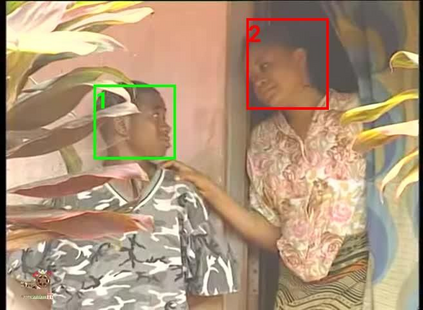

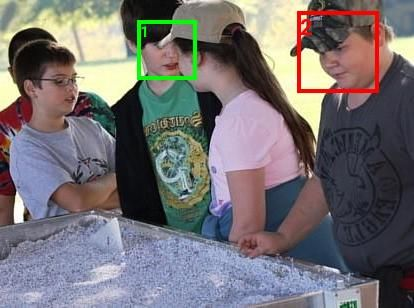

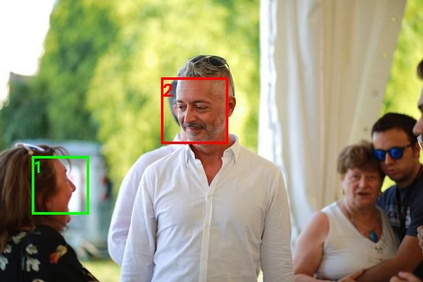

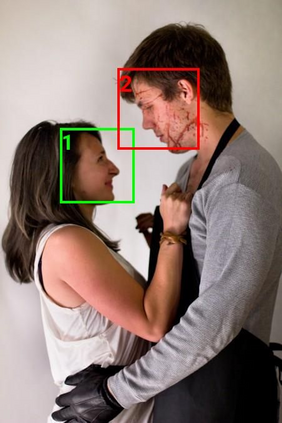

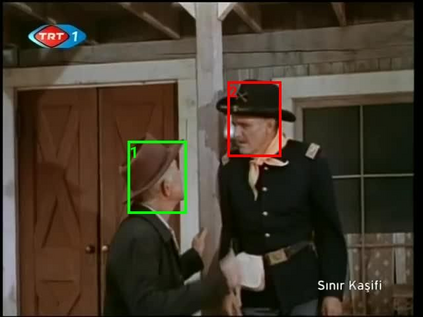

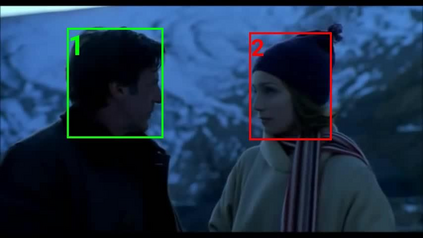

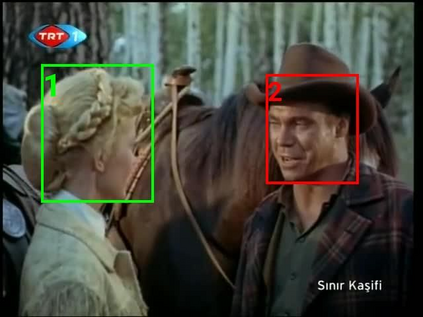

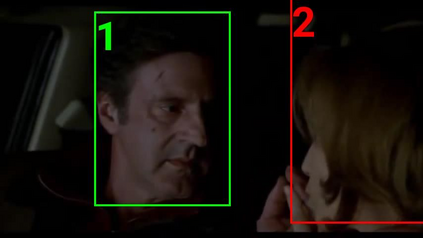

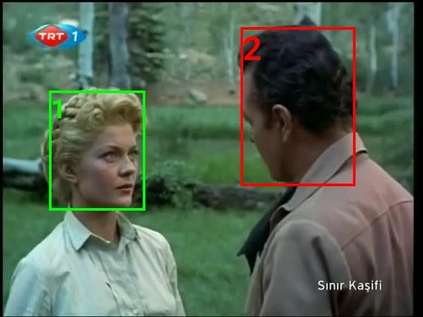

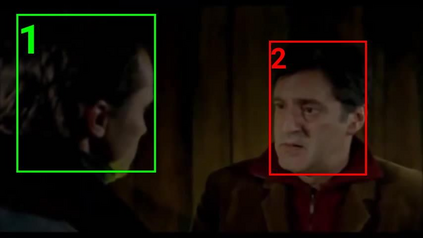

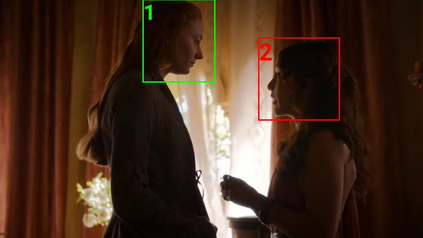

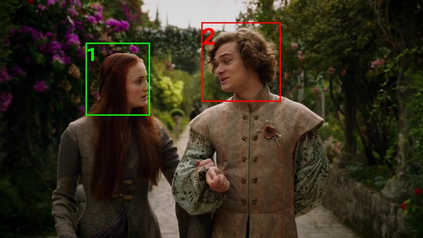

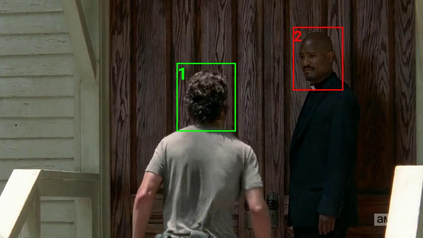

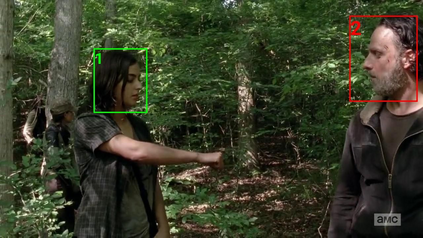

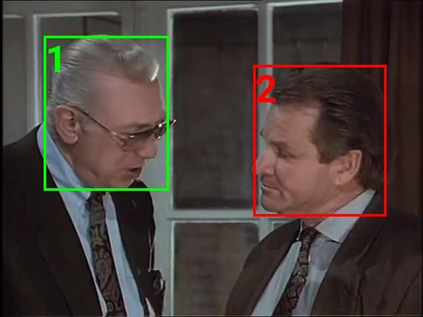

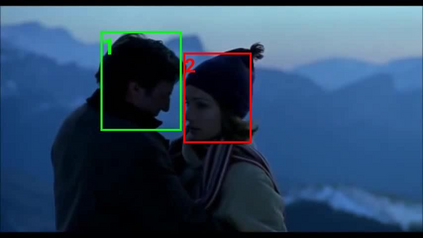

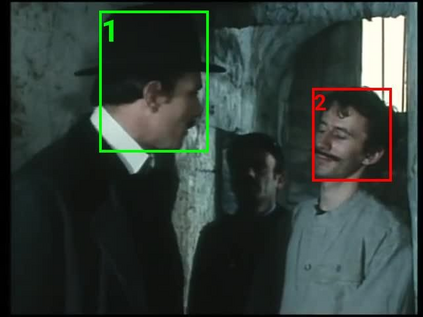

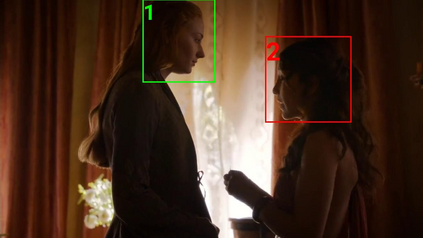

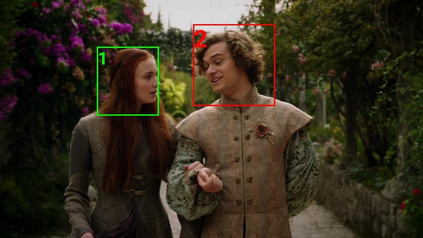

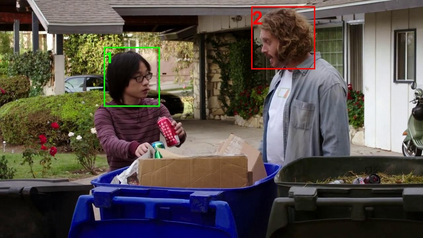

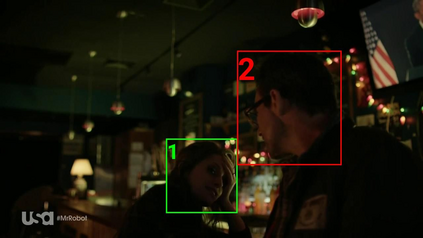

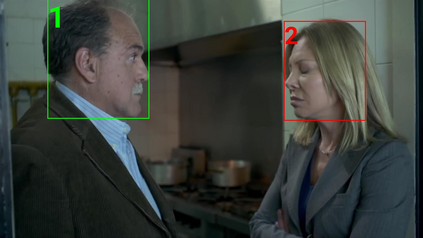

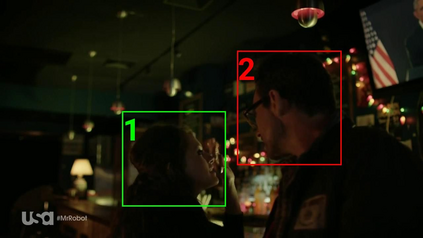

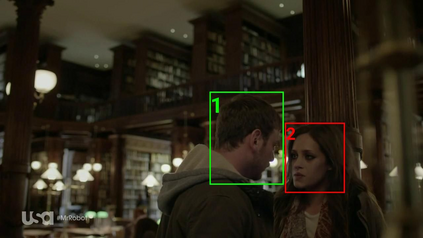

Mutual gaze detection, i.e., predicting whether or not two people are looking at each other, plays an important role in understanding human interactions. In this work, we focus on image-based mutual gaze detection and propose a simple and effective approach that boosts performance by adding an auxiliary 3D gaze estimation task during training. The boost comes at no additional labeling cost: the 3D gaze estimation branch is trained with pseudo 3D gaze labels deduced from the mutual gaze labels. Because the head image encoder is shared between the 3D gaze estimation and mutual gaze detection branches, it learns better head features than it does when the mutual gaze detection branch is trained alone. Experimental results on three image datasets show that the proposed approach significantly improves detection performance without additional annotations. This work also introduces a new image dataset of 29.2K images containing 33.1K human pairs annotated with mutual gaze labels.
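The abstract does not spell out how pseudo 3D gaze labels are deduced, so the following is only a minimal sketch under stated assumptions, not the authors' method. It assumes 3D head-center positions are available for both people in a pair (how they are obtained is left open here), and that in a pair labeled as mutual gaze each person looks at the other's head center, so person A's pseudo gaze is the unit vector from A to B and B's is its negation. Pairs without mutual gaze are assumed to contribute only to the detection loss, since they do not pin down a gaze direction. The names `pseudo_gaze_labels`, `joint_loss`, and `aux_weight` are hypothetical. The shared head encoder is not shown: in a real model, both loss terms would backpropagate into it, which is where the auxiliary task helps.

```python
import numpy as np


def pseudo_gaze_labels(head_a, head_b):
    """Hypothetical pseudo labels for a mutual-gaze pair.

    Assumes each person looks at the other's 3D head center, so A's gaze is
    the unit vector from A to B, and B's gaze is the opposite direction.
    """
    head_a = np.asarray(head_a, dtype=float)
    head_b = np.asarray(head_b, dtype=float)
    direction = head_b - head_a
    norm = np.linalg.norm(direction)
    if norm == 0:
        raise ValueError("head positions coincide; gaze direction undefined")
    gaze_a = direction / norm
    return gaze_a, -gaze_a


def bce(prob, label, eps=1e-7):
    """Binary cross-entropy for one predicted mutual-gaze probability."""
    prob = np.clip(prob, eps, 1.0 - eps)
    return -(label * np.log(prob) + (1 - label) * np.log(1.0 - prob))


def cosine_loss(pred, target):
    """1 - cosine similarity between a predicted and a target gaze vector."""
    pred = np.asarray(pred, dtype=float)
    pred = pred / np.linalg.norm(pred)
    return 1.0 - float(np.dot(pred, target))


def joint_loss(mutual_prob, mutual_label, pred_gaze_a, pred_gaze_b,
               head_a, head_b, aux_weight=1.0):
    """Detection loss plus an auxiliary 3D gaze loss on positive pairs only.

    aux_weight is a hypothetical weighting hyperparameter.
    """
    loss = bce(mutual_prob, mutual_label)
    if mutual_label == 1:
        # Only mutual-gaze pairs yield a pseudo 3D gaze label (assumption).
        gaze_a, gaze_b = pseudo_gaze_labels(head_a, head_b)
        loss += aux_weight * (cosine_loss(pred_gaze_a, gaze_a)
                              + cosine_loss(pred_gaze_b, gaze_b))
    return float(loss)
```

For example, with heads at `[0, 0, 2]` and `[1, 0, 2]`, `pseudo_gaze_labels` gives `[1, 0, 0]` for the first person and `[-1, 0, 0]` for the second. A positive pair whose predicted gazes match these directions incurs only the detection loss, while predictions pointing the wrong way add up to `2 * aux_weight` per person.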

翻译:共同凝视检测,即预测两个人是否互相看对方,在理解人类互动方面发挥着重要作用。在这项工作中,我们侧重于基于图像的相互凝视检测任务,提出一种简单有效的方法,在培训期间使用辅助的 3D 视觉估测任务来提高性能。我们通过使用从共同凝视标签推导出来的伪3D 视觉估测分支来培训3D 视觉估测分支,在不增加标签的情况下实现了性能提升,而无需额外成本。通过在3D 视觉估测和相互凝视检测分支之间共享头部图像编码,我们取得比学习更好的头部特征,仅通过培训共同凝视检测分支。三个图像数据集的实验结果显示,拟议方法在没有附加说明的情况下大大改进了检测性能。这项工作还引入了一个新的图像数据集,由29.2K图像中带有共同凝视标签的33.1K对人组成加注的相框组成。